This thread marks the formal launch of “Finding primes” as the massively collaborative research project Polymath4. It supersedes the proposal thread, which has become rather lengthy, as the official “research” thread for this project. (Alongside this research thread, we also have the discussion thread, to oversee the research thread and to provide a forum for casual participants, and the wiki page, to store all the settled knowledge and accumulated insights gained from the project to date.) See also this list of general polymath rules.

The basic problem we are studying here can be stated in a number of equivalent forms:

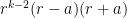

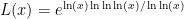

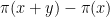

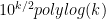

Problem 1. (Finding primes) Find a deterministic algorithm which, when given an integer k, is guaranteed to locate a prime of at least k digits in length in as quick a time as possible (ideally, in time polynomial in k, i.e. after $O(k^{O(1)})$ steps).

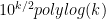

Problem 2. (Finding primes, alternate version) Find a deterministic algorithm which, after running for k steps, is guaranteed to locate as large a prime as possible (ideally, with a polynomial number of digits, i.e. at least $k^c$ digits for some $c > 0$.)

To make the problem easier, we will assume the existence of a primality oracle, which can test whether any given number is prime in O(1) time, as well as a factoring oracle, which will provide all the factors of a given number in O(1) time. (Note that the latter supersedes the former.) The primality oracle can be provided essentially for free, due to polynomial-time deterministic primality algorithms such as the AKS primality test; the factoring oracle is somewhat more expensive (there are deterministic factoring algorithms, such as the quadratic sieve, which are suspected to be subexponential in running time, but no polynomial-time algorithm is known), but seems to simplify the problem substantially.

The problem comes in at least three forms: a strong form, a weak form, and a very weak form.

- Strong form: Deterministically find a prime of at least k digits in poly(k) time.

- Weak form: Deterministically find a prime of at least k digits in $\exp(o(k))$ time, or equivalently find a prime larger than $k^C$ in time $O(k)$ for any fixed constant C.

- Very weak form: Deterministically find a prime of at least k digits in significantly less than $10^k$ time, or equivalently find a prime significantly larger than $k$ in time $O(k)$.

The problem in all of these forms remains open, even assuming a factoring oracle and strong number-theoretic hypotheses such as GRH. One of the main difficulties is that we are seeking a deterministic guarantee that the algorithm works in all cases, which is very different from a heuristic argument that the algorithm “should” work in “most” cases. (Note that there are already several efficient probabilistic or heuristic prime generation algorithms in the literature, e.g. this one, which already suffice for all practical purposes; the question here is purely theoretical.) In other words, rather than working in some sort of “average-case” environment where probabilistic heuristics are expected to be valid, one should instead imagine a “Murphy’s law” or “worst-case” scenario in which the primes are situated in a “maximally unfriendly” manner. The trick is to ensure that the algorithm remains efficient and successful even in the worst-case scenario.

Below the fold, we will give some partial results, and some promising avenues of attack to explore. Anyone is welcome to comment on these strategies, and to propose new ones. (If you want to participate in a more “casual” manner, you can ask questions on the discussion thread for this project.)

Also, if anything from the previous thread that you feel is relevant has been missed in the text below, please feel free to recall it in the comments to this thread.

— Partial results —

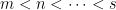

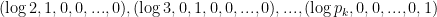

One way to proceed is to find a sparse explicitly enumerable set of large integers which is guaranteed to contain a prime. One can then query each element of that set in turn with the primality oracle until a large prime is found.

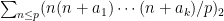

For instance, Bertrand’s postulate shows that there is at least one prime of k digits in length, which can then be located in $O(10^k)$ time. A result of Baker and Harman shows that there is a prime between n and $n + O(n^{0.535})$ for all sufficiently large n, so by using the interval $[10^k, 10^k + O(10^{0.535k})]$ one can find a k-digit prime in $O(10^{0.535k})$ time. This is currently the best result known unconditionally. Assuming the Riemann hypothesis, there is a prime between n and $n + O(\sqrt{n} \log n)$, giving the slight improvement to $O(k \cdot 10^{k/2})$. (Assuming GRH, it seems that one may be able to improve this a little bit using the W-trick, but this has not been checked.) There is a conjecture of Cramér that the largest prime gap between primes of size n is $O(\log^2 n)$, which would give a substantial improvement, to $O(k^2)$, thus solving the strong form of the problem; but we have no idea how to establish this conjecture, though it is heuristically plausible.
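To make the trivial baseline concrete, here is a minimal Python sketch of the Bertrand-postulate search. It assumes trial division as a stand-in for the primality oracle (AKS would be the polynomial-time choice), and the function names are hypothetical. It counts oracle calls, which is the quantity the run-time bounds above refer to.

```python
def is_prime(n: int) -> bool:
    """Deterministic trial-division primality test.

    A stand-in for the O(1) primality oracle; only practical for small n.
    """
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def find_k_digit_prime(k: int) -> tuple[int, int]:
    """Scan 10^(k-1), 10^(k-1) + 1, ... up to 2 * 10^(k-1).

    Bertrand's postulate guarantees a prime in this range, so the scan always
    succeeds; the worst-case number of oracle calls is O(10^k).
    Returns (prime, number_of_oracle_calls).
    """
    low = 10 ** (k - 1)
    for calls, n in enumerate(range(low, 2 * low + 1), start=1):
        if is_prime(n):
            return n, calls
    raise AssertionError("unreachable, by Bertrand's postulate")


for k in range(1, 8):
    print(k, find_k_digit_prime(k))
```

In practice the scan stops after a handful of calls; the point of the problem is that nothing better than the Bertrand (or Baker–Harman, or RH) bound is *guaranteed*.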

In a slightly different direction, there is a result of Friedlander and Iwaniec that there are infinitely many primes of the form $a^2 + b^4$, and in fact their argument shows that there is a k-digit prime of this form for all large k. This gives a run time of $O(10^{3k/4})$. A subsequent result of Heath-Brown achieves a similar result for primes of the form $a^3 + 2b^3$, which gives a slightly better run time of $O(10^{2k/3})$, but this is still inferior to the Baker-Harman bound.
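A sketch of searching the sparser Friedlander–Iwaniec set instead of a full interval, assuming trial division for the primality oracle; the helper names are hypothetical. Enumerating $a^2 + b^4$ directly is what makes the set “explicitly enumerable”, and the printed ratio compares the number of k-digit candidates with $10^{3k/4}$. This only finds primes for small k; it says nothing about the worst case.

```python
import math


def is_prime(n: int) -> bool:
    """Trial-division stand-in for the primality oracle."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def values_a2_plus_b4(lo: int, hi: int) -> list[int]:
    """Sorted distinct n in [lo, hi) with n = a^2 + b^4 for integers a, b >= 1."""
    found = set()
    b = 1
    while b ** 4 < hi:
        for a in range(1, math.isqrt(hi - b ** 4) + 1):
            n = a * a + b ** 4
            if lo <= n < hi:
                found.add(n)
        b += 1
    return sorted(found)


def k_digit_prime_a2_plus_b4(k: int):
    """Return (smallest k-digit prime of the form a^2 + b^4, number of candidates)."""
    candidates = values_a2_plus_b4(10 ** (k - 1), 10 ** k)
    for n in candidates:
        if is_prime(n):
            return n, len(candidates)
    return None, len(candidates)


for k in range(2, 9):
    p, size = k_digit_prime_a2_plus_b4(k)
    # size / 10^(3k/4) should stay roughly bounded if the set has size ~ X^(3/4)
    print(k, p, size, round(size / 10 ** (0.75 * k), 3))
```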

Strategy 1: Find some even sparser sets than these for which we actually have a chance of proving that the set captures infinitely many primes.

(For instance, assuming a sufficiently quantitative version of Schinzel’s hypothesis H, one would be able to answer the weak form of the problem, though this hypothesis is far from being resolved in general. More generally, there are many sparse sets which heuristically have an extremely good chance of capturing a lot of primes, but the trick is to find a set for which we can provably establish the existence of primes.)

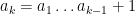

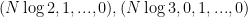

Another approach is based on variants of Euclid’s proof of the infinitude of primes, and relies on the factoring oracle. For instance, one can generate an infinite sequence of primes $p_1, p_2, \ldots$ recursively by setting each $p_{n+1}$ to be the largest prime factor of $p_1 \cdots p_n + 1$ (here we use the factoring oracle). After k steps, this is guaranteed to generate a prime which is at least as large as the $k$-th prime (since the k primes generated are distinct), which is about $k \log k$ by the prime number theorem. (In general, it is likely that one would find much larger primes than this, but remember that we are trying to control the worst-case scenario, not the average-case one.)
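The Euclid-style recursion can be sketched in a few lines, assuming trial division as a stand-in for the factoring oracle and the (arbitrary) choice $p_1 = 2$; the function names are hypothetical. Note how the product being factored grows very quickly, which is why only the first few terms are feasible here.

```python
def largest_prime_factor(n: int) -> int:
    """Largest prime factor of n >= 2, by trial division (factoring-oracle stand-in)."""
    largest = 1
    d = 2
    while d * d <= n:
        while n % d == 0:
            largest = d
            n //= d
        d += 1
    if n > 1:
        largest = n
    return largest


def euclid_largest_factor_sequence(length: int) -> list[int]:
    """p_1 = 2 and p_{n+1} = largest prime factor of p_1 * ... * p_n + 1."""
    primes = [2]
    product = 2
    while len(primes) < length:
        p = largest_prime_factor(product + 1)
        primes.append(p)
        product *= p
    return primes


print(euclid_largest_factor_sequence(7))
```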

Strategy 2. Adapt the Euclid-style algorithms to get larger primes than this in the worst-case scenario.

For comparison, note that the Riemann hypothesis argument given above would give a prime of size about $k^2$ or more in k steps.

A third strategy is to look for numbers that are not S-smooth for some threshold S, i.e. contain at least one prime factor larger than S. Factoring such a number will clearly generate a prime larger than S. (Furthermore, if the non-S-smooth number is of size less than $2S$, it must in fact be prime.) One strategy is to scan an interval [n, n+a] for non-smooth numbers, thus leading to

Problem 3. For which n, a, S can we rigorously show that [n,n+a] contains at least one non-S-smooth number?

If we can make S much larger than a, then we are in business (e.g. if we can make S larger than $a^2$, then we will beat the RH bound). Unfortunately, if S is larger than a, we know that [0,a] does not contain any non-S-smooth number, which makes it difficult to see how to show that [n,n+a] will contain any non-S-smooth numbers, since sieve theory techniques are generally insensitive to the starting point of an interval.
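A minimal sketch of the interval scan behind Problem 3, assuming trial division as the factoring oracle; `first_non_smooth` is a hypothetical name. Any hit m with m < 2S is itself prime, since m = p·j with p > S forces j = 1. The third call below illustrates the obstruction just mentioned: when S ≥ a, the interval starting near 0 contains only S-smooth numbers.

```python
def largest_prime_factor(n: int) -> int:
    """Largest prime factor of n >= 2 (returns 1 for n = 1), by trial division."""
    largest = 1
    d = 2
    while d * d <= n:
        while n % d == 0:
            largest = d
            n //= d
        d += 1
    if n > 1:
        largest = n
    return largest


def first_non_smooth(n: int, a: int, S: int):
    """Return (m, p) for the first m in [n, n + a] whose largest prime factor p
    exceeds S, or None if every number in the interval is S-smooth."""
    for m in range(n, n + a + 1):
        p = largest_prime_factor(m)
        if p > S:
            return m, p
    return None


print(first_non_smooth(1000, 5, 40))   # a prime factor > 40 found in [1000, 1005]
print(first_non_smooth(15, 10, 10))    # the hit is below 2S, so it is prime
print(first_non_smooth(2, 8, 10))      # [2, 10] is entirely 10-smooth
```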

One interesting proposal to do this, raised by Tim Gowers, is to try to use additive combinatorics. Let J be a set of primes larger than S (e.g. the primes between S and 2S), and let K be the set of logarithms of J. If we can show that one of the iterated sumsets $K + \cdots + K$ intersects the interval $[\log n, \log(n+a)]$, then we have shown that [n,n+a] contains at least one non-S-smooth number. The hope is that additive combinatorial methods can provide some dispersal and mixing properties of these iterated sumsets which will exclude a “Murphy’s law” type scenario in which the interval $[\log n, \log(n+a)]$ is always avoided.
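The sumset condition can be tested by brute force for tiny parameters. This sketch simply enumerates all multisets of primes from $J = (S, 2S]$; `primes_in` and `sumset_hits` are hypothetical names. Since $\log p_1 + \log p_2 + \log p_3 \in [\log n, \log(n+a)]$ exactly when $n \le p_1 p_2 p_3 \le n + a$, it compares integer products rather than floating-point logarithms. The second call shows a window that the triple sumset misses, a miniature “Murphy’s law” case.

```python
import math
from itertools import combinations_with_replacement


def primes_in(lo: int, hi: int) -> list[int]:
    """Primes p with lo < p <= hi, via a sieve of Eratosthenes (hi >= 2)."""
    sieve = bytearray([1]) * (hi + 1)
    sieve[0] = 0
    sieve[1] = 0
    for i in range(2, math.isqrt(hi) + 1):
        if sieve[i]:
            sieve[i * i :: i] = bytearray(len(range(i * i, hi + 1, i)))
    return [p for p in range(lo + 1, hi + 1) if sieve[p]]


def sumset_hits(n: int, a: int, S: int, copies: int = 3) -> list[tuple[int, ...]]:
    """Multisets of `copies` primes from (S, 2S] whose product lies in [n, n + a].

    Each hit is an element of K + ... + K (copies times) landing in
    [log n, log(n + a)], and certifies a non-S-smooth number in [n, n + a].
    """
    J = primes_in(S, 2 * S)
    return [
        combo
        for combo in combinations_with_replacement(J, copies)
        if n <= math.prod(combo) <= n + a
    ]


print(sumset_hits(1331, 0, 10))     # 11 * 11 * 11 = 1331
print(sumset_hits(1332, 10, 10))    # no triple product in [1332, 1342]
print(sumset_hits(2000, 100, 10))
```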

Strategy 3. Find good values of n, a, S for which the above argument has a reasonable chance of working.

A fourth strategy would be to try to generate pseudoprimes rather than primes, e.g. to generate numbers obeying congruences such as $2^{n-1} \equiv 1 \pmod n$. It is not clear though how to efficiently achieve this, especially in the worst-case scenario.
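To see how close such a congruence comes to characterizing the primes, this sketch lists the composite numbers satisfying $2^{n-1} \equiv 1 \pmod n$ below a bound, using Python's built-in three-argument `pow` for modular exponentiation and trial division (an assumption, for simplicity) to recognize composites. Every odd prime satisfies the congruence by Fermat's little theorem; the composites that also satisfy it are the base-2 Fermat pseudoprimes.

```python
def is_prime(n: int) -> bool:
    """Trial-division primality test, used only to flag composites."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def base2_fermat_pseudoprimes(limit: int) -> list[int]:
    """Composite n < limit satisfying 2^(n-1) = 1 (mod n).

    Only odd n are scanned: for even n > 2, 2^(n-1) mod n is even,
    so it cannot equal 1.
    """
    return [
        n
        for n in range(3, limit, 2)
        if pow(2, n - 1, n) == 1 and not is_prime(n)
    ]


print(base2_fermat_pseudoprimes(2000))
```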

A fifth strategy would be to try to understand the following question:

Problem 4. Given a set A of numbers, what is an efficient way to deterministically find a k-digit number which is not divisible by any element of A?

Note that Problem 1 is basically the special case of Problem 4 when A is equal to all the natural numbers (or primes) less than $10^{k/2}$.

A last minute addition to the above strategies, suggested by Ernie Croot:

Strategy 6. Assume the existence of a Siegel zero at some modulus M, which implies that the Jacobi symbol $\left(\frac{p}{M}\right)$ is equal to -1 for all small primes p (below some threshold depending on M). Can one use this sort of information to locate a large prime, perhaps by using a suitable quadratic form? Note that M might not be known in advance. This could lead to a non-trivial disjunction: either we can solve the primes problem, or we can assume that there are no Siegel zeroes in a certain range.

There may be additional viable strategies beyond the above ones. Please feel free to share your thoughts, but remember that we will eventually need a rigorous worst-case analysis for any proposed strategy; heuristics are not sufficient by themselves to resolve the problem.

— Other variants —

There are some variants of the problem that have already been solved, for instance finding irreducible polynomials of a certain degree over a finite field is easy, as is finding square-free numbers of a certain size. It may be worth looking for additional variant problems which are easier but are not yet solved.

We have an argument that shows that in the presence of some oracles, and replacing primes by a similarly dense set of “pseudoprimes”, the problem cannot be solved. It may be of interest to refine this argument to show that even if one assumes complexity-theoretic conjectures such as P=BPP, the general problem of finding pseudoprimes is not efficiently solvable. (We know that the problem is solvable though if P=NP.)

The following toy problem has been advanced:

Problem 5. (Finding consecutive square-free numbers) Find a deterministic algorithm which, when given an integer k, is guaranteed to locate a pair n,n+1 of consecutive square-free numbers of at least k digits in length in as quick a time as possible.

Note that finding one large square-free number is easy: just multiply lots of distinct small primes together. Also, as the density of square-free numbers is $6/\pi^2 \approx 0.61$, which exceeds 1/2, a counting argument shows that pairs of consecutive square-free numbers exist in abundance, and are thus easy to find probabilistically. Testing a number for square-freeness is trivial with a factoring oracle. (Question: is there a deterministic polynomial-time algorithm to test a k-digit number for square-freeness without assuming any oracles?)
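For Problem 5, the obvious deterministic procedure is a naive upward scan. This sketch tests square-freeness by trial division (standing in for the factoring oracle); the function names are hypothetical. The density argument guarantees that pairs exist, but the code itself carries no worst-case bound on how far the scan may have to go, which is exactly the difficulty.

```python
def is_squarefree(n: int) -> bool:
    """True if no square d^2 with d >= 2 divides n (trial division)."""
    d = 2
    while d * d <= n:
        if n % (d * d) == 0:
            return False
        d += 1
    return True


def first_squarefree_pair(k: int) -> int:
    """Smallest k-digit n with n and n + 1 both square-free (naive scan)."""
    n = 10 ** (k - 1)
    while not (is_squarefree(n) and is_squarefree(n + 1)):
        n += 1
    return n


for k in range(1, 10):
    print(k, first_squarefree_pair(k))
```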

For Question 6, it has been suggested that Sylvester’s sequence may be a good candidate; interestingly, this sequence has also been proposed for Strategy 2.

[…] primes” has now officially launched at the polymath blog as Polymath4, with the opening of a fresh research thread to discuss the following problem: Problem 1. Find a deterministic algorithm which, when given an […]

Pingback by Polymath4 (”Finding Primes”) now officially active « What’s new — August 9, 2009 @ 4:08 am |

While scanning through the literature on Sylvester’s sequence, I found the following theorem of Odoni: the number of primes less than $x$ that divide some Sylvester number is $O\left(\frac{x}{\log x \, \log\log\log x}\right)$, where $\log\log\log x$ denotes a triple iterated logarithm. This should give a (very) slight improvement to Strategy 2, namely we should be guaranteed to find a prime of size $\gg k \log k \log\log\log k$ in $k$ steps. Of course, this is still far from our best method. Odoni’s paper is: On the prime divisors of the sequence $w_{n+1} = 1 + w_1 \cdots w_n$, J. London Math. Soc. (2) 32(1):1–11, 1985.

Comment by Mark Lewko — August 9, 2009 @ 4:20 am |

That’s interesting… it suggests a new strategy:

Strategy 7: Find an explicit sequence of mutually coprime numbers $a_1, a_2, a_3, \ldots$, such that the number of primes less than n that divide any element of the sequence is significantly less than $n/\log n$.

If, for instance, only $O(n^\theta)$ primes less than n divide an element of the sequence, then we can find a prime of size about $k^{1/\theta}$ by factoring $a_1, \ldots, a_k$. Having $\theta$ less than 0.535 would beat our current unconditional world record, but any $\theta < 1$ would be interesting, I think.

Comment by Terence Tao — August 9, 2009 @ 4:33 am |

Here’s a thought. Any square-free number which is the sum of two squares can’t have any prime factor equal to 3 mod 4, which already knocks out half the primes from contention.

More generally, if a square-free number is of the form $a^2 + kb^2$, then it can’t have any prime factor p for which -k is not a square modulo p. So, if one can find a square-free number that has many representations of the form $a^2 + kb^2$ for various values of k, its prime factors have to avoid a large number of residue classes (by quadratic reciprocity) and are thus forced to lie in a sparse set. If one can then find many coprime numbers of this form, one may begin to find large prime factors, following Strategy 7.

Admittedly, we’re already struggling to generate square-free numbers with extra properties, but suppose we ignore that issue for the moment – is it easy to generate numbers which are simultaneously representable by a large number of quadratic forms?

Comment by Terence Tao — August 9, 2009 @ 5:26 am |

I’m not sure what you mean – any number $n$ has that form for any $a$ with $a^2 < n$, taking $k = n - a^2$ and $b = 1$. There are other values of $k$ (with $b > 1$) whenever $n - a^2$ has a square factor.

This reminds me of OEIS sequence A074885, of numbers $n$ for which all positive numbers $n - a^2$ are square-free. There seem to be exactly 436 of these numbers.

Comment by Michael Peake — August 9, 2009 @ 12:48 pm |

Oh, I was thinking of keeping k fixed and small.

For instance, given an integer n of the form a^2+3b^2, quadratic reciprocity tells us that every prime factor of n (other than 3) that doesn’t divide it twice is equal to 1 mod 3. (In particular, if n is square-free, every prime factor is of this form).

Thus, if we can find n which is of the form a^2+3b^2 and also of the form c^2+d^2, and is square-free, larger than 6, then it has a prime factor which is both 1 mod 4 and 1 mod 3, i.e. 1 mod 12. So if one can generate a lot of coprime numbers of this form and factor them, one generates primes in a mildly sparse sequence (1/4 of all primes). If one could keep doing this with more and more quadratic forms then one starts getting a reasonably sparse sequence of primes…
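The mod-12 claim is easy to spot-check numerically. This sketch, with hypothetical helper names and trial division as the factoring oracle, collects square-free n below a bound that are both of the form $a^2 + 3b^2$ and $c^2 + d^2$, and checks that every prime factor is 1 mod 12. (A square-free number of the form $a^2 + 3b^2$ is automatically odd, since an even value forces a and b to have equal parity and hence $4 \mid a^2 + 3b^2$.)

```python
import math


def is_squarefree(n: int) -> bool:
    """True if no square d^2 with d >= 2 divides n (trial division)."""
    d = 2
    while d * d <= n:
        if n % (d * d) == 0:
            return False
        d += 1
    return True


def prime_factors(n: int) -> set[int]:
    """Distinct prime factors of n by trial division."""
    factors = set()
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.add(d)
            n //= d
        d += 1
    if n > 1:
        factors.add(n)
    return factors


def represented(n: int, k: int) -> bool:
    """True if n = a^2 + k*b^2 for some integers a, b >= 0."""
    b = 0
    while k * b * b <= n:
        r = n - k * b * b
        if math.isqrt(r) ** 2 == r:
            return True
        b += 1
    return False


def doubly_represented_squarefree(limit: int) -> list[int]:
    """Square-free n in [2, limit) of the form a^2 + 3b^2 and also c^2 + d^2."""
    return [
        n
        for n in range(2, limit)
        if is_squarefree(n) and represented(n, 3) and represented(n, 1)
    ]


nums = doubly_represented_squarefree(2000)
print(nums[:8])
# Every prime factor of every such n should be 1 mod 12, per the argument above.
print(all(p % 12 == 1 for n in nums for p in prime_factors(n)))
```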

Comment by Terence Tao — August 9, 2009 @ 2:33 pm |

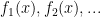

It seems like the easiest “polynomial” way to do this is to take integer values of the cyclotomic polynomials, e.g. integer values of $\Phi_n(x)$ have only prime factors dividing $n$ or congruent to $1 \bmod n$. Formulas (32) and (33) on the Mathworld article make it possible to recursively compute, say, $\Phi_n(x)$ for $n$ the product of the first $k$ primes given $x$.

Unfortunately, this runs into the same problem of feeding double-exponential numbers into the factoring oracle mentioned below, since the Fermat numbers are just $F_k = 2^{2^k} + 1 = \Phi_{2^{k+1}}(2)$. To make this feasible one would have to be able to get a lot of information by taking many integer values of a cyclotomic polynomial of reasonably small degree. Alternately one could homogenize into a polynomial of two variables, mimicking the quadratic form case, and take many small coprime values of the variables. I don’t know if there’s a good theory of simultaneous representation by quadratic forms in the literature, but then again I’m not really a number-theorist.
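The prime-divisor property of cyclotomic values can be spot-checked numerically. This sketch computes $\Phi_n(x)$ through the standard Möbius product $\Phi_n(x) = \prod_{d \mid n} (x^d - 1)^{\mu(n/d)}$ rather than the MathWorld recursion mentioned above, and uses trial division as the factoring oracle; all function names are hypothetical. It also confirms $\Phi_{2^{k+1}}(2) = 2^{2^k} + 1$ for small k.

```python
def factorize(n: int) -> dict[int, int]:
    """Prime factorization {p: exponent} by trial division (oracle stand-in)."""
    factors = {}
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors[d] = factors.get(d, 0) + 1
            n //= d
        d += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


def mobius(n: int) -> int:
    """Moebius function mu(n)."""
    f = factorize(n)
    if any(e > 1 for e in f.values()):
        return 0
    return -1 if len(f) % 2 else 1


def cyclotomic_value(n: int, x: int) -> int:
    """Phi_n(x) for an integer x >= 2, via prod_{d | n} (x^d - 1)^{mu(n/d)}."""
    num, den = 1, 1
    for d in range(1, n + 1):
        if n % d == 0:
            mu = mobius(n // d)
            if mu == 1:
                num *= x ** d - 1
            elif mu == -1:
                den *= x ** d - 1
    return num // den  # the division is exact


def check_prime_divisors(n: int, x: int = 2) -> bool:
    """Every prime p dividing Phi_n(x) satisfies p | n or p = 1 (mod n)."""
    return all(n % p == 0 or (p - 1) % n == 0
               for p in factorize(cyclotomic_value(n, x)))


print([cyclotomic_value(n, 2) for n in range(1, 13)])
print(all(check_prime_divisors(n) for n in range(1, 41)))
```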

Comment by Qiaochu Yuan — August 9, 2009 @ 4:19 pm |

Are the cyclotomic polynomials (say, $\Phi_k(2)$) efficiently computable (i.e., polynomial-time in the length of k) in general? The advantage of the Fermat numbers, at least, is that one can use repeated squaring to cut the number of multiplications down to k (and if there is a way to get around the double-exponential problem, this’ll likely be at its heart), but in general $\Phi_k$ has as many as $\varphi(k) + 1$ terms, and is irreducible, so repeated squaring doesn’t obviously apply.

Comment by Harrison — August 9, 2009 @ 4:44 pm |

Formulas (32) and (33) take the place of repeated squaring, but the number of factors in the final product is the number of prime factors of $n$. I think one still gets an essentially polynomial-time computation though, especially as the computations involved in many of the factors can be recycled. (This is all assuming we used the W-trick or some variant thereof, so we know the factorization of $n$.)

So combining some observations it may or may not be a good idea to compute something like $\Phi_n(x, y)$ (the homogeneous version). Unfortunately this is still essentially double-exponential as far as I can tell.

Comment by Qiaochu Yuan — August 9, 2009 @ 6:40 pm |

I don’t think we quite have that, but it’s known that the prime divisors of Fermat numbers $F_n = 2^{2^n} + 1$ are fairly sparse, since the sum of their reciprocals converges.

But actually we don’t even need that result: it’s a very, very old theorem of Euler that all the prime factors of the nth Fermat number are at least exponential in n! (In particular, they have the form $m \cdot 2^{n+1} + 1$.) The problem, of course, is again that Fermat numbers are exponentially long, and it doesn’t seem possible to get around the double-exponential bind. (Although I’d be thrilled to be wrong about that!)

Comment by Harrison — August 9, 2009 @ 3:30 pm |

The Křížek, Luca and Somer result (On the convergence of series of reciprocals of primes related to the Fermat numbers, J. Number Theory 97 (2002), 95–112) actually establishes that the number of primes less than $x$ that divide some Fermat number is $O(\sqrt{x}/\log x)$. Moreover, the Fermat numbers are known to be pairwise coprime. Shouldn’t this give Strategy 7 with $\theta$ near $1/2$?

Comment by Mark Lewko — August 9, 2009 @ 6:21 pm |

Well, yes and no, as I understand it. We do have an explicit sequence whose set of prime factors is very sparse, and so we can indeed find a prime of size about $k^2$ by factoring the first k Fermat numbers. But the sequence grows so fast that the rate-determining step, as it is, isn’t factoring the integers but just writing them down! So computing these factors actually takes way more than k steps. (I could be wrong, though, since I’m a bit fuzzy on what exactly constitutes a “step.”)

Still, it’s an interesting result, and I’d be interested in seeing their proof method.

Comment by Harrison — August 9, 2009 @ 7:10 pm |

There are at least two other places in the thread I could put this comment, but here goes:

One can get an improvement in the base of the double logarithm by considering the homogeneous polynomial $x^{2^n} + y^{2^n}$ and then taking $x, y$ to be conjugate algebraic integers of small absolute value. The obvious choice is to take $x, y$ to be the golden ratios $\frac{1 \pm \sqrt{5}}{2}$, which gives the Lucas numbers $L_{2^n}$. These can be computed roughly as efficiently as the Fermat numbers thanks to the recurrence $L_{2^{n+1}} = L_{2^n}^2 - 2$ for $n \ge 1$, and they have similar divisibility properties to the Fermat numbers, but grow more slowly. (Still double-exponential, of course.) More precisely, any prime factor of $L_{2^n}$ is congruent to $\pm 1 \bmod 2^{n+1}$.
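A quick numerical check of the Lucas-number claim, assuming trial division as the factoring oracle; the function names are hypothetical. The repeated-squaring recurrence starts from $L_2 = 3$, and for each $n$ the residues of the prime factors of $L_{2^n}$ modulo $2^{n+1}$ are printed; they should all be $1$ or $2^{n+1} - 1$.

```python
def prime_factors(n: int) -> set[int]:
    """Distinct prime factors of n by trial division (factoring-oracle stand-in)."""
    factors = set()
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.add(d)
            n //= d
        d += 1
    if n > 1:
        factors.add(n)
    return factors


def lucas_powers_of_two(count: int) -> list[int]:
    """[L_2, L_4, L_8, ...]: L_2 = 3 and L_{2^(n+1)} = L_{2^n}^2 - 2 for n >= 1."""
    values = [3]
    while len(values) < count:
        values.append(values[-1] ** 2 - 2)
    return values


for n, value in enumerate(lucas_powers_of_two(6), start=1):
    modulus = 2 ** (n + 1)
    residues = sorted({p % modulus for p in prime_factors(value)})
    print(f"L_{2 ** n} = {value}, prime factors mod {modulus}: {residues}")
```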

This opens up the possibility of looking at other conjugate algebraic integers $x, y$, which corresponds to looking at other sequences satisfying two-term linear recurrences or, if you’re Richard Lipton, taking traces of $2 \times 2$ matrices with integer entries. As I mentioned on his post, the Mahler measure problem and other problems related to finding algebraic integers with all conjugates small in absolute value seem to be relevant here. (Lipton’s ideas about factoring could also be relevant in other ways to this problem.)

Of course these remarks extend to other cyclotomic polynomials as well.

What happens when you plug conjugate algebraic integers into a quadratic form?

Comment by Qiaochu Yuan — August 9, 2009 @ 6:24 pm |

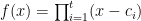

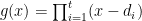

Euclid’s argument is really that if $p_1, \ldots, p_n$ are primes, then none of them can divide $p_1 \cdots p_n + 1$. This can be generalized: none of them can divide $\prod_{i \in I} p_i + \prod_{i \notin I} p_i$, for any subset $I \subseteq \{1, \ldots, n\}$. By considering prime divisors, a similar argument shows that if $a_1, \ldots, a_n$ are pairwise coprime, then $\prod_{i \in I} a_i + \prod_{i \notin I} a_i$ is coprime to all of them. One can also add arbitrary positive powers $e_i$ and a sign $\pm$ (which may be chosen randomly) and let $a_{n+1} = \prod_{i \in I} a_i^{e_i} \pm \prod_{i \notin I} a_i^{e_i}$. So starting with any set of coprime integers, one has many ways to extend it to an infinite sequence of coprime integers (each giving a proof that there are infinitely many primes). Starting with primes, one can also take the smallest prime divisor of $a_{n+1}$ (whenever $|a_{n+1}| > 1$) to get a sequence of distinct primes. To minimize growth, I guess one example is to pick $p_1$ to be any prime and let $p_{n+1}$ be the smallest prime divisor of $\prod_{i \in I} p_i^{e_i} \pm \prod_{i \notin I} p_i^{e_i}$, minimized over all subsets $I$, over all signs, and over a bounded set of powers $e_i$, say. I am not sure how small one can bound the growth in this case.
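A brute-force sketch of the greedy “minimize growth” construction, assuming trial division for the factoring oracle and a hypothetical exponent bound `max_exp = 2`; all names are illustrative. Subsets are encoded as bitmasks, both signs are tried, and because every $p_i$ divides exactly one of the two products, the prime found is always new. The search is exponential in the length of the sequence, so only short prefixes are practical.

```python
from itertools import product


def smallest_prime_factor(m: int) -> int:
    """Smallest prime factor of m >= 2, by trial division (factoring-oracle stand-in)."""
    d = 2
    while d * d <= m:
        if m % d == 0:
            return d
        d += 1
    return m


def next_prime(primes: list[int], max_exp: int = 2) -> int:
    """Smallest prime dividing prod_{i in I} p_i^e_i +/- prod_{i not in I} p_i^e_i,
    minimized over subsets I, both signs, and exponents 1 <= e_i <= max_exp."""
    best = None
    n = len(primes)
    for mask in range(2 ** n):
        for exps in product(range(1, max_exp + 1), repeat=n):
            a = b = 1
            for i, (p, e) in enumerate(zip(primes, exps)):
                if mask >> i & 1:
                    a *= p ** e
                else:
                    b *= p ** e
            for value in (a + b, abs(a - b)):
                if value >= 2:  # |a - b| can be 1, which has no prime divisor
                    q = smallest_prime_factor(value)
                    if best is None or q < best:
                        best = q
    return best


def chua_sequence(p1: int, length: int, max_exp: int = 2) -> list[int]:
    """Greedy sequence of distinct primes starting from the prime p1."""
    primes = [p1]
    while len(primes) < length:
        primes.append(next_prime(primes, max_exp))
    return primes


print(chua_sequence(2, 6))
```

For instance, from $2, 3, 5, 7, 11$ the choice $5 \cdot 11 - 2 \cdot 3 \cdot 7 = 13$ already attains the smallest possible new prime.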

Comment by KokSeng Chua — August 11, 2009 @ 1:07 pm |

Actually, if the Sylvester sequence is square-free, then there must be an exponentially large prime factor of the k^th element a_k, because a_k is double-exponentially large in k, and it is the product of distinct primes if it is square-free; it is an elementary result that the product of all the primes up to x is at most exponential in x (for instance, it is less than $4^x$), so the largest prime factor of a_k has to be at least exponentially large in k.

On the other hand, one is feeding in a double-exponentially large number into the factoring oracle, which is sort of cheating; it would take an exponential amount of time just to enter in the digits of this number (unless one defined it implicitly, for instance as the output of an arithmetic circuit). Even if factoring was available in polynomial time, it would still take exponential time to factor the k^th Sylvester number. The problem is that the sequence grows far too quickly…
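The growth problem is easy to see numerically. This sketch generates Sylvester's sequence via $s_0 = 2$, $s_{n+1} = s_n^2 - s_n + 1$ (equivalently $s_{n+1} = 1 + s_0 \cdots s_n$), checks pairwise coprimality with `math.gcd`, and prints bit lengths, which roughly double at each step; merely writing down the k-th term costs about $2^k$ bits. The function name is hypothetical.

```python
import math


def sylvester(count: int) -> list[int]:
    """First `count` terms: s_0 = 2 and s_{n+1} = s_n^2 - s_n + 1."""
    terms = [2]
    while len(terms) < count:
        s = terms[-1]
        terms.append(s * s - s + 1)
    return terms


terms = sylvester(10)
# Pairwise coprime, so each term contributes at least one new prime factor.
print(all(math.gcd(a, b) == 1 for i, a in enumerate(terms) for b in terms[i + 1:]))
for n, s in enumerate(terms):
    # The bit length roughly doubles at each step: double-exponential growth.
    print(n, s.bit_length())
```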

Comment by Terence Tao — August 9, 2009 @ 2:27 pm |

We can even do better than the Sylvester sequence, since it’s a result of Euler that the prime factors of the nth Fermat number $F_n = 2^{2^n} + 1$ all have the form $m \cdot 2^{n+1} + 1$, so unconditionally we can find an exponentially large prime by factoring $F_k$. But of course this sequence is also double-exponential, which I’d argue isn’t just “sort of cheating,” it is cheating! I don’t think it’s possible to escape the double-exponential bind, and as you point out, even if it were, we’d still be cheating by assuming factoring in constant time, which is only a reasonable simplification when we’re factoring polynomially-large numbers.
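Euler's theorem can be checked directly on the first few Fermat numbers with trial division (a stand-in for the factoring oracle; the helper name is hypothetical). $F_5 = 2^{32} + 1$ is the first composite one, and both of its prime factors are $1 \bmod 2^6$.

```python
def prime_factors(n: int) -> list[int]:
    """Prime factors of n with multiplicity, by trial division."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors


for n in range(6):
    F = 2 ** (2 ** n) + 1
    factors = prime_factors(F)
    # Euler: every prime factor p of F_n satisfies p = 1 (mod 2^(n+1)).
    ok = all((p - 1) % 2 ** (n + 1) == 0 for p in factors)
    print(n, F, factors, ok)
```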

Can we find an explicit sequence that’s exponential in some subexponential function $f(n)$, which is guaranteed to have large prime factors? I can’t think of a candidate, but maybe someone cleverer can.

Sorry if this double-posts; something happened to the first comment of this nature I wrote and it’s not showing up.

Comment by Harrison — August 9, 2009 @ 3:41 pm |

A slighly

Comment by Joel — August 9, 2009 @ 4:10 pm |

Sorry about my last (not)comment. I can’t erase it.

A slight improvement to that would be to use the cyclotomic polynomials, since every prime which divides $\Phi_n(x)$ (for an integer $x$) but not $n$ must be congruent to 1 modulo $n$. Making $n = 2^{k+1}$, as the degree of $\Phi_n$ is $\varphi(n) = 2^k$, we have that any prime divisor of $\Phi_n(2)$ (which is odd and about $2^{2^k}$) is bigger than $n$.

Comment by Joel Moreira — August 9, 2009 @ 4:30 pm |

See my comment above. In order for the other cyclotomic polynomials to really be practical one would have to be able to get the ratio $\varphi(n)/n$ to decrease quickly, and there’s no good way to do this other than to take $n$ to be the product of the first few primes; even then the gain is too minuscule and we’re still essentially dealing with double-exponential numbers.

Comment by Qiaochu Yuan — August 9, 2009 @ 4:35 pm |

And just so it’s clear for anyone else reading, $\Phi_{2^{k+1}}(2)$ is precisely the Fermat number $F_k = 2^{2^k} + 1$, and the bound at the end of Joel’s comment should be adjusted accordingly; that is, the result Harrison cited is actually slightly stronger than the cyclotomic result.

Comment by Qiaochu Yuan — August 9, 2009 @ 4:46 pm |

Well, using Euler’s result that all prime factors of Fermat numbers $F_n$ are at least $2^{n+1} + 1$, we at least have the ability to obtain a prime of size $\gg k$ by factoring a single k-digit number, which is comparable to what we can do from trivial methods. So any improvement on this would be somewhat nontrivial, I think.

Comment by Terence Tao — August 9, 2009 @ 6:05 pm |

I’d like to start the ball rolling with a preliminary analysis of Tim Gowers’ approach (Strategy 3).

Suppose we want to find a prime larger than some given large threshold S, assuming a factoring oracle. Right now, Bertrand’s postulate gives us an O(S) algorithm, and more fancy results in number theory give us $O(S^\theta)$ for various values of $\theta$ (3/4, 2/3, 0.535, 1/2). I think to begin with, we would be happy if the additive combinatorics approach could give an $O(S^\theta)$ algorithm for some $\theta < 1$, even if it doesn’t beat the strongest number-theoretic based algorithm for now.
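For comparison, the Bertrand baseline can be written out directly. A minimal Python sketch, with trial division standing in for the primality oracle (an illustrative assumption; in the problem each oracle call costs O(1), so the scan costs O(S)):

```python
def is_prime(m):
    """Trial-division stand-in for the primality oracle (fine for small m)."""
    if m < 2:
        return False
    if m % 2 == 0:
        return m == 2
    d = 3
    while d * d <= m:
        if m % d == 0:
            return False
        d += 2
    return True


def prime_above(S):
    """Scan S+1, ..., 2S-1; Bertrand's postulate guarantees a prime strictly
    between S and 2S for S > 1, so at most about S oracle calls are made."""
    for candidate in range(S + 1, 2 * S):
        if is_prime(candidate):
            return candidate
    raise RuntimeError("unreachable for S > 1 by Bertrand's postulate")


print(prime_above(10 ** 6))
```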

Let K be the logarithms of the primes between S and 2S. Thus this set consists of about $S/\log S$ numbers in the interval $[\log S, \log S+\log 2]$. It’s quite uniformly distributed in a Fourier sense (especially if one assumes the Riemann hypothesis).

Experience has shown that double sumsets K+K tend to be well behaved almost everywhere, but triple sumsets K+K+K and higher are well behaved everywhere. (Thus, for instance, the odd Goldbach conjecture is solved for all large odd numbers, but the even Goldbach conjecture is only known for *almost* all large even numbers, even assuming GRH.) So it seems reasonable to look at the triple sumset K+K+K, which lies in the interval $[3 \log S, 3 \log S + 3 \log 2]$.

Suppose we are looking to find a non-S-smooth number in time $O(S^{0.99})$ (say). It would suffice to show that the interval $[T, T + S^{-2.01}]$ contains an element of K+K+K for some fixed T in $[3 \log S, 3 \log S + 3 \log 2]$, e.g.  . (Exponentiating, this corresponds to an integer interval of length about $e^T S^{-2.01} \asymp S^{0.99}$, each element of which can be checked with the factoring oracle.)
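Undoing the logarithms, an element of K+K+K in $[T, T+S^{-2.01}]$ is an integer $pqr$, with $p, q, r$ prime in $[S,2S]$, lying in $[e^T, e^T(1+S^{-2.01})]$. The Python sketch below checks such an integer window directly, using trial-division factoring as a stand-in for the factoring oracle; the choice $e^T = 2S^3$ in the usage line is just an illustrative assumption, and a hit is not expected in general, since that is exactly what is in question.

```python
def prime_factorization(m):
    """Prime factors of m with multiplicity, by trial division (stand-in for the factoring oracle)."""
    factors = []
    d = 2
    while d * d <= m:
        while m % d == 0:
            factors.append(d)
            m //= d
        d += 1
    if m > 1:
        factors.append(m)
    return factors


def triple_products_in(lo, hi, S):
    """Integers in [lo, hi] of the form p*q*r with p, q, r prime in [S, 2S]."""
    hits = []
    for m in range(lo, hi + 1):
        f = prime_factorization(m)
        if len(f) == 3 and all(S <= p <= 2 * S for p in f):
            hits.append(m)
    return hits


S = 20
lo = 2 * S ** 3
print(triple_products_in(lo, lo + int(S ** 0.99), S))
```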

On the one hand, this is quite a narrow interval to hit. On the other hand, K+K+K has about $(S/\log S)^3$ triples in it, so probabilistically one has quite a good chance of catching something. But, as always, the difficulty is to get a deterministic result which works even in the worst-case scenario.

Presumably the thing to do now is fire up the circle method and write things in terms of the Fourier coefficients of K, which assuming RH should be quite small (of size about $S^{1/2}$, ignoring logs). The fact that $S^{1/2}$ is not as small as $S^{1/3}$ is a little worrying, though. (And things seem to get progressively worse as the number of sums increases.) But perhaps there is a way to tweak the method to do better. (For instance, we don’t need *all* the factors of the number to be between S and 2S; just having one of them like that would be enough.)

Comment by Terence Tao — August 9, 2009 @ 4:27 am

Hmm, the tininess of the interval $[T,T+S^{-2.01}]$ is quite discouraging. Even if one considers the larger set K + L, where L is the log-integers (which are very highly uniformly distributed), one can still miss this interval entirely. Undoing the logarithm, the point here is that an interval of the form $[N, N+S^{0.99}]$ could manage, by a perverse conspiracy, to miss all multiples of p for every prime p between S and 2S. (Indeed, by the Chinese remainder theorem, there exists some huge N, exponentially large perhaps, for which this is the case. N=1 would also work :))

But perhaps we could show by more number-theoretic means that this would not happen for polynomially-sized N. For instance, suppose somehow that $[S^2, S^2+S^{0.99}]$ managed to avoid all multiples of primes p between S and 2S. This would mean that the residues $-S^2 \bmod p$ managed to avoid the large interval $[0,S^{0.99}]$ for all such p. If one had an equidistribution result for these residues then one could perhaps get a contradiction, but I don’t see how to get this: I know of few tools for correlating residues in one modulus with residues in another (other than quadratic reciprocity, which doesn’t seem to be applicable here).
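The reformulation in terms of residues is easy to test numerically: the smallest multiple of $p$ that is at least $N$ is $N + ((-N) \bmod p)$. The Python sketch below (function names are hypothetical) decides whether $[N, N+L]$ avoids every multiple of every prime in $[S, 2S]$ via these residues, which is one way to search small cases for the conspiracy with $N = S^2$, $L = S^{0.99}$.

```python
def primes_in(lo, hi):
    """Primes p with lo <= p <= hi, via a sieve of Eratosthenes."""
    sieve = bytearray([1]) * (hi + 1)
    sieve[0] = 0
    if hi >= 1:
        sieve[1] = 0
    for d in range(2, int(hi ** 0.5) + 1):
        if sieve[d]:
            sieve[d * d :: d] = bytearray(len(range(d * d, hi + 1, d)))
    return [p for p in range(max(lo, 2), hi + 1) if sieve[p]]


def interval_misses_all(N, L, S):
    """True if [N, N + L] contains no multiple of any prime p in [S, 2S].

    The least multiple of p that is >= N equals N + ((-N) mod p), so the interval
    avoids all multiples of p exactly when the residue (-N) mod p exceeds L.
    """
    return all((-N) % p > L for p in primes_in(S, 2 * S))


for S in (50, 100, 1000):
    N, L = S * S, int(S ** 0.99)
    print(S, interval_misses_all(N, L, S))
```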

Comment by Terence Tao — August 9, 2009 @ 2:39 pm

> Hmm, the tininess of the interval [T,T+S^{-2.01}] is quite discouraging. Even if one considers the larger set K + L, where L is the log-integers (and which are very highly uniformly distributed), one can still miss this interval entirely. Undoing the logarithm, the point here is that an interval of the form [N, N+S^{0.99}] could manage, by a perverse conspiracy, to miss all multiples of p for every prime p between S and 2S. (Indeed, by the Chinese remainder theorem, we know that there exists some huge N – exponentially large perhaps – for which this is the case. Also, N=1 would also work :))

It might be instructive to state this as a negative result. I’ll take a stab at it; I’m probably not the best person to do so, but it’ll at least be instructive to me. Stated as an obstruction, this says that even when augmented with “Additive Combinatorics Lite” (in this case, Fourier analysis), sieve-theoretic methods don’t suffice to produce a small interval containing a non-smooth number. Am I close?

Comment by Harrison — August 9, 2009 @ 11:23 pm

Yes, I think this is the case, although the obstruction is not really formalised yet.

Here is a back-of-the-envelope Fourier calculation which looks a bit discouraging. Suppose one wants to show that the interval $[2N^3, 2N^3+N^{0.99}]$ contains an element of the triple product set $[N,2N] \cdot [N,2N] \cdot [N,2N]$. If we let $\mu$ be counting measure on the log-integers $\{\log n : N \leq n \leq 2N\}$, we are asking that $\mu * \mu * \mu$ gives a non-zero weight to the interval $[\log 2N^3, \log 2N^3 + O( N^{-2.01} ) ]$.

We express this Fourier-analytically, basically as a Fourier integral of $\hat\mu(\xi)^3$ over an interval $|\xi| \ll N^{2.01}$, multiplied by the normalising factor of $N^{-2.01}$.

The main term will be coming from the low frequencies $|\xi| \ll 1$, where $|\hat\mu(\xi)|$ is about N; this gives the main term of about $N^{-2.01} \cdot N^3 = N^{0.99}$, which is what one expects.

What about the error terms? Well, the Dirac spikes of $\mu$ are distance about 1/N apart. For $N \ll |\xi| \ll N^{2.01}$, there’s no particular reason for any coherence in the Fourier sum in $\hat\mu(\xi)$, and so I would expect the sum to behave randomly, i.e. $|\hat\mu(\xi)| \approx N^{1/2}$ in this region. (In fact, RH basically would give this to us.) This leads one to an error term of $N^{-2.01} \cdot N^{2.01} \cdot N^{3/2} = N^{3/2}$, which is too large compared to the main term.
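The square-root heuristic for $\hat\mu$ can be probed numerically. The Python sketch below assumes the convention $\hat\mu(\xi) = \sum_{N \le n \le 2N} e^{-i\xi\log n} = \sum_{N \le n \le 2N} n^{-i\xi}$ (the sign convention is our assumption and does not affect absolute values) and compares $|\hat\mu(0)| = N+1$ with typical values at random frequencies in $[10N, N^2]$. This is an experiment, not a proof of anything.

```python
import cmath
import math
import random


def mu_hat(xi, N):
    """sum_{N <= n <= 2N} exp(-i * xi * log n), i.e. sum n^(-i xi): the Fourier
    transform of counting measure on {log n : N <= n <= 2N} (sign convention assumed)."""
    return sum(cmath.exp(-1j * xi * math.log(n)) for n in range(N, 2 * N + 1))


N = 2000
rng = random.Random(0)
# Sample frequencies in the range N << |xi| << N^2.01 where no coherence is expected.
samples = [abs(mu_hat(rng.uniform(10 * N, N ** 2), N)) for _ in range(20)]
print("|mu_hat(0)| =", abs(mu_hat(0.0, N)), "(= N + 1)")
print("mean |mu_hat(xi)| at high frequency:", sum(samples) / len(samples))
print("sqrt(N) =", math.sqrt(N))
```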

The situation does not seem to improve with various tweaking of parameters, though maybe I’m missing something.

Comment by Terence Tao — August 10, 2009 @ 12:19 am

[…] discussion threads polymath4, devoted to finding deterministically large prime numbers, is on its way on the polymath […]

Pingback by Polymath4 – Finding Primes Deterministically – is On Its Way « Combinatorics and more — August 9, 2009 @ 6:58 am

Google gives it secondhand that there’s no known unconditional, deterministic polynomial-time algorithm for squarefreeness (although the cited paper is 15 years old.)

My hunch is that testing squarefreeness is hard, although of course it’s trivial with a factoring oracle, so it’s unlikely to be NP-complete or anything. If you try to get much more information than whether n is squarefree, by the way (for instance, if you want to find the exponents of the primes that divide n), you start to get reductions from problems that are almost certainly intractable.

However, I’m also not convinced that a polynomial-time, or even random polynomial-time, reduction from factoring to squarefreeness exists, although the following heuristic argument hints at what such a reduction would probably look like. Since the squarefree numbers have positive density, testing a number of any size for squarefreeness essentially provides one bit of information; however, writing down the prime factorization of a k-digit integer requires O(k) bits in general, so a reduction would probably have to make O(k) calls to SQUAREFREE.

Squarefreeness also seems to have some connections to the Euclidean algorithm, which essentially derives from the fact that squarefree numbers are characterized by the property that in any factorization n = a*b, a and b are coprime. (My first stab at trying to reduce factoring to squarefreeness was along the following lines: if n is squarefree, then pick a number N >> n that’s also squarefree [this can be done randomly w.h.p.], and test N*n for squarefreeness to find common factors. But of course the last step can be done via the Euclidean algorithm just as well.) It’d be interesting to see if we can make those connections more rigorous.
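The observation about $N \cdot n$ can be checked directly: for squarefree $n$ and $N$, the product $Nn$ is squarefree exactly when $\gcd(N, n) = 1$, which is why the Euclidean algorithm already does that step. A small Python sketch, with trial division standing in for the factoring oracle:

```python
from math import gcd


def is_squarefree(m):
    """True if no square d^2 > 1 divides m (trial division; m >= 1)."""
    d = 2
    while d * d <= m:
        if m % (d * d) == 0:
            return False
        d += 1
    return True


# For squarefree n and N, n*N is squarefree exactly when gcd(n, N) == 1,
# so testing N*n for squarefreeness carries the same information as a gcd.
squarefrees = [m for m in range(1, 300) if is_squarefree(m)]
for n in squarefrees[:50]:
    for N in squarefrees[:50]:
        assert is_squarefree(n * N) == (gcd(n, N) == 1)
print("checked", 50 * 50, "pairs")
```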

Comment by Harrison — August 9, 2009 @ 8:46 am

Warning: This post is crazy long, way longer than I expected, as I had a few realizations that led to more realizations as I wrote it. Apologies.

Also on the squarefree front, I’d like to toss out another toy problem, which is related (though not really comparable) to the initial problem of whether we can find consecutive squarefree integers.

Problem 5a, Boring Preliminary Version. Show that there exist arbitrarily long arithmetic progressions of squarefree integers.

What’s that, you say? The squarefree integers have positive density — really big positive density! — and so by Szemeredi’s theorem, there are arbitrarily long APs? Well, yeah, but that’s boring. The meta-question we’re really after is how we can take advantage of the structure of the squarefree numbers, and Szemeredi’s theorem throws away all of that structure. So let’s tie one hand behind our collective back:

Problem 5a (Open). Without using Szemeredi’s theorem or some moral equivalent, show that there exist arbitrarily long APs of squarefree integers.

This is much more like what we really want. It’s worth noting that van der Waerden’s theorem isn’t sufficient to solve 5a, since we can embed $\mathbb{N}$ with its additive structure into the squareful numbers (consider the sequence 4, 8, 12, 16, …). It’s also probably useful to note that the squarefree numbers are a good example of a set which is neither fully pseudorandom nor highly structured; for instance, there are no quadruples of consecutive squarefree numbers (since one of them would be divisible by 4), which isn’t true for random sets of positive density; but neither is there an infinite AP, which is the easiest structure to take advantage of. On the pseudorandom hand, the distribution of squarefree numbers is what we’d expect from a random sequence of density $6/\pi^2$, with the error term bounded by $O(\sqrt{x})$. On the structured hand, assuming the Riemann hypothesis, we can cut the exponent in the error term to just under 1/3.
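For a quick numerical look at the density $6/\pi^2$ and the size of the error term, here is a Python sketch (illustrative only) that counts squarefree integers up to $x$ by sieving out multiples of $d^2$ and prints the error normalised by $\sqrt{x}$.

```python
import math


def squarefree_count(x):
    """Q(x) = #{1 <= m <= x : m squarefree}, by sieving out multiples of d^2."""
    flags = bytearray([1]) * (x + 1)
    flags[0] = 0
    d = 2
    while d * d <= x:
        flags[d * d :: d * d] = bytearray(len(range(d * d, x + 1, d * d)))
        d += 1
    return sum(flags)


for x in (10 ** 3, 10 ** 4, 10 ** 5, 10 ** 6):
    q = squarefree_count(x)
    error = q - 6 * x / math.pi ** 2
    print(x, q, round(error, 2), round(error / math.sqrt(x), 4))
```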

I’d also like to mention two variations of this problem, the first of which is easier and the last of which is probably harder.

Problem 5a, Variation 1 (Easy). Show (without using Roth’s theorem or equivalent) that there are infinitely many triples of squarefree numbers in arithmetic progression; equivalently, show that there are infinitely many solutions in squarefree numbers to x + y = 2z.

Note that there aren’t quite enough squarefrees for a union-bound proof that there are infinitely many consecutive squarefree triples; it’s probably true that there are infinitely many, and this might not even be that hard to prove, but I can find neither a proof that there are infinitely many squarefree triples nor any indication of the problem’s status.

However, the problem as stated above has an easy solution which I just thought of. Note that 3, 5, 7 is a 3-term AP of squarefree numbers. Now let p > 7 be prime; then 3p, 5p, 7p is another squarefree AP. We can turn any k-term AP of squarefrees into an infinite family of APs in the same way. Unfortunately this has no direct bearing on the initial toy problem (to find consecutive squarefree numbers), since the symmetry we’re exploiting here doesn’t preserve consecutiveness.

Problem 5a, Variation 2 (Easy). Explicitly exhibit a polynomial-time computable family of 3-term APs of squarefree numbers. (i.e., given an integer n, construct in polynomial time a sequence x, y, z of squarefree numbers with n < x < y < z and x + z = 2y.)

Actually, essentially the above construction works here, too — just replace p by 2*11*…*k, where k is a prime about log n. So I'll introduce a new problem to try to get rid of the freedom to multiply through by a large enough prime:
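The construction for Variations 1 and 2 is easy to make explicit. A Python sketch (the function name is hypothetical): multiply the AP 3, 5, 7 by $P = 2 \cdot 11 \cdot 13 \cdots$, using just enough primes other than 3, 5, 7 that $P > n$. Since $P$ is a product of distinct primes coprime to $105$, the terms $3P, 5P, 7P$ are squarefree, and since the product of the primes up to $y$ is $e^{(1+o(1))y}$, only primes up to about $\log n$ are needed.

```python
def is_prime(m):
    """Trial-division primality test (adequate for the small primes used here)."""
    if m < 2:
        return False
    d = 2
    while d * d <= m:
        if m % d == 0:
            return False
        d += 1
    return True


def is_squarefree(m):
    """True if no square d^2 > 1 divides m (trial division)."""
    d = 2
    while d * d <= m:
        if m % (d * d) == 0:
            return False
        d += 1
    return True


def squarefree_3ap_above(n):
    """Return squarefree x < y < z with x + z == 2*y and n < x.

    Scale the AP 3, 5, 7 by P = 2 * 11 * 13 * 17 * ..., multiplying in primes
    other than 3, 5, 7 until P > n.  P is squarefree and coprime to 105,
    so 3P, 5P, 7P are squarefree.
    """
    P, q = 2, 11
    while P <= n:
        if is_prime(q):
            P *= q
        q += 1
    return 3 * P, 5 * P, 7 * P


print(squarefree_3ap_above(10 ** 12))
```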

Problem 5b, Variations 0-2 (Open). Do Problem 5a and its variations hold with the additional constraint that the squarefree integers in the AP are mutually relatively prime?

These questions may be closest to the original toy problem 5; I could talk about them some, but this post is too long already, so I won’t.

Comment by Harrison — August 9, 2009 @ 6:25 pm

Well, one can use the W-trick to boost the density of square-free numbers to as close to 1 as desired, which should solve Problem 5a. More precisely, if W is the product of all the primes less than w, then the density of numbers n such that Wn+1 is square-free is asymptotically $\prod_{p \geq w} (1 - 1/p^2)$, which converges to 1 as $w \to \infty$. To get progressions of length 100, one just has to take w large enough that the density of n with Wn+1 square-free is over 99%, in which case one gets progressions from the union bound.
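The density formula can be compared with a direct count. A Python sketch (names hypothetical): for each prime $p \ge w$ the bad $n$ with $p^2 \mid Wn+1$ form a single residue class modulo $p^2$, so they can be sieved out, while the prediction uses $\prod_{p \ge w}(1 - 1/p^2) = (6/\pi^2)/\prod_{p < w}(1 - 1/p^2)$. It needs Python 3.8+ for `pow(W, -1, q)` and `math.prod`.

```python
import math


def primes_below(w):
    """Primes p < w by trial division."""
    return [p for p in range(2, w) if all(p % d for d in range(2, int(p ** 0.5) + 1))]


def w_trick_density(w, M):
    """Fraction of n in [1, M] with W*n + 1 squarefree, where W = prod_{p < w} p."""
    W = math.prod(primes_below(w))
    limit = W * M + 1
    good = bytearray([1]) * (M + 1)  # good[n] for n = 0..M; n = 0 is ignored
    p = max(w, 2)
    while p * p <= limit:
        if all(p % d for d in range(2, int(p ** 0.5) + 1)):  # p is prime, p >= w
            q = p * p
            n0 = (-pow(W, -1, q)) % q  # the unique class with q | W*n + 1
            good[n0::q] = bytearray(len(range(n0, M + 1, q)))
        p += 1
    return sum(good[1:]) / M


def predicted_density(w):
    """prod_{p >= w} (1 - 1/p^2), computed as (6/pi^2) / prod_{p < w} (1 - 1/p^2)."""
    return (6 / math.pi ** 2) / math.prod(1 - 1 / p ** 2 for p in primes_below(w))


for w in (3, 5, 11, 17):
    print(w, round(w_trick_density(w, 200_000), 4), round(predicted_density(w), 4))
```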

Getting an explicit, mutually coprime, progression seems harder though.

Comment by Terence Tao — August 9, 2009 @ 8:51 pm

The solution to Problem 5a with pairwise coprime terms is an immediate consequence of results of L. Mirsky on patterns of squarefree integers. See

L. Mirsky, Arithmetical pattern problems relating to divisibility by r-th powers, Proc. London Math. Soc. (2), 50 (1949), 497-508.

L. Mirsky, Note on an asymptotic formula connected with r-free integers, Quart. J. Math., Oxford Ser., 18 (1947), 178-182.

Mirsky identifies those tuples $k_1,\dots,k_s$ of integers for which $k_1 + n,\ldots,k_s + n$ are simultaneously squarefree (or r-free) for infinitely many $n$ (briefly, the obvious necessary condition is actually sufficient), and gives an asymptotic estimate for the number of such $n \leq x$. His arguments are elementary.

To produce infinitely many arithmetic progressions of $s$ terms consisting of pairwise coprime squarefrees using the result of Mirsky, let $a$ be the square of the product of the primes $p < s$, and set $k_j = 1 + ja$ for $0 \leq j \leq s-1$. Mirsky’s condition requires that, for every prime $p$, the $k_j$ do not cover all residue classes modulo $p^2$. For $p > \sqrt{s}$ this is clear, since there aren’t enough of them, while they are all congruent to $1$ modulo $p^2$ for any prime $p \leq \sqrt{s} < s$ by the definition of $a$. Furthermore they are pairwise coprime, for if some prime $q$ divides two of them, it divides their difference, which is of the form $(n - m)a$ with $0 \leq m < n \leq s - 1$. Then $q$ is a prime less than $s$ by the definition of $a$, but this is impossible since all the $k_j = 1 + ja$ are congruent to $1$ modulo such primes. Now it follows that $1 + n, 1 + a + n, 1 + 2a + n, \dots, 1 + (s-1)a + n$ are coprime and simultaneously squarefree infinitely often by Mirsky’s results.
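The construction can be checked on small cases. The Python sketch below (function names hypothetical) builds $a$ and $k_j = 1 + ja$, checks the local condition that the $k_j$ miss some residue class modulo $p^2$, and then searches for small shifts $n$ for which the shifted terms are squarefree and pairwise coprime; note that the search imposes pairwise coprimality of the shifted terms explicitly rather than deducing it.

```python
from itertools import combinations
from math import gcd, prod


def primes_below(s):
    """Primes p < s by trial division."""
    return [p for p in range(2, s) if all(p % d for d in range(2, int(p ** 0.5) + 1))]


def is_squarefree(m):
    """True if no square d^2 > 1 divides m (trial division)."""
    d = 2
    while d * d <= m:
        if m % (d * d) == 0:
            return False
        d += 1
    return True


def mirsky_progression(s):
    """a = (product of the primes p < s)^2 and the tuple k_j = 1 + j*a, 0 <= j <= s - 1."""
    a = prod(primes_below(s)) ** 2
    return a, [1 + j * a for j in range(s)]


def misses_a_class_mod_p2(ks, p):
    """Mirsky's local condition at p: the k_j do not cover every residue class mod p^2."""
    return len({k % (p * p) for k in ks}) < p * p


def good_shifts(s, how_many):
    """The first few n >= 0 for which all k_j + n are squarefree and pairwise coprime."""
    _, ks = mirsky_progression(s)
    found, n = [], 0
    while len(found) < how_many:
        terms = [k + n for k in ks]
        if all(is_squarefree(t) for t in terms) and all(
            gcd(x, y) == 1 for x, y in combinations(terms, 2)
        ):
            found.append(n)
        n += 1
    return found


print(mirsky_progression(4), good_shifts(4, 5))
```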

Comment by Anon — August 10, 2009 @ 8:20 pm

The existence of arbitrarily long arithmetic progressions of pairwise coprime squarefrees is an immediate consequence of results of L. Mirsky. See

L. Mirsky, Arithmetical pattern problems relating to divisibility by $r$th powers, Proc. London Math. Soc. (2), 50 (1949), 497-508.

L. Mirsky, Note on an asymptotic formula connected with $r$-free integers, Quart. J. Math., Oxford Ser., 18 (1947), 178-182.

Comment by Anon — August 10, 2009 @ 9:04 pm

According to Granville (“ABC Allows Us to Count Squarefrees”, p. 992), Hooley proved unconditionally that there exist infinitely many consecutive squarefree triples. The point is to find squarefree values of the polynomial (n+1)(n+2)(n+3).

In fact the result of Hooley is more general and deals with squarefree values of f(n), where f is a degree 3 polynomial.

There is also a density statement. But I could not find a reference for a bound on the error term.

You can refer to the following articles for more information:

A. Granville, “ABC Allows Us to Count Squarefrees”.

F. Pappalardi, “A survey on k-freeness”.

Comment by François Brunault — August 11, 2009 @ 6:07 pm

The integers 1, 2, 3 are squarefree, so by Mirsky’s result from 1947, there are infinitely many squarefree triples n+1, n+2, n+3. But I reckon that this case must have been known before Mirsky. The error bound of Mirsky is $O(x^{2/3 + \epsilon})$. Possibly this can be improved, since Heath-Brown obtained a better error bound for pairs n, n+1.
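By the same local reasoning, the expected density of $n$ with $n+1, n+2, n+3$ all squarefree is $\prod_p (1 - 3/p^2)$, since three consecutive integers occupy three distinct classes modulo each $p^2 \ge 4$. A Python sketch (illustrative; the product is truncated at $p \le 10^5$) comparing this with a direct count:

```python
import math


def squarefree_flags(x):
    """flags[m] == 1 iff m is squarefree, for 0 <= m <= x (flags[0] = 0)."""
    flags = bytearray([1]) * (x + 1)
    flags[0] = 0
    d = 2
    while d * d <= x:
        flags[d * d :: d * d] = bytearray(len(range(d * d, x + 1, d * d)))
        d += 1
    return flags


def triple_count(x):
    """#{0 <= n < x : n+1, n+2, n+3 all squarefree}."""
    f = squarefree_flags(x + 3)
    return sum(1 for n in range(x) if f[n + 1] and f[n + 2] and f[n + 3])


def triple_constant(prime_limit=10 ** 5):
    """Truncation of prod_p (1 - 3/p^2) over primes p <= prime_limit."""
    sieve = bytearray([1]) * (prime_limit + 1)
    sieve[0] = sieve[1] = 0
    for d in range(2, int(prime_limit ** 0.5) + 1):
        if sieve[d]:
            sieve[d * d :: d] = bytearray(len(range(d * d, prime_limit + 1, d)))
    return math.prod(1 - 3 / p ** 2 for p in range(2, prime_limit + 1) if sieve[p])


x = 10 ** 5
print(triple_count(x) / x, triple_constant())
```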

Comment by Anon — August 11, 2009 @ 8:30 pm

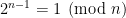

6. Regarding Strategy 1, I’d just like to mention that there are some candidates on Sloane’s encyclopedia for sequences which contain only primes. Of course none of them has yet been proved to contain only primes, but maybe one of them is actually tractable by (or may inspire) some knowledgeable readers here.

A first (quite dense) sequence is A065296: prove that if there is only one solution in $[0,n-1]$ to  (namely  ), then $n$ is prime.

Another (quite sparse) one is A092571: explain which numbers of the form $60R_n + 1$ are prime (where $R_n = (10^n-1)/9$ is the n-th repunit), the actual sequence being 61, 661, 6661, 6666666661, 666666666666666661, 666666666666666666661, 6666666666666666666661, …
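The members of the second sequence are easy to generate and test. In the Python sketch below, `sixes_then_one(n)` (a hypothetical helper) returns $60R_n + 1$, i.e. $n$ sixes followed by a 1, and primality is checked with Miller–Rabin using the first twelve primes as bases, which is known to be deterministic for inputs below about $3 \times 10^{23}$, comfortably covering $n \le 21$.

```python
def is_probable_prime(m):
    """Miller-Rabin with the first twelve prime bases (deterministic for m below about 3e23)."""
    if m < 2:
        return False
    bases = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)
    for p in bases:
        if m % p == 0:
            return m == p
    d, r = m - 1, 0
    while d % 2 == 0:
        d //= 2
        r += 1
    for a in bases:
        x = pow(a, d, m)
        if x == 1 or x == m - 1:
            continue
        for _ in range(r - 1):
            x = x * x % m
            if x == m - 1:
                break
        else:
            return False  # a is a witness that m is composite
    return True


def sixes_then_one(n):
    """60 * R_n + 1, i.e. n sixes followed by a 1, where R_n = (10^n - 1) // 9."""
    return 60 * ((10 ** n - 1) // 9) + 1


print([n for n in range(1, 22) if is_probable_prime(sixes_then_one(n))])
```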

Comment by Thomas Sauvaget — August 9, 2009 @ 8:50 am

Responding to Terry’s comment 3, I’d like to mention yet another toy problem. This one is designed to be an easier goal for the additive-combinatorial approach, but one that might conceivably help with the harder one. Recall that that approach is to take K to be the set of logarithms of all primes in some interval, and to try to prove that the iterated sumsets of K must become very dense. It seems to me that a good way of getting a bit of intuition about this problem would be to look instead at the set L of logarithms of all integers in some interval (whether prime or composite) and think about the same question for L.

Suppose that one could prove that the iterated sumsets of L eventually became very dense. Then one would have shown that in every small interval $[n,n+m]$ there must be a number with a factor (but not necessarily a prime factor) in an interval $[u,u+v]$. Here we are hoping for $m$ to be very small, and for $u$ not to be too small compared with $n$ (something like  seems about the best one can hope for, but initially one might settle for less than this). But $v$ isn’t too tiny compared with $u$: it might be  , or  , say.

I’m pretty sure it’s not trivial that there must be a number between $n$ and $n+m$ that has a factor in some given interval $[u,v]$ such as the above, but I haven’t checked. If it turns out to be obvious, then this is not after all an interesting problem. (As it is, a positive solution wouldn’t be all that interesting, except as an initial guide to how to prove the result when we replace L by K.)

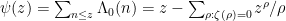

What I quite like about this problem is that the Fourier transform of the set L is a little piece of the Riemann zeta function. Indeed, the value at $\alpha$ of the transform of the measure that sticks an atom of weight 1 at every point of L is $\sum_n n^{-i\alpha}$, the sum being over the integers whose logarithms make up L. This suggests to me that some of the techniques that have been used for analysing Dirichlet series could be used here.

But there is also the possibility of using insights connected with sum-product problems. For instance, $\log$ is a concave function and the interval $[u,u+v]$ has very good additive properties. It follows from results of Elekes (and others? my memory is failing me here) that $\log[u,u+v]$ can’t have good additive properties. By that I mean that the sumset $\log[u,u+v] + \log[u,u+v]$ must be large. Something like this probably applies to  as well, but after a few more sums, since, at least if $v$ is linear in $u$, the techniques that go into Vinogradov’s three-primes theorem will tell us that the set of primes in $[u,u+v]$ will, after a bounded number of sums, become pretty evenly distributed in an additively structured set.
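A toy version of the sum-product intuition: for an interval $A = [u, u+v]$ of integers the sumset $A+A$ has only $2v+1$ elements, while $\log A + \log A = \log(A \cdot A)$ has as many elements as there are distinct products $ab$. The Python sketch below (illustrative only) computes both sizes next to the maximum possible $(v+1)(v+2)/2$; one typically sees the product set far larger than $2v+1$, which is the sense in which $\log A$ has poor additive structure.

```python
def sum_and_product_set_sizes(u, v):
    """|A + A| and |A * A| for the interval A = [u, u+v] of integers.

    Since log(a) + log(b) = log(a*b), |A * A| is also the number of distinct
    elements of the sumset log(A) + log(A).
    """
    A = range(u, u + v + 1)
    sums = {a + b for a in A for b in A}
    products = {a * b for a in A for b in A}
    return len(sums), len(products)


for u, v in [(100, 10), (1000, 100), (10 ** 5, 300)]:
    s, p = sum_and_product_set_sizes(u, v)
    print(u, v, s, p, (v + 1) * (v + 2) // 2)
```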

The remarks in the above paragraph aren’t directly relevant, but they are meant to be suggestive: if you take an arithmetic progression and take logs of everything, then you should have something that’s so unlike an arithmetic progression that when you take iterated sumsets it becomes far less atomic. It might even be that such a result follows from something much more general, where $\log$ is replaced by an arbitrary convex function. (Actually, not quite arbitrary, but perhaps something that has a gradient that decreases reasonably steadily.)

Comment by gowers — August 9, 2009 @ 2:29 pm

This is not quite in the spirit of Tim’s approach, but I can get a non-trivial result on L+L using the Weyl bound for the Gauss circle problem, or more precisely the variant of this circle problem for the hyperbola (essentially the Dirichlet divisor problem).

More precisely, let’s look at the product set $[S,2S] \cdot [S,2S] \subset [S^2, 4S^2]$ in the middle of the interval $[S^2,4S^2]$, say near $2S^2$ (this is like considering L+L where L are the log-integers restricted to $[\log S, \log S + \log 2]$). It’s trivial that any interval of length S near $2S^2$ will meet $[S,2S] \cdot [S,2S]$. I claim though that the same is true for intervals of size about $S^{2/3}$. The reason is that the number of products of the form $[S,2S] \cdot [S,2S]$ less than a given number x is basically the number of lattice points in the square $[S,2S] \times [S,2S]$ intersected with the hyperbolic region $\{(a,b) : ab \leq x\}$. The Weyl technology for the Gauss circle problem (Poisson summation, etc.) gives an asymptotic for this with an error of $O(S^{2/3})$, which implies in particular that this count must increase whenever x increases by more than a suitable multiple of $S^{2/3}$. So every interval of this length must contain at least one number which factors as a product of two numbers in [S,2S].
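The claim is easy to probe numerically. The Python sketch below (illustrative; names hypothetical) lists the elements of $[S,2S]\cdot[S,2S]$ in the window $[2S^2 - S, 2S^2 + S]$ and reports the largest gap between consecutive elements, alongside $S^{2/3}$ for comparison. The trivial bound is a gap of at most $S$, coming from the multiples of $S$ and of $S+1$.

```python
def products_near(S, lo, hi):
    """Sorted elements of [S,2S]*[S,2S] lying in [lo, hi]."""
    found = set()
    for a in range(S, 2 * S + 1):
        b_min = max(a, -(-lo // a))  # ceil(lo / a); b >= a avoids duplicate pairs
        b_max = min(2 * S, hi // a)
        for b in range(b_min, b_max + 1):
            found.add(a * b)
    return sorted(found)


def max_gap_near_2S2(S):
    """Largest gap between consecutive products in the window [2S^2 - S, 2S^2 + S]."""
    x = 2 * S * S
    vals = products_near(S, x - S, x + S)
    return max(b - a for a, b in zip(vals, vals[1:]))


for S in (10 ** 3, 10 ** 4, 10 ** 5):
    print(S, max_gap_near_2S2(S), round(S ** (2 / 3)))
```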

Presumably some of the various small improvements to the Weyl bound for the circle problem over the years can be transferred to the hyperbola, allowing one to reduce the 2/3 exponent slightly.

Unfortunately the asymptotics become much, much worse if we restrict the numbers in [S,2S] to be prime, so I doubt this gives anything particularly non-trivial for the original primality problem. Also the error term in these lattice point problems is never going to be better than $S^{1/2}$, so we once again butt our heads against this $S^{1/2}$ barrier.

Comment by Terence Tao — August 9, 2009 @ 7:20 pm

I’ve just had another thought, and in the Polymath spirit I’m going to express it before thinking at all seriously about whether it has a chance of being true. (Or rather, later in this comment I’ll think about that in real time, so to speak, and quite likely not manage to resolve the problem.)

The question is this. I’ll repeat the set-up from my previous comment (number 7). Let  be an integer and let  be a power of  . Let $u$ be an integer that’s quite a bit smaller than $n$, something like $n^{1/\log\log n}$ (a function chosen because the number of prime factors of a typical integer is about $\log\log n$, so one might expect their typical size to be about $n^{1/\log\log n}$). One thing we’d very much like to be able to do is show that some number in the interval $[n,n+m]$ has a prime factor of size roughly $u$. Let’s take that to mean that it has a prime factor between $u$ and $u+v$, where  , though I’m open to other choices for $v$. In the previous comment I suggested going for any factor in that interval, which would be a strictly easier problem. Now it occurs to me that we could be daring and go for a strictly harder problem: perhaps the conclusion is true for an arbitrary subset of $[u,u+v]$ of density  . That is, if $B\subset [u,u+v]$ is a set of size at least  , then perhaps there is guaranteed to be an integer in $[n,n+m]$ with a factor in $B$.

A first check would obviously be to take $B$ to be a subinterval of $[u,u+v]$ of that size. Let  . We’ll be in trouble if it is not the case that  , since then the sets of powers of elements of $B$ will leave huge gaps. So we find ourselves wanting to know whether  could be comparable to  . But  , so  is comparable to  , which means we have no chance here.

The obvious next question is how big  would have to be for us to have a chance of proving something so general. The above analysis tells us that we need  to be at least  for a power  that’s around  . But that’s actually pretty good news, since the number of digits of  is about  .

So here's a first refinement of the insane idea above: perhaps if you pick an arbitrary subset B of $[u,v]$ of density at least  , and  is significantly bigger than  , then some number in the interval $[n,n+(\log n)^{100}]$ must have a factor in B.

What I like about this (unless it is trivially false) is that it is very general and therefore could be easier to tackle than something about the primes. It suggests a strategy, which is first to tackle the case where $B=[u,v]$ and then to look at arbitrary subsets of reasonable density.

Comment by gowers — August 9, 2009 @ 4:41 pm

To make this conjecture sound more plausible, let me give a heuristic argument for it.

1. The interval $J=[u,v]$ is a highly “additive set” and therefore should spread out very evenly from a multiplicative point of view. (Equivalently,  is very far from additive.) So the conjecture seems pretty likely in the case  . (However, serious thought needs to go into just how dense we think we can prove that iterated sumset will be. One possibility here might be to use the fact that the derivative of  decreases very smoothly to prove that  has lots of parts that resemble arithmetic progressions of all sorts of different common differences. So the sumset should be beautifully spread out. I wonder if this is already a point where somebody ought to go off, do some private calculations, and report back. I could give it a go, but as ever I would like to ask others what they think first.)

2. If we take a subset of  of density  , then we would expect any gaps in  to be filled once  got to around  . For instance, if you take a subset of  of density  , then after about  sums it starts to be pretty evenly spread around the whole of  . Obviously a subset of  isn’t quite so simple, but something similar ought to happen “near the middle”.
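Point 1 can be probed numerically on toy sizes. A product b_1⋯b_k of elements of B that lands in [n, n+m] certainly gives an integer there with a factor in B, and this is guaranteed for every n in a range if the k-fold sumset of log B has no gap longer than about log(1 + m/n) ≈ m/n there. The sketch below (hypothetical helper names; B = J an interval of small integers) computes that sumset by brute force and reports its largest gap inside a window. It says nothing about the asymptotic regime of the conjecture.

```python
# Hypothetical helpers; a toy-scale experiment, not code from the thread.
import math
from itertools import combinations_with_replacement


def log_product_set(lo: int, hi: int, k: int) -> list[float]:
    """Sorted distinct values of log(x_1 * ... * x_k) with each x_i an integer in [lo, hi].

    This is the k-fold sumset of log J for J = [lo, hi]; the number of products is about
    |J|**k / k!, so it is only meant for small J and k.
    """
    products = {math.prod(c) for c in combinations_with_replacement(range(lo, hi + 1), k)}
    return sorted(math.log(p) for p in products)


def max_gap(points: list[float], start: float, end: float) -> float:
    """Largest gap between consecutive points lying in the window [start, end]."""
    window = [x for x in points if start <= x <= end]
    return max((b - a for a, b in zip(window, window[1:])), default=math.inf)


# Compare the largest gap near the middle of the range for k = 2 and k = 3.
for k in (2, 3):
    pts = log_product_set(50, 100, k)
    mid = (pts[0] + pts[-1]) / 2
    print(k, max_gap(pts, mid - 0.1, mid + 0.1))
```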

Comment by gowers — August 9, 2009 @ 5:34 pm

My worry is that if one discretises at the correct scale, J is actually quite sparse and so it will take a long time to achieve the desired mixing.

More precisely, in the logarithmic scale, the interval [n,n+m] has length about $m/n$. So one would need to discretise at roughly the scale 1/n in order to make things work. Meanwhile, the interval [u,v] has length about 1, so when one discretises, it is sort of like an arithmetic progression of length n. But J only fills up about u of this progression. u is like  , so it’s rather sparse. In particular, it’s smaller than any power of n, which tends to be a warning sign for Fourier-analytic methods – Plancherel’s theorem, in particular, permits quite a large number of very large Fourier coefficients, which is not promising. Admittedly, one is taking a large iterated sumset of J, but my initial guess is that the parameters are not favorable. It’s worth doing a back-of-the-envelope calculation to confirm this, of course. (Note also that we don’t necessarily have to shoot for m as small as polylog(n) to declare progress; m could be as large as  (say) and we would still have something beating the trivial bound.) But even with this relaxation of the problem, J looks dangerously sparse to me…
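The comparison of scales can be checked with a little arithmetic. A sketch under hypothetical parameters (the function name and sample numbers are illustrative only; v is taken close to e·u so that [u, v] has logarithmic length about 1, as in the comment): it returns the logarithmic length of [n, n+m], the number of points of spacing 1/n needed to cover log[u, v], and the fraction of those points occupied by log J, which is of order u/n.

```python
# Hypothetical helper and toy parameters; a back-of-envelope sketch only.
import math


def log_scale_parameters(n: int, m: int, u: int, v: int) -> dict[str, float]:
    """Back-of-envelope quantities for discretising log J, J = [u, v], at scale 1/n."""
    target_length = math.log1p(m / n)    # length of log[n, n + m]; about m/n when m << n
    grid_points = math.log(v / u) * n    # points of spacing 1/n needed to cover log[u, v]
    occupied = float(v - u + 1)          # integers in J, i.e. points of log J
    return {
        "target_length": target_length,
        "grid_points": grid_points,
        "occupied": occupied,
        "density": occupied / grid_points,  # fraction of that progression filled by log J
    }


# v is about e*u, so log[u, v] has length about 1.
params = log_scale_parameters(n=10**12, m=10**3, u=1000, v=2718)
print(params)
```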

Comment by Terence Tao — August 10, 2009 @ 12:08 am

K. Ford has a big paper in Annals (available at http://front.math.ucdavis.edu/0401.5223 ) which gives information about the asymptotic behavior of the number of integers up to $x$ with at least one divisor in an interval $[y,z]$, more or less for arbitrary  . He does state a result (Th. 2, page 6 of the arXiv PDF) with  restricted to an interval $[x-\Delta,x]$, but $\Delta$ must be quite large (something like  in the setting of the previous comment); he does say that the range of $\Delta$ can be improved, but to get a power of log seems hard. However, the techniques he develops could be useful.
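In Ford's notation, H(x, y, z) counts the integers n ≤ x having a divisor d with y < d ≤ z; the short-interval question asks for the same count restricted to [x - Δ, x]. Both can be computed directly for small parameters by sieving over the divisors. A minimal sketch (hypothetical function name; it uses the closed interval [y, z] from the comment, which differs from Ford's half-open convention only at d = y):

```python
# Hypothetical helper; a brute-force sieve for small parameters only.
def count_with_divisor_in(lo: int, hi: int, y: int, z: int) -> int:
    """Count integers N in [lo, hi] having at least one divisor d with y <= d <= z.

    Marks every multiple of every d in [y, z] that falls in [lo, hi].
    Assumes 1 <= lo <= hi and y <= z.
    """
    marked = bytearray(hi - lo + 1)
    for d in range(max(y, 1), z + 1):
        first = -(-lo // d) * d          # least multiple of d that is >= lo
        for multiple in range(first, hi + 1, d):
            marked[multiple - lo] = 1
    return sum(marked)


x, y, z, delta = 10, 2, 3, 3
print(count_with_divisor_in(1, x, y, z))          # 7: 2, 3, 4, 6, 8, 9, 10
print(count_with_divisor_in(x - delta, x, y, z))  # 3: 8, 9, 10
```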

Comment by Emmanuel Kowalski — August 10, 2009 @ 1:29 am

In a similar spirit to the oracle example of “generic primes” which elude deterministic polynomial time algorithms (posted on the wiki), I think we have to be careful here too. I was thinking about the following example. Consider the interval [n,n+m] where n=2^k is a power of 2. This way, all the elements in this interval are “simple”, i.e. have Kolmogorov complexity at most log log n + log m. Now consider an interval U=[u,2u] where say u=n^(1/t). So in Tim’s scenario we had t about sqrt(log n). Take as the subset B of U the primes in U which have Kolmogorov complexity at least log(u)/(2 log log(u)) even relative to the set U. This is still a set with density at least 1/(2 log(u)). Suppose that an element v of B divided an element x in [n,n+m]. Then we could describe v given the set U by specifying x, and with log t more bits identify v in the list of at most t elements of U which divide x. Thus we must have log(u)/(2 log log(u)) <= log log n + log m + log t, or in other words roughly n <= (3mt log n)^t. This seems to rule out having t like sqrt(log n) and m polylogarithmic in n.
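The counting inequality above can be evaluated directly. A sketch under stated assumptions: logarithms are base 2 because Kolmogorov complexity is measured in bits, t = sqrt(log2 n) as in Tim's scenario, and m = (log2 n)^100 as a stand-in for "polylogarithmic"; the function names are hypothetical. When the left side exceeds the right side, no element of B can divide an element of [n, n+m].

```python
# Hypothetical helpers and parameter choices; a numerical sketch of the inequality only.
import math


def troy_inequality(log2_n: float, t: float, log2_m: float) -> tuple[float, float]:
    """Both sides of  log u / (2 log log u) <= log log n + log m + log t  (logs base 2).

    Here u = n**(1/t), so log2(u) = log2(n) / t.
    """
    log2_u = log2_n / t
    lhs = log2_u / (2 * math.log2(log2_u))  # lower bound on the complexity of each element of B
    rhs = math.log2(log2_n) + log2_m + math.log2(t)  # describe x in [n, n+m], plus log t to pick v
    return lhs, rhs


def contradiction_for(log2_n: float, m_exponent: float = 100.0) -> bool:
    """Scenario t = sqrt(log2 n), m = (log2 n)**m_exponent.

    True means the inequality fails, so no element of B can divide any integer in [n, n+m].
    """
    t = math.sqrt(log2_n)
    lhs, rhs = troy_inequality(log2_n, t, m_exponent * math.log2(log2_n))
    return lhs > rhs


for e in (30, 40, 60):
    print(e, contradiction_for(2.0 ** e))  # False at 2**30; True at 2**40 and 2**60
```

With these choices the left side overtakes the right side somewhere between log2 n = 2^30 and 2^40, consistent with the comment's conclusion that the conjecture must fail for large n.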

Comment by Troy — August 10, 2009 @ 10:56 pm

I found this reference to a paper on the discussion thread.

R. C. Baker, G. Harman and J. Pintz, The difference between consecutive primes, II, Proceedings of the London Mathematical Society 83 (2001), 532–562.

The authors sharpen a result of Baker and Harman (1995), showing that [x, x + x^{0.525}] contains prime numbers for large x.

It looks like using this we can find a prime in O((10^k)^{0.525}) = O(10^{0.525k}) time.
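A minimal sketch of the search this result justifies, assuming trial division as a stand-in for the primality oracle (the function names are hypothetical). Scanning upward from 10^(k-1) returns a prime with at least k digits; for large enough starting points the theorem bounds the number of oracle calls by about x^0.525, although the code itself does not certify that bound.

```python
# Hypothetical helpers; trial division plays the role of the O(1) primality oracle.
def is_prime(x: int) -> bool:
    """Trial division; a slow stand-in for the primality oracle, fine for small x."""
    if x < 2:
        return False
    if x % 2 == 0:
        return x == 2
    d = 3
    while d * d <= x:
        if x % d == 0:
            return False
        d += 2
    return True


def first_prime_from(start: int) -> tuple[int, int]:
    """Scan start, start + 1, ... and return (first prime found, number of oracle calls)."""
    calls, x = 0, start
    while True:
        calls += 1
        if is_prime(x):
            return x, calls
        x += 1


def prime_with_at_least_k_digits(k: int) -> tuple[int, int]:
    """Search upward from 10**(k - 1), the smallest k-digit number."""
    return first_prime_from(10 ** (k - 1))


print(prime_with_at_least_k_digits(3))  # (101, 2)
print(prime_with_at_least_k_digits(7))  # (1000003, 4)
```

Replacing trial division with a deterministic polynomial-time test such as AKS changes the cost per call but not the number of calls.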

Comment by kristalcantwell — August 9, 2009 @ 5:03 pm

Tim’s ideas on sumsets of logs got me thinking about a related but different approach to these sorts of “spacing problems”: say we want to show that no interval [n, n + log n] contains only (log n)^100-smooth numbers. If such strange intervals exist, then perhaps one can show that there are loads of distinct primes p in [(log n)^10, (log n)^100] that each divide some number in this interval (we would need to exclude the possibility that the interval contains numbers divisible by primes to high powers to do this). Let’s assume this is true. And let’s say we have something like Omega(log n/loglog n) of these primes dividing each number in our interval [n, n + log n], resulting in something like (log n)^{2-o(1)} primes in total dividing numbers in this interval.

Now, given such a collection of primes, say they are p_1, …, p_k, where perhaps k ~ (log n)^{2-o(1)} or something, it is reasonable to expect that the set of sums

S := {c_1/p_1 + … + c_k/p_k : c_i = 0, 1, or -1}

is fairly dense in some not-too-short interval centered about 0 — so dense that we would expect that if q is any prime at all in [2,(log n)^100], then we can approximate

1/q = c_1/p_1 + … + c_k/p_k + E,

to an error E satisfying |E| < 1/(n (log n)^100), say. If so, then multiplying through by n we would get

n/q = c_1 n/p_1 + … + c_k n/p_k + O( (log n)^{-100}).

But because each p_i divides a number in [n, n + log n], we would have that the RHS comes within something like O(k (log n)/min_i p_i) = O((log n)^{-7}) of an integer. So, looking at the LHS, we have that q divides some number in the interval [n - q (log n)^{-7}, n + q (log n)^{-7}]. But this can't be possible for every prime q in [(log n)^10, (log n)^100] by simple upper bounds on the number of prime divisors of numbers in short intervals.
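The approximation step can be tried with exact rational arithmetic, but only at toy sizes, since the brute force below enumerates all 3^k sign patterns and the k of the argument is far out of reach. The helper names are hypothetical. The second helper illustrates the rounding step: if p divides n + j with 0 ≤ j ≤ L, then n/p = (n + j)/p - j/p lies within L/p of an integer, and summing these errors over the p_i gives the O(·) bound used above.

```python
# Hypothetical helpers; an exhaustive toy version of the approximation step.
from fractions import Fraction
from itertools import product


def best_signed_sum(q: int, primes: list[int]) -> tuple[Fraction, tuple[int, ...]]:
    """Minimise |1/q - sum(c_i / p_i)| over all c_i in {-1, 0, 1} by exhaustive search.

    Returns (smallest error, a sign pattern c achieving it). Cost is 3**len(primes).
    """
    target = Fraction(1, q)
    best_err, best_c = None, ()
    for c in product((-1, 0, 1), repeat=len(primes)):
        s = sum((Fraction(ci, p) for ci, p in zip(c, primes)), Fraction(0))
        err = abs(target - s)
        if best_err is None or err < best_err:
            best_err, best_c = err, c
    return best_err, best_c


def distance_to_integer(x: Fraction) -> Fraction:
    """Distance from x to the nearest integer."""
    return abs(x - round(x))


err, c = best_signed_sum(7, [3, 5, 7])
print(err, c)                                 # 0 (0, 0, 1)
# 7 divides 105 = 100 + 5, so 100/7 is within 5/7 of an integer:
print(distance_to_integer(Fraction(100, 7)))  # 2/7
```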

Comment by Anonymous — August 9, 2009 @ 5:48 pm

This probably counts as cheating, but one can get a little bit of traction if one assumes the ABC conjecture.

Consider the interval $[n, n+\log n/2]$ where n is very smooth, say a power of 2, and suppose that all numbers were S-smooth. Assuming ABC, this shows that the radical (the product of all the prime factors) of any other element of $[n, n+\log n/2]$ is  for any fixed  . In particular, all such numbers contain  prime factors that are greater than  . But no two elements in $[n, n+\log n/2]$ can share a common prime factor greater than  , so we must have  prime factors less than S. But this is only compatible with the prime number theorem if  . Thus we must have an element of $[n, n+\log n/2]$ which has a prime factor  . So, using ABC, one can match the RH bound (which gives us a prime of size at least  after k tries). I don’t see how to use the ABC conjecture to get beyond the exponent 2, though.
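The ABC step uses triples a = n, b = j, c = n + j, with n = 2^K a power of 2 and 1 ≤ j ≤ log n/2 (take j odd so the three are coprime). Since rad(abc) ≤ 2j·rad(n + j), a bound of the form rad(abc) ≫_ε c^{1-ε} forces rad(n + j) to be nearly as large as n. The sketch below (hypothetical helper names; trial-division factoring, small inputs only) computes the radical and the usual "quality" log c / log rad(abc) of a triple.

```python
# Hypothetical helpers; trial-division factoring, so small inputs only.
import math


def radical(x: int) -> int:
    """Product of the distinct primes dividing x."""
    rad, d = 1, 2
    while d * d <= x:
        if x % d == 0:
            rad *= d
            while x % d == 0:
                x //= d
        d += 1
    return rad * x if x > 1 else rad


def abc_quality(a: int, b: int) -> float:
    """log c / log rad(abc) for the triple a + b = c; assumes gcd(a, b) = 1 and a*b*c > 1."""
    c = a + b
    return math.log(c) / math.log(radical(a * b * c))


print(radical(72))        # 6
print(abc_quality(1, 8))  # about 1.2263, since 1 + 8 = 9 and rad(72) = 6
# A triple of the kind used above: a = 2**K, b = j odd, c = 2**K + j.
print(abc_quality(2**20, 3))
```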

Comment by Terence Tao — August 9, 2009 @ 6:00 pm

I think perhaps the interval [(log n)^10, (log n)^100] in my comment can be replaced with something like [ (log n)^(2 + epsilon), (log n)^100], through limiting k to size a little larger than log n, say k ~ (log n)^(1 + eps/2) or something — i.e. we don’t use all the prime divisors of numbers in [n,n+log n]. Getting this interval somewhat below (log n)^2 (so that the method need not require heavy conjectures like ABC) might well require generalizing the problem somewhat. I will think about it…
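The bookkeeping behind this refinement is a single exponent count. Assuming, as in the earlier comment, that each n/p_i lies within (log n)/p_i of an integer, the accumulated rounding error compares as follows for the original and the refined parameters (k ≈ (log n)^2 stands in for (log n)^{2-o(1)}):

```latex
\[
  \sum_{i=1}^{k} \frac{\log n}{p_i} \;\le\; \frac{k \log n}{\min_i p_i}
  \;\approx\;
  \begin{cases}
    \dfrac{(\log n)^{2}\cdot \log n}{(\log n)^{10}} = (\log n)^{-7},
      & k \approx (\log n)^{2},\ p_i \ge (\log n)^{10},\\[2ex]
    \dfrac{(\log n)^{1+\varepsilon/2}\cdot \log n}{(\log n)^{2+\varepsilon}} = (\log n)^{-\varepsilon/2},
      & k \approx (\log n)^{1+\varepsilon/2},\ p_i \ge (\log n)^{2+\varepsilon}.
  \end{cases}
\]
```

In both cases the error tends to 0 as n grows.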