The previous research thread for the Polymath7 “Hot Spots Conjecture” project has once again become quite full, so it is again time to roll it over and summarise the progress so far.

Firstly, we can update the map of parameter space from the previous thread to incorporate all the recent progress (including some that has not quite yet been completed):

This map reflects the following new progress:

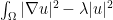

- We now have (or will soon have) a rigorous proof of the simplicity of the second Neumann eigenvalue for all non-equilateral acute triangles (this is the dotted region), thus finishing off the first part of the hot spots conjecture in all cases. The main idea here is to combine upper bounds on the second eigenvalue $\lambda_2$ (obtained by carefully choosing trial functions for the Rayleigh quotient) with lower bounds on the sum $\lambda_2 + \lambda_3$ of the second and third eigenvalues, obtained by using a variety of lower bounds coming from reference triangles such as the equilateral or isosceles right triangle. This writeup contains a treatment of those triangles close to the equilateral triangle, and it is expected that the other cases can be handled similarly.

- For super-equilateral triangles (the yellow edges) it is now known that the extreme points of the second eigenfunction occur at the vertices of the base, by cutting the triangle in half to obtain a mixed Dirichlet-Neumann eigenvalue problem, and then using the synchronous Brownian motion coupling method of Banuelos and Burdzy to show that certain monotonicity properties of solutions to the heat equation are preserved. This fact can also be established via a vector-valued maximum principle. Details are on the wiki.

- Using stability of eigenfunctions and eigenvalues with respect to small perturbations (at least when there is a spectral gap), one can extend the known results for right-angled and non-equilateral triangles to small perturbations of these triangles (the orange region). For instance, the stability results of Banuelos and Pang already give control of perturbed eigenfunctions in the uniform norm. For right-angled triangles and non-equilateral triangles, the extrema only occur at vertices; moreover, from the Bessel expansion and uniform C^2 bounds we know that for any perturbed eigenfunction the vertices will still be local extrema at least (with a uniform lower bound on the region in which they are extremisers). We conclude that the global extrema will still only occur at vertices for perturbations.

- Some variant of this argument should also work for perturbations of the equilateral triangle (the dark blue region). The idea here is that the second eigenfunction of a perturbed equilateral triangle should still be close (in, say, the uniform norm) to some second eigenfunction of the equilateral triangle. Understanding the behaviour of eigenfunctions of nearly equilateral triangles more precisely seems to be a useful short-term goal to pursue next in this project.
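The eigenvalue comparison in the first item of the list above can be spelled out as a short inequality chain. This is only a sketch: the names $U$ (an upper bound on $\lambda_2$ coming from a trial function) and $S$ (a lower bound on $\lambda_2 + \lambda_3$ coming from reference triangles) are labels introduced here for illustration, not notation taken from the writeup.

```latex
\[
\lambda_2 \le U, \qquad \lambda_2 + \lambda_3 \ge S, \qquad S > 2U
\;\Longrightarrow\;
\lambda_3 \;\ge\; S - \lambda_2 \;\ge\; S - U \;>\; U \;\ge\; \lambda_2 .
\]
```

So whenever the two bounds can be arranged to satisfy $S > 2U$, one gets $\lambda_3 > \lambda_2$, which is exactly simplicity of the second eigenvalue.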

But there is also some progress that is not easily representable on the above map. It appears that the nodal line of the second eigenfunction $u$ may play a key role. By using reflection arguments and known comparison inequalities between Dirichlet and Neumann eigenvalues, it was shown that the nodal line cannot hit the same edge twice, and thus must straddle two distinct edges (or a vertex and an opposing edge). (The argument is sketched on the wiki.) If we can show some convexity of the nodal line, this should imply that the vertex straddled by the nodal line is a global extremum by the coupled Brownian motion arguments, and the only extremum on this side of the nodal line, leaving only the other side of the nodal line (with two vertices rather than one) to consider.

We’re now also getting some numerical data on eigenvalues, eigenfunctions, and the spectral gap. The spectral gap looks reasonably large once one is away from the degenerate triangles and the equilateral triangle, which bodes well for an attempt to resolve the conjecture for acute angled triangles by rigorous numerics and perturbation theory. The eigenfunctions also look reassuringly monotone in various directions, which suggests perhaps some conjectures to make in this regard (e.g. are eigenfunctions always monotone along the direction parallel to the longest side?).

This isn’t a complete summary of the discussion thus far – other participants are encouraged to summarise anything else that happened that bears repeating here.


Thanks, this summary is very helpful!

I’ve been pondering two suggestions on the previous thread, which concerned some sort of perturbation argument and the heat kernels. This got me thinking about the Hankel function of the first kind, which is the fundamental solution of the Helmholtz equation with the outgoing wave condition at infinity.

At least from the numerical data, it seems the second Neumann eigenvalue varies smoothly with perturbations $\epsilon$ in angle.

At an eigenvalue of the interior Neumann Laplacian, we can think of the eigenfunction as solving the Helmholtz equation with zero Neumann data. One could presumably write this eigenfunction in terms of a layer potential using the fundamental solution of the Helmholtz equation with the corresponding wave number, or some clever combination of the Calderon operators. I think the regularity of such operators on Lipschitz domains is documented, e.g. in the books by Nedelec or Hsiao/Wendland or McLean. I don’t know if the behaviour of these integral operators as $\epsilon$ varies is also characterized, but it most likely is.

Using these integral operators, one can reformulate the boundary value problem in terms of the solution of an integral equation […], where […]. Suppose […]; then […] is the desired Neumann Laplace eigenfunction.

The boundary trace of this eigenfunction will be given in terms of this density, and we know this trace is at least continuous. Can one say something about the composition of the trace operator with […], as epsilon varies?

Is there a clever reformulation of the Hot Spot conjecture in terms of these layer densities?

Comment by Nilima Nigam — June 15, 2012 @ 10:52 pm |

Some numerically computed 2nd Neumann eigenfunctions are in this public directory. Titles on the graphs give two of the angles (scaled by pi).

http://www.math.sfu.ca/~nigam/polymath-figures/EigenFunctions/

Comment by Nilima Nigam — June 15, 2012 @ 11:22 pm |

These are nice. Is it correct that alpha corresponds to the lower left corner and beta corresponds to the lower right corner? And I notice the triangles vary in shape from picture to picture… are the triangles in the figure actually drawn such that they have the correct angles? If so that definitely gives the full picture!

Also, maybe these pictures can shed light on the conjecture “are eigenfunctions always monotone along the direction parallel to the longest side?” proposed in the summary. It is a bit hard for me to make out exactly, but I think in triangles of the type “one hot two cold corners” (well often there is only one globally coldest corner and the other is only locally coldest) the level curves of $u$ roughly form concentric circles at each of the vertices.

If this is the case I believe such triangles disprove the conjecture: if the conjecture were true then you could always head along or against the direction of the longest side to achieve ever hotter/colder values. I think this precludes three local extrema at the corners.

(I am not explaining this very well but basically I have in mind the sort of argument used in Linear Programming to prove that if you have a linear function and you want to maximize it over a convex polygon the maximum occurs at a vertex of the polygon. The argument goes that you head in the direction of the gradient of the function and eventually you ram against the wall, keep going, and end up in a corner.)
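The linear programming fact invoked above can be checked numerically on a toy example. This is only an illustration of the statement about linear functions on a convex polygon, not of the eigenfunction itself; the function name, the sample triangle and the direction vector `c` are all made up for the sketch.

```python
import numpy as np

def max_of_linear_on_triangle(vertices, c, n_samples=10_000, seed=0):
    """Return (largest sampled value, largest vertex value) of x -> c.x on a triangle."""
    rng = np.random.default_rng(seed)
    vertices = np.asarray(vertices, dtype=float)
    c = np.asarray(c, dtype=float)
    # Random barycentric weights give random points inside the triangle.
    weights = rng.dirichlet(np.ones(3), size=n_samples)
    points = weights @ vertices
    return float((points @ c).max()), float((vertices @ c).max())

# Hypothetical triangle and direction, chosen only for the demonstration.
sampled, at_vertex = max_of_linear_on_triangle(
    [(0.0, 0.0), (1.0, 0.0), (0.3, 0.8)], c=(1.0, 0.5)
)
print(sampled <= at_vertex + 1e-12)
```

Every sampled point is a convex combination of the vertices, so a linear function can never exceed its largest vertex value there. A function that is monotone along a fixed direction behaves similarly, which is the heuristic behind the argument.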

Comment by Chris Evans — June 16, 2012 @ 8:25 am |

Hi Chris,

– I’ve put low-resolution thumbnails of these runs on one page for easier viewing, you should be able to click on any to get the detailed plot (again somewhat low-resolution, .png seems to compress awkwardly)

http://people.math.sfu.ca/~nigam/polymath-figures/eigenpics.html

– Each triangle here corresponds to a different choice of parameter angles.

– On each plot, I’ve marked the location of the max(|u|) by a green star. You’ll notice there is only one such point in each triangle plotted, and it occurs on the vertex.

– Yes. If the nodal line doesn’t go through the vertex, the level curves of u, if you zoom in enough to the corners, are nearly concentric circles. This goes all the way back to some comment in an older thread: locally near a corner, the eigenfunction looks like that of a wedge, ie, like a P_n(r,\theta) . Near a corner I think it’s a combination of Bessel functions in the distance from the corner, and the angular variable.

– I just noticed the titles on my figures (generated by outputting some run-time data) are not scaled correctly. I can re-run the code to change the titles.

Comment by Nilima Nigam — June 16, 2012 @ 2:37 pm |

even more at http://people.math.sfu.ca/~nigam/polymath-figures/eigen2.html

Comment by Nilima Nigam — June 16, 2012 @ 4:31 pm |

Ah, you’re right, the conjecture is false. Around any acute vertex, if the eigenfunction takes the value […] at that vertex, then the eigenfunction has the asymptotic expansion […] where r is the distance to that vertex, so the level sets locally are indeed close to circles. (This was concealed in the super-equilateral isosceles case because the non-extreme vertex had […].) That’s a pity – now I don’t know if there are any plausible monotonicity conjectures one can make that would be valid for all acute triangles.
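For reference, here is a sketch of why the level sets are nearly circular, using the standard Bessel expansion of a Neumann eigenfunction near a vertex. The symbols are notation chosen here rather than taken from the thread: $\alpha$ is the interior angle at the vertex, $(r,\theta)$ are polar coordinates centred there, $\lambda$ is the eigenvalue, and $a = c_0$ is the value of the eigenfunction at the vertex.

```latex
\[
u(r,\theta) \;=\; \sum_{k \ge 0} c_k \, J_{k\pi/\alpha}\!\big(\sqrt{\lambda}\, r\big)\,
\cos\!\Big(\frac{k\pi\theta}{\alpha}\Big),
\qquad
J_0(z) = 1 - \frac{z^2}{4} + O(z^4), \quad J_\nu(z) = O(z^\nu).
\]
\[
\alpha < \frac{\pi}{2} \;\Longrightarrow\; \frac{\pi}{\alpha} > 2
\;\Longrightarrow\;
u(r,\theta) \;=\; a - \frac{a\lambda}{4}\, r^2 + O\!\big(r^{\min(4,\,\pi/\alpha)}\big).
\]
```

When $a \neq 0$ the leading terms depend only on $r$, so the level curves close to the vertex are approximately circular arcs and the vertex is a local extremum. When $a = 0$ the radial term drops out and the angular terms dominate.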

Comment by Terence Tao — June 16, 2012 @ 5:15 pm |

Hi Terry,

I discussed with Chris this afternoon and I’m still worried about the PDE proof of Corollary 4. Sorry that I have come back to this point many times.

The point again is about the reflection part. It is too good to be true. The solution of the heat equation is OK when you do even reflection. However, the gradient of the new solution in the reflected region may not stay in the convex sector $S$ anymore.

Let me give a clear example. Assume that we have […] with […]. Let […] be the even reflection of […], i.e. […] and […]. Then […]. So […] may not be in […] for […] anymore.

Comment by Hung Tran — June 16, 2012 @ 12:41 am |

Yes, the gradient of the reflected solution may leave S in the reflected region, but this is not relevant to the argument, as one only needs the gradient to lie in S (or in […]) in the original domain. (In particular, when one discusses things like “the first time the gradient touches the boundary of […]”, it is understood that one is working in the original domain.)

Comment by Terence Tao — June 16, 2012 @ 2:59 am |

Then how could you conclude that […] points inward or tangentially, where […] is the first point at which […] touches the boundary of […]? As now, in the neighborhood of […], […] takes values not only in […] or […].

Comment by Hung Tran — June 16, 2012 @ 3:38 am |

Ah, right. If one is working on a boundary point […], then as you say, in a neighbourhood of that point, […] will live either in […] or its reflection, but both of these sets (assuming for sake of concreteness that S is oriented in the first quadrant, with one side on the x axis) lie to the right of where […] will be, which in this case is […]. So […] will have a non-negative first component and so will point inwards of […] (or of its reflection).

Alternatively, one can argue entirely inside the original domain without reflection using directional derivatives (and the Neumann boundary condition) to get the inward/tangential nature of the Laplacian.

Comment by Terence Tao — June 16, 2012 @ 4:05 am |

Thank you. I will double-check this carefully. Just a quick comment that we want to have […] point inwards or tangentially to […] (NOT its reflection) in order to obtain the contradiction. So in this example, we need both of the components to be non-negative.

Anyway, I’ll move on to consider other directions.

Comment by Hung Tran — June 16, 2012 @ 5:22 am |

At the point […], the outward normal to […] is parallel to the boundary, so that […] and its reflection have the same normal (and thus the same notion of “inwards” and “tangentially”).

Comment by Terence Tao — June 16, 2012 @ 5:48 am |

This is an important point. In fact as far as I can see it is the only place in the proof where it is used that the axes of S are oriented exactly along the directions of DB and AB.

I tried reproducing the argument for a triangle with Neumann conditions on the three edges. Setting $S$ to be the sector corresponding to the exterior of one of the angles in a triangle, I tried to check whether, when the initial gradients are all in $S$, they would stay in $S$. Note that this would imply some monotonicity along the three edges.

The same argument goes through as long as these two edges form an obtuse or right angle. It is exactly the same proof, but it fails for acute angles.

The way it fails for acute angles is funny. It is exactly because the prolongations of the edges of […] do not intersect the boundary […] perpendicularly. It is necessary that these two intersect perpendicularly to make Terry’s reflection argument work.

I guess that in light of thread 3 we should not expect a monotonicity result like this one to hold for acute triangles.

Comment by Luis S — June 18, 2012 @ 9:12 pm |

I’ve been reading the latest summary, and I’m not sure what point 3. above and the orange region say about the hot spots conjecture for almost 30-60-90 triangles, in a neighbourhood in parameter space of point […] above.

I followed the link in the summary to (one of) the Banuelos and Pang papers. The abstract of the 2008 paper I glanced at mentioned a “snowflake type boundary” ; so, I can’t say I understand how the Banuelos and Pang paper from 2008 relates to the stability of the second eigenvalue & eigenfunction for the Neumann Laplacian in the orange region of the parameter space. Thanks for another illuminating summary.

Comment by meditationatae — June 16, 2012 @ 2:03 am |

Theorem 1.3 of the Banuelos-Pang paper gives continuity of the second eigenvalue, and Theorem 1.5 gives stability of the second eigenfunction, under the assumption of Gaussian bounds on heat kernels (which are certainly true for acute angled triangles) together with simplicity of the second eigenvalue. (Banuelos-Pang developed these results with the intention of applying them to snowflake domains, but the results are more general than this.)

Comment by Terence Tao — June 16, 2012 @ 3:01 am |

Ok. Many thanks. That clears up my questions about the Banuelos-Pang paper.

David Bernier

Comment by meditationatae — June 16, 2012 @ 3:17 am |

Here is a basic way to get stability of the second eigenfunction. Consider an acute triangle with second eigenvalue $\lambda_2$ and third eigenvalue $\lambda_3$, thus the Rayleigh quotient $\int |\nabla u|^2 / \int u^2$ (with integrals over the triangle) for mean zero $u$ is lower bounded by $\lambda_2$, with equality when $u$ is a scalar multiple of the second eigenfunction $u_2$, and lower bounded by $\lambda_3$ when $u$ is orthogonal to $u_2$.
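The two lower bounds on the Rayleigh quotient combine into a single quantitative statement, which is the mechanism behind the stability argument. The following is a sketch in notation introduced here: $\Omega$ denotes the triangle, $u_2$ is normalised to have $L^2$ norm 1, and a mean zero $u$ of norm 1 is split as $u = a u_2 + w$ with $w$ mean zero and orthogonal to $u_2$.

```latex
\[
\int_\Omega \nabla u_2 \cdot \nabla w \;=\; \lambda_2 \int_\Omega u_2\, w \;=\; 0
\qquad \text{(integration by parts, Neumann condition)},
\]
\[
\int_\Omega |\nabla u|^2
\;=\; a^2 \lambda_2 + \int_\Omega |\nabla w|^2
\;\ge\; a^2 \lambda_2 + \lambda_3 \|w\|_{L^2}^2
\;=\; \lambda_2 + (\lambda_3 - \lambda_2)\, \|w\|_{L^2}^2 ,
\]
```

using $a^2 + \|w\|_{L^2}^2 = 1$ in the last step. A function whose Rayleigh quotient is close to $\lambda_2$ must therefore have a small component $w$ orthogonal to $u_2$, with the smallness controlled by the spectral gap $\lambda_3 - \lambda_2$.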

Now consider a perturbed triangle […] for some linear transformation $B$ close to the identity. The second eigenvalue of the perturbed triangle is the minimum of the perturbed Rayleigh quotient […], where […] is the self-adjoint matrix such that […].

Consider the second eigenfunction […] of the perturbed problem (viewed on the reference triangle rather than on the perturbed one), normalised to have L^2 norm 1. We can split it as […], where […] has unit norm and is orthogonal to $u_2$ (and also mean zero), and […] are scalars with […]. We will show that […], which shows that […] is within […] in L^2 norm of (a scalar multiple of) the original eigenfunction $u_2$ when there is a large spectral gap.

Since […] minimises the perturbed Rayleigh quotient, one can compare it against the original eigenfunction $u_2$ to obtain the inequality

[…]

Expanding out […], noting from integration by parts that […] is orthogonal to […], and using […], then after some algebra one ends up at

[…]

After some Cauchy-Schwarz (bounding […] by 1 and recalling that […]) this becomes

[…]

Now, one can bound the […] factors on the RHS by […]. If one does this and rearranges for a while, one eventually ends up with the bound

[…]

(assuming […] small enough); since […], this gives the bound on […].

This type of perturbation analysis measures the perturbation in L^2 and in H^1 with quite explicit bounds. What one really wants though is uniform (or even better, C^1 or C^2) bounds, as this gives enough control to start tracking where the extrema go, but I think one may be able to get that from the L^2 and H^1 bounds by using the Bessel function expansion formalism (but the bounds get worse when doing so, unfortunately). Still, one may be able to work everything out explicitly for, say, perturbations of the 30-60-90 triangle, so that we can get an explicit neighbourhood of that triangle for which one can establish the conjecture.

Comment by Terence Tao — June 16, 2012 @ 3:53 am |

One thing I realized about the above analysis is that it also works when there is multiplicity of the second eigenvalue, and in particular when the reference triangle is the equilateral triangle. In this case, $u_2$ becomes the projection of the perturbed eigenfunction to the second eigenspace (normalized to have norm one). So this should imply that for a perturbed equilateral triangle, the second eigenfunction is close to some second eigenfunction of the equilateral triangle in H^1 norm at least. Integration by parts also gives uniform H^3 bounds on this eigenfunction, so some interpolation (Gagliardo-Nirenberg type inequalities) should show that the second eigenfunction of the perturbed triangle is close in C^0 (and probably also C^1) to a second eigenfunction of the equilateral triangle. I think I can show that for all second eigenfunctions of the equilateral triangle, the extrema only occur at the vertices (and are uniformly bounded away from the extrema once one is a bounded distance away from the vertices), so this should indeed establish the claim stated previously that the extrema can only occur at the vertices for perturbed equilateral triangles.

Comment by Terence Tao — June 16, 2012 @ 5:01 pm |

This would be great, and I think one can get some numerical evidence for the desired uniform bounds. I can compute the L^2-projection of an eigenfunction onto the eigenfunction of a nearby triangle. In other words, I can compute […] in your notation, and try to get a feel for the sup-norm bounds on derivatives, for perturbed eigenfunctions in the neighbourhood of a few different ‘reference’ triangles. I’ll fix one side of the triangles to be 1.

I had a quick question. In your argument, the asymptotic constant in the bound for […] presumably depends on the measure of the original acute triangle, […]. Let’s call it […].

In your series of calculations above, do you recall whether the constant scaled as the area or the square root of the area? Once again, I ask because this will help me decide how small to choose the neighbourhoods of the reference triangles: we don’t want […] to get big.

[Fixed latex. Incidentally, there was an error in the latex instructions on this blog (now fixed) – one needs to enclose latex commands in “$latex” and “$” rather than “<em>” and “</em>”. -T.]

Comment by Nilima Nigam — June 16, 2012 @ 7:23 pm |

The implied constants in the above analysis are actually dimensionless – they don’t depend on the area of the triangle. Instead, they depend on the operator norm of the perturbing matrix […].

Comment by Terence Tao — June 16, 2012 @ 7:29 pm |

So this constant is uniform for all acute triangles? In other words, if I perturb *any* triangle […] by an affine map which is close to the identity, the same bound […] should hold? This would be good news for purposes of coding.

Comment by Nilima Nigam — June 16, 2012 @ 9:22 pm |

I wrote a more careful computation at http://michaelnielsen.org/polymath1/index.php?title=Stability_of_eigenfunctions . The upshot is that if one takes a perturbation […] of the triangle […], and considers a second eigenfunction of […] which (after rescaling back to […]) takes the form […] for some second eigenfunction $u_2$ of […] of norm 1, and some v orthogonal to u_2, then

[…]

provided that the denominator is positive, where […] is the condition number of B, and […] is the first Neumann eigenvalue that is strictly greater than $\lambda_2$. (This is slightly different from what I said before; the factor of […] in my previous post should have instead been a […].)

Comment by Terence Tao — June 17, 2012 @ 12:02 am |

Thank you, and also for the detailed notes on the wiki. This is a relief. The previous bound did not appear to hold in some numerics I ran.

Comment by Nilima Nigam — June 17, 2012 @ 3:02 am |

For what it’s worth, here is Terry’s argument, with calculations done in terms of functions pulled back to an isosceles right-angled triangle. http://people.math.sfu.ca/~nigam/polymath-figures/Perturbation.pdf

Comment by Nilima Nigam — June 19, 2012 @ 11:20 pm |

Here is a Mathematica notebook with calculations for the proof of simplicity. This is not the full proof. It reduces the problem to solving a set of linear inequalities. There are 2 cases. I am also attaching a plot with acute triangles as yellow area and red/blue as the cases. Clearly everything is covered. Now I just need to find the cleanest way to handle those inequalities.

http://pages.uoregon.edu/siudeja/TrigInt.m (this one is needed to run the notebook)

http://pages.uoregon.edu/siudeja/simple.nb (first line needs to be edited)

simple_plot.pdf (the attached plot)

The first case uses 3 reference triangles and rotated symmetric eigenfunction of equilateral for upper bound. The second case uses 2 reference triangles and Cheng’s bound.

Comment by Bartlomiej Siudeja — June 16, 2012 @ 6:50 pm |

I’ve been thinking about how one would try to use rigorous numerics to verify the hot spots conjecture for acute-angled triangles. In previous discussion, we proposed covering the parameter space with a mesh of reference triangles, getting rigorous bounds on the eigenfunctions and eigenvalues of these reference triangles, and then using stability analysis to cover the remainder of parameter space. This would be tricky though, in part because we would need rigorous bounds for the finite element schemes for each of the reference triangles.

But another possibility is to only use for the reference triangles the triangles for which explicit formulae for the eigenfunctions and eigenvalues are available, namely the equilateral triangles, the isosceles right-angled triangles, the 30-60-90 triangles, and the thin sector (a proxy for the infinitely thin isosceles triangle). In other words, to use the red dots in the above diagram as references.

The trouble is that if one does a naive perturbation analysis, one could only hope to verify the hot spots conjecture in small neighborhoods of each red dot, which would not be enough to cover the entire parameter space. But I think, in principle at least, that one has a way to amplify the perturbation analysis to get much larger neighborhoods of each reference triangle, by using not just the lowest eigenvalue and eigenspace of the reference triangle, but the first k eigenvalues and first k eigenspaces for some medium-sized k (e.g. k = 100). This would necessitate the use of 100 x 100 linear algebra (e.g. minimizing a quadratic form on 100 variables) but this seems well within the capability of rigorous numerics. As long as the strength of one’s perturbation analysis grows at a reasonable rate with k, this may well be enough to rigorously cover the entire parameter space.

For instance, consider perturbing off of the isosceles right triangle with vertices (0,0), (0,1), (1,1). The eigenfunctions here are quite explicit (because one can reflect the triangle indefinitely to fill out the plane periodically with periods (0,2), (2,0), and then use Fourier series): every pair of integers (k,l) gives rise to an eigenfunction

$\cos(\pi k x)\cos(\pi l y) + \cos(\pi l x)\cos(\pi k y)$

with eigenvalue $\pi^2(k^2+l^2)$. Thus, for instance, the second eigenfunction is (up to constants) $\cos(\pi x) + \cos(\pi y)$ with eigenvalue $\pi^2$, the next eigenfunction is $\cos(\pi x)\cos(\pi y)$ with eigenvalue $2\pi^2$, the next eigenfunction is $\cos(2\pi x) + \cos(2\pi y)$ with eigenvalue $4\pi^2$, and so forth (the next eigenvalue, for instance, is $5\pi^2$).
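As a quick sanity check on this list, the spectrum of the isosceles right triangle can be enumerated directly. The sketch below assumes the symmetrised cosine modes $\cos(\pi k x)\cos(\pi l y) + \cos(\pi l x)\cos(\pi k y)$ with eigenvalue $\pi^2(k^2+l^2)$, indexed by unordered pairs of non-negative integers; the function names are made up for the illustration.

```python
import math

def right_isosceles_neumann_spectrum(max_index):
    """Sorted (k^2 + l^2, (k, l)) for unordered pairs 0 <= l <= k <= max_index.

    The eigenvalue is pi^2 times the first entry, so the integers stay exact.
    The list is complete only for values up to max_index**2.
    """
    modes = [(k * k + l * l, (k, l))
             for k in range(max_index + 1)
             for l in range(k + 1)]
    return sorted(modes)

def eigenfunction(k, l, x, y):
    """Symmetrised cosine mode; (k, l) and (l, k) give the same function."""
    return (math.cos(math.pi * k * x) * math.cos(math.pi * l * y)
            + math.cos(math.pi * l * x) * math.cos(math.pi * k * y))

spectrum = right_isosceles_neumann_spectrum(3)
for scaled, (k, l) in spectrum[:6]:
    print(f"(k, l) = ({k}, {l}): eigenvalue = {scaled} * pi^2")
```

The first entry is the constant mode with eigenvalue 0. The mode (1, 0) after it is the second eigenfunction, and the next distinct value is twice as large, so this reference triangle has a comfortable spectral gap.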

As discussed in the wiki, if one wants to find the second eigenfunction for a perturbed triangle $BT$ (the image of the reference triangle $T$ under a linear map $B$), this amounts to minimizing the quadratic form $\int_T \nabla u^T M \nabla u$ amongst mean zero functions of norm 1, where $M = B^{-1} (B^{-1})^T$. This is a quadratic minimization problem on an infinite-dimensional Hilbert space. However, one can restrict u to a finite-dimensional subspace, namely the space generated by the first k eigenfunctions of the reference triangle. For instance, one could consider u which are a linear combination of $\cos(\pi x) + \cos(\pi y)$, $\cos(\pi x)\cos(\pi y)$, and $\cos(2\pi x) + \cos(2\pi y)$. This minimization problem could be computed with rigorous numerics without difficulty.
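The finite-dimensional minimization can be sketched numerically (not rigorously) as a 3 x 3 Rayleigh-Ritz problem. The sketch below assumes the reference triangle with vertices (0,0), (0,1), (1,1), a symmetric positive definite 2 x 2 matrix `M` in the quadratic form, and the three cosine trial functions of the reference triangle; the names `basis`, `ritz_values` and `B_map` are hypothetical, and ordinary floating-point Gauss quadrature stands in for the interval arithmetic a rigorous computation would need.

```python
import numpy as np

def basis(x, y):
    """Three reference eigenfunctions and their partial derivatives."""
    pi = np.pi
    f = np.array([np.cos(pi * x) + np.cos(pi * y),
                  np.cos(pi * x) * np.cos(pi * y),
                  np.cos(2 * pi * x) + np.cos(2 * pi * y)])
    fx = np.array([-pi * np.sin(pi * x),
                   -pi * np.sin(pi * x) * np.cos(pi * y),
                   -2 * pi * np.sin(2 * pi * x)])
    fy = np.array([-pi * np.sin(pi * y),
                   -pi * np.cos(pi * x) * np.sin(pi * y),
                   -2 * pi * np.sin(2 * pi * y)])
    return f, fx, fy

def ritz_values(M, n=40):
    """Ritz values of the form int_T grad(u)^T M grad(u) over int_T u^2."""
    nodes, weights = np.polynomial.legendre.leggauss(n)
    s = 0.5 * (nodes + 1.0)          # Gauss nodes mapped to [0, 1]
    w = 0.5 * weights
    S, T = np.meshgrid(s, s, indexing="ij")
    # Map the unit square onto the triangle: (s, t) -> (x, y) = (s t, t),
    # whose Jacobian determinant is t.
    W = np.outer(w, w) * T
    f, fx, fy = basis(S * T, T)

    def pair(a, b):
        return np.einsum("iab,jab,ab->ij", a, b, W)

    A = (M[0, 0] * pair(fx, fx) + M[0, 1] * pair(fx, fy)
         + M[1, 0] * pair(fy, fx) + M[1, 1] * pair(fy, fy))   # stiffness
    B = pair(f, f)                                            # mass
    L = np.linalg.cholesky(B)
    Linv = np.linalg.inv(L)
    # Reduce the generalized problem A c = mu B c to a standard symmetric one.
    return np.linalg.eigvalsh(Linv @ A @ Linv.T)

print(ritz_values(np.eye(2)) / np.pi ** 2)       # unperturbed triangle

B_map = np.array([[1.0, 0.1], [0.0, 1.0]])       # hypothetical small shear
B_inv = np.linalg.inv(B_map)
print(ritz_values(B_inv @ B_inv.T) / np.pi ** 2)
```

For `M` equal to the identity the trial functions are exact eigenfunctions, so the Ritz values should reproduce $\pi^2$, $2\pi^2$, $4\pi^2$. For a perturbed `M` the smallest Ritz value is only an upper bound for the true second eigenvalue; turning it into a two-sided rigorous statement is exactly the stability question raised in the comment.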

What I believe to be true is that there are some rigorous stability inequalities that relate the minimizers of the finite-dimensional variational problem with the minimizers of the infinite-dimensional problem, with the error bounds improving in k (roughly speaking, I expect the H^1 norm of the error to decay like [formula missing] or even [formula missing]). By increasing k, one thus gets quite good rigorous control on where the minimizers are.

Unfortunately, the H^1 norm is not good enough to control where the extrema go: one would prefer to have control in a norm such as C^0 or (even better) C^1. But it should be possible to use Bessel function expansions and the eigenfunction equation to convert H^1 control of an eigenfunction to C^0 or C^1 control, though this could get a bit messy with regards to the explicit constants in the estimates. But in principle, this provides a completely rigorous way to control the eigenfunction in arbitrarily large neighborhoods of reference triangles in parameter space, and so for some sufficiently large but finite k (e.g. k=100) one may be able to cover everything rigorously.

Of course, it would be preferable to have a more conceptual way to prove hot spots than brute force numerical calculation (since, among other things, a conceptual argument would be easier to generalize to other domains than triangles), but I think the numerical route is certainly a viable option to pursue if we don’t come up with a promising conceptual attack on the problem.

Comment by Terence Tao — June 17, 2012 @ 10:26 pm |

I’ve been pondering something similar. Even for a conceptual approach, there’s a duality between the problem of locating the extrema of eigenfunctions of [formula missing] on a right-angled isosceles triangle and that of the hot spot conjecture. I’m of the view that a successful conceptual attack will deal with all the cases (except, maybe, the equilateral one), in one go.

As far as numerics go, here was my thinking:

A) one can get very good approximations of both the initial part of the spectrum and eigenfunctions of a positive definite operator on a right-angled isosceles triangle, using non-polynomial functions in a spectral approach.

B) you had a perturbation analysis in thread 6 from one triangle to another, nearby one;

C) one can pull back both triangles in your analysis to a right-angled isosceles one, and do the perturbation analysis there. The constants include, explicitly, the information about the pullback. I am doing this to check whether how big an \epsilon I was allowed to pick depended on the shape of the triangles. I’ll post a link to this (naive) write-up to the wiki soon.

For any numerical attack, I think it’s extremely important to record how the eigenvalues are being located, or how the minimizer is being found.

For (A) I use approximations [formula missing] where the basis functions are not piecewise polynomials. I’m writing up both a Fourier spectral method and a method based on Bessel functions. This is in the debugging stage, and I will wait for better results before I upload details.

Comment by Nilima Nigam — June 17, 2012 @ 11:07 pm |

what about a partition-of-unity approach, where one takes 4 patches (3 which include the corners, and one for the center)? we’ve got great control of what happens near the corners.

Comment by Nilima Nigam — June 17, 2012 @ 11:10 pm |

I apologize about not describing what I meant by a spectral approach. On a square, I know that [formula missing] are orthogonal. So one writes the approximation as [formula missing], writes a discrete variant of the eigenproblem, and solves it for the unknown coefficients. One clearly has to take the basis functions with the correct symmetry, while working on the triangle. These basis functions don’t satisfy the prescribed boundary conditions, so the discrete formulation has to be carefully done.

Comment by Nilima Nigam — June 17, 2012 @ 11:21 pm |

One advantage of the quadratic form minimization approach is that one does not need to explicitly keep track of boundary conditions, as the minimizer to the quadratic form will automatically obey the required Neumann conditions. It’s true though that it isn’t absolutely necessary to use the reference eigenfunctions as the basis for the approximation, and that other bases could be better (for instance, in the spirit of what was done to show simplicity of eigenvalues, one could take an overdetermined basis consisting of eigenfunctions pulled back from multiple reference triangles). This would of course make the rigorous stability and perturbation analysis more complicated, though, so there is some tradeoff here. I guess we could do some numerical experiments to probe the efficiency of various types of bases for approximate eigenfunctions.

Comment by Terence Tao — June 18, 2012 @ 12:04 am |

The most efficient set of approximating functions I’ve found (in terms of fewest non-zero coefficients) are: some Bessel functions centered at the three corners, and then I threw in some trigonometric polynomials. These aren’t orthogonal, and I’d have to show the discrete problem was consistent with the original one.

Comment by Nilima Nigam — June 18, 2012 @ 12:46 am |

All explicitly known eigenfunctions for triangles are trigonometric polynomials (equilateral, right isosceles and 30-60-90). So a basis made of linear perturbations of these is very reasonable. However, transplantation of known cases gives extremely ugly Rayleigh quotients on arbitrary triangles. Trig functions do not mix well. But, this can be done. That is how I am proving simplicity.

For nearly degenerate triangles Bessel functions must be best (Cheng’s bound is obtained this way), but linear functions, varying along the long side, adjusted to have average 0 are really good too. After all, sides are almost perpendicular and variation must happen along the long side. For simplicity of nearly degenerate triangles I am using a linear function (it gives slightly better results for not-so-nearly-degenerate cases).

Comment by Bartlomiej Siudeja — June 18, 2012 @ 3:58 pm |

This reminds me of a small remark I wanted to make on the nearly degenerate case. Instead of approximating by a thin sector, one can instead rescale to the right-angled triangle with corners (0,0), (1,0), (0,1), in which case one is trying to minimise a Rayleigh quotient the numerator of which looks something like [formula missing] for some large A (this is the formula for a thin right-angled triangle, in general there will also be a mixed term involving [formula missing] which I will ignore for this discussion). One can then map this to the unit square S with corners (0,0), (1,0), (0,1), (1,1) via the transformation [formula missing], in which case the numerator now becomes [formula missing] and the denominator [formula missing]. Morally, when A is large the second term in this numerator forces u to be nearly independent of y. On the other hand, to minimise [formula missing] assuming mean zero one easily computes that the minimiser comes from our old friend, the Bessel function [formula missing] with eigenvalue [formula missing]. It is then tempting to analyse this quotient using a product basis consisting of tensor products of Bessel functions in the x variable and cosines in the y variable, i.e. [formula missing] for various integers k,l. Among other things this should give an alternate proof of the hot spots conjecture in the nearly degenerate case, though I didn’t work through the details.

Comment by Terence Tao — June 18, 2012 @ 5:25 pm |

I like this line of thinking.

Using a basis of the form [formula missing] is pretty much what I’m coding up, and is the heart of the method of particular solutions (Fox-Henrici-Moler, Betcke-Trefethen and later Barnett-Betcke).

Comment by Nilima Nigam — June 18, 2012 @ 6:05 pm |

After doing some theoretical calculations, I’m now coming around to the opinion that bases of trigonometric polynomials in a reference triangle are not as accurate as one might hope for, unless the triangle being analysed is very close to the reference triangle. The problem lies in the boundary conditions: an eigenfunction in a triangle is going to be smooth except at the boundary, but when one transplants it to the reference triangle (say, the 45-45-90 triangle for sake of argument) and then reflects it into a periodic function (in preparation for taking Fourier series) it will develop a sawtooth-like singularity at the edges of the reference triangle due to the slightly skew nature of the boundary conditions (distorted Neumann conditions). As a consequence, the Fourier coefficients decay somewhat slowly: a back-of-the-envelope calculation suggests to me that even if one uses all the trig polynomials of frequency up to some threshold K (so, one is using about K^2 different trig polynomials in the basis), the residual error in approximating the sawtooth-type eigenfunction in this basis is about [formula missing] in L^2 norm, and [formula missing] in H^1 norm, if the triangle is within $\epsilon$ of the reference triangle. This is pretty lousy accuracy; in practice it suggests that unless $\epsilon$ is very small, one would need to take K in the thousands (i.e. work with a basis of a million or so plane waves) to get usable accuracy.

So it may be smarter after all to try to use a basis that matches the boundary conditions better, even if this makes the orthogonality or the explicit form of the basis more complicated.
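The slow decay caused by a kink can be seen in a one-dimensional toy model. The sketch below is an illustration under its own assumptions, not the two-dimensional calculation from the comment: it takes the function |x - 1/2| on [0,1], whose cosine series has coefficients 2/(m^2 pi^2) at frequencies n = 2m with m odd (and zero at all other n >= 1), and measures the L^2 and H^1 size of the part of the series with frequency above K. The name `tail_errors` and the truncation parameter `n_terms` are hypothetical.

```python
import numpy as np

def tail_errors(K, n_terms=1_000_000):
    """L^2 and H^1-seminorm size of the cosine-series tail of |x - 1/2|.

    The infinite tail is truncated after n_terms nonzero coefficients,
    which is an approximation.
    """
    m = np.arange(1, 2 * n_terms, 2, dtype=float)   # odd m
    n = 2.0 * m                                     # frequencies with a_n != 0
    a = 2.0 / (m ** 2 * np.pi ** 2)                 # cosine coefficients
    keep = n > K
    # On [0, 1], cos(n pi x) and sin(n pi x) both have squared L^2 norm 1/2.
    l2 = np.sqrt(0.5 * np.sum(a[keep] ** 2))
    h1 = np.sqrt(0.5 * np.sum((n[keep] * np.pi * a[keep]) ** 2))
    return l2, h1

for K in (100, 400, 1600):
    l2, h1 = tail_errors(K)
    print(f"K = {K:5d}   L2 tail = {l2:.3e}   H1 tail = {h1:.3e}")
```

In this model, quadrupling K shrinks the L^2 tail by roughly a factor of 8 and the H^1 tail only by roughly a factor of 2, which is the kind of slow convergence that motivates a basis adapted to the boundary conditions.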

Comment by Terence Tao — June 20, 2012 @ 8:18 pm |

yes, the trig functions are pretty inefficient here. Here’s what works (without proof right now, so I won’t post results): take some bessel functions around the corners, add in some trig functions. take a linear combination. evaluate the normal derivatives at points on the boundary, and enforce that the linear combination does not vanish at randomly selected points in the interior. set up an overdetermined system (ie, take more points than unknowns), find the QR decomposition of the matrix. this gives you orthogonal columns, which correspond to a discrete orthogonal basis. use this to solve for the eigenvalues and eigenfunctions. it’s a tweak of what Trefethen and Betcke did, and the results are not as spectacular. but I can get results with 30 unknowns that I can’t using a basis of 200 purely trig functions.

Comment by Nilima Nigam — June 20, 2012 @ 8:52 pm |

Hmm, I can see that this scheme would give good numerical results, but obtaining rigorous bounds on the accuracy of the numerical eigenfunctions obtained in this manner could be tricky.

One possibility is to compute the residual [formula missing] of the numerically computed eigenvalue [formula missing] and eigenfunction [formula missing]. Assuming the numerical eigenfunction is smooth enough (e.g. [formula missing] except at the vertices, where the third derivative blows up slower than [formula missing]) and obeys the Neumann boundary conditions exactly, we have the expansion

[formula missing]

where [formula missing] are the true orthonormal eigenbasis of the Neumann Laplacian. If [formula missing], this implies in particular that

[formula missing]

where [formula missing]. Thus, an [formula missing] bound on the residual, together with a sufficiently good rigorous lower bound on the third eigenvalue (in particular producing a gap between this eigenvalue and the numerical second eigenvalue), gives an [formula missing] bound on the error:

[formula missing]

One can also get control on lower order terms from the Poincare inequality, thus

[formula missing]

for i=0,1,2.

This way, we don't have to do any analysis at all of the numerical scheme that comes up with the numerical eigenvalue and eigenfunction, so long as we can rigorously compute the residual. Note from Sobolev embedding that H^2 controls C^0, so in principle this is enough control on the error to start locating extrema…
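A standard version of this residual argument can be written out as follows. This is a sketch in assumed notation ($\tilde u$, $\tilde\lambda$ for the numerical eigenpair, $u_k$, $\lambda_k$ for the true ones with $0 = \lambda_1 < \lambda_2 < \lambda_3 \le \dots$, and $r$ for the residual), and it is not claimed to be the exact set of inequalities from the comment. It assumes $\tilde u \in H^2$ satisfies the Neumann condition exactly and that $0 < \tilde\lambda < \lambda_3$.

```latex
% Expand the numerical eigenfunction in the true orthonormal eigenbasis:
\[
  \tilde u = \sum_{k \ge 1} c_k u_k, \qquad c_k = \langle \tilde u, u_k \rangle_{L^2},
  \qquad
  r := -\Delta \tilde u - \tilde\lambda \tilde u
     = \sum_{k \ge 1} (\lambda_k - \tilde\lambda)\, c_k u_k .
\]
% Parseval then gives
\[
  \| r \|_{L^2}^2 = \sum_{k \ge 1} (\lambda_k - \tilde\lambda)^2 c_k^2 .
\]
% Since |\lambda_1 - \tilde\lambda| = \tilde\lambda and
% \lambda_k - \tilde\lambda \ge \lambda_3 - \tilde\lambda for k \ge 3,
\[
  \| \tilde u - c_2 u_2 \|_{L^2}^2
  = c_1^2 + \sum_{k \ge 3} c_k^2
  \le \left( \frac{1}{\tilde\lambda^2}
      + \frac{1}{(\lambda_3 - \tilde\lambda)^2} \right) \| r \|_{L^2}^2 .
\]
% For second derivatives, use that t \mapsto t/(t - \tilde\lambda) is
% decreasing for t > \tilde\lambda (the k = 1 term vanishes since \lambda_1 = 0):
\[
  \| \Delta (\tilde u - c_2 u_2) \|_{L^2}^2
  = \sum_{k \ge 3} \lambda_k^2 c_k^2
  \le \left( \frac{\lambda_3}{\lambda_3 - \tilde\lambda} \right)^2 \| r \|_{L^2}^2 .
\]
```

Only a rigorous lower bound for $\lambda_3$ and a rigorous evaluation of $\| r \|_{L^2}$ enter these estimates; passing from a bound on the Laplacian to a full $H^2$ bound on a triangle needs an additional elliptic regularity estimate that is not shown here.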

Comment by Terence Tao — June 20, 2012 @ 11:11 pm |

I agree, the numerical analysis is gnarly, Betcke and Barnett did it for the Dirichlet case on analytic domains for the method of particular solutions http://arxiv.org/pdf/0708.3533.pdf

For the finite element calculations and the more recent attacks, I’ve been keeping track of the numerical residual [formula missing]. I can calculate the […] I’ve also been keeping track of the (numerical) spectral gap.

The devil is in the detail: for the finite element calculations, I can compute the integrals analytically (piecewise polynomials on triangles). For the new bessel+trig calculations, I can’t compute the integrals analytically. So for the latter, the residuals are computed through high-accuracy quadrature, but not analytically.

Comment by Nilima Nigam — June 20, 2012 @ 11:57 pm |

The second eigenfunction of the Neumann Laplacian for the unit interval $[0, 1]$ is $\cos(2\pi x)$. I’ve been thinking of trying to prove that the equivalent of the hot spots conjecture for this eigenfunction holds, i.e. that the max and min are attained on the boundary of $[0, 1]$. The method of approach I had involved assuming the supremum of the absolute value of the eigenfunction $u$ is attained only at a point in the interior of $[0, 1]$, and using a non-constant stretching/compressing map $\phi$ from $[0, 1]$ to itself to construct $u \circ \phi$, followed by compressing/stretching the values of $u \circ \phi$ so as to preserve the $L^2$-norm of the resulting function, while hopefully decreasing the Rayleigh quotient.

I’m moderately optimistic about this being feasible for the unit interval’s second eigenfunction, but triangles are another matter altogether.

I’d be grateful if someone could tell me how to access and use the LaTeX sandbox area for Polymath blogs.

David

[The sandbox is at https://polymathprojects.org/how-to-use-latex-in-comments/ – T.]

Comment by meditationatae — June 18, 2012 @ 5:19 am |

I am not sure that I follow…

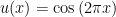

For the interval $[0,1]$ with Neumann boundary, the second eigenfunction is (I am pretty sure), as you say, $\cos(2\pi x)$. Knowing that, the fact that this function attains its max and min at the boundary points of $[0,1]$ just follows from understanding the cosine function (e.g. sketching its graph). Maybe I am missing something (your edit below makes me think you instead wanted to suggest something about the third eigenfunction perhaps)?

But in any case, for the interval $[0,1]$ I believe that explicit expressions for the eigenfunctions are known (though of course coming up with an understanding of them that doesn’t rely on the explicit expressions might be useful in that it could be generalized to two dimensional triangles).

Comment by Chris Evans — June 18, 2012 @ 6:01 am |

I read some lecture notes online about Neumann boundary conditions, and as I recall, for the unit interval one is led to an ODE for the eigenvalues and eigenfunctions of the Neumann Laplacian. So, the solution via this route is easy. From Terry’s and others’ comments, explicit expressions for the first non-trivial eigenfunction of the Neumann Laplacian for triangular regions are only known for a tiny region of the parameter space, e.g. equilateral triangles, the 45-45-90 degrees triangle and quite a bit about isosceles triangles with a very pointy angle, using the known exact solutions for sectors. I haven’t used LaTeX much recently, and that’s why I didn’t write all that much in my post. In any case, I can describe it more in words.

For the unit interval, the function $u$ on the unit interval is a sufficiently well-behaved candidate function for being the second non-trivial eigenfunction, and I also assume that $u$ has mean zero on the unit interval, as well as having derivative zero at $0$ and $1$. If needed, I also assume that $u$ has two distinct zeros in the unit interval, just as $\cos(2\pi x)$ does. Without loss of generality, we can assume that $u$ is strictly positive near 0 and near 1, and that $u$ is strictly negative in the open interval from [formula missing] to [formula missing], these last two real numbers denoting the first and second zeros of $u$ on the unit interval.

What I’m aiming at is a proof by contradiction of the analog of hot spots for the eigenfunction associated to the second non-trivial eigenvalue, where the domain of interest is simply the unit interval, rather than triangular regions. As you say, the solutions are explicitly known here and computing them is quite easy. So I’m working without assuming the knowledge of these explicit cosine solutions, relying more on physical intuition and the method/technique of Rayleigh quotients.

In my previous post, I called [formula missing] the point in the unit interval where the maximum of the absolute value of the candidate function $u$ is attained. So [formula missing] results in smaller eigenvalues for the domain $[0, K]$. That’s one of the key things I keep in mind, because it reflects how the Rayleigh quotient changes when the graph of any well-behaved function is dilated or expanded by a factor of $K$ along the x-axis; we can assume that nothing happens along the y-axis. Introducing $\phi$ which maps the unit interval to itself is a means to an end. The immediate objective is to get something like a perturbation of $u$, except that I usually think of perturbation as adding, say, [formula missing] to $u$, for some well-chosen [formula missing] and a small [formula missing]. Suppose [formula missing] is used to denote the point in the unit interval such that [formula missing] or in other words, [formula missing]. Then surely we want to have [formula missing] and [formula missing]. Locally near 0 and near 1, the Rayleigh quotient will be increased. We can deduce the value of the squared second derivative of the composite function $u \circ \phi$ at 0 and 1 from the assumption that $u$ is an eigenfunction.

The only counterfactual assumption used (apart from benign requirements such as $u$ having two zeros on the unit interval), is that there is a point [formula missing] in the interior of the unit interval such that [formula missing] and [formula missing]. I think I’d like to keep constant the $L^2$-norm of the composite function. At [formula missing], this requires decreasing further modification of the composite function by multiplying by [formula missing], which while locally near [formula missing] will maintain the contribution to the $L^2$-norm of the modified composite of $u$ with $\phi$, will locally decrease the square of the second derivative of [formula missing]. The hope is that, since by assumption [formula missing] is greater than [formula missing] and [formula missing], in total, the integral of the square of the modified composite of $u$ with $\phi$ will decrease, contradicting the Rayleigh principle.

As I recall, the Rayleigh quotient method here requires that a candidate function for the second non-trivial eigenfunction both have mean zero, and be orthogonal to the first non-trivial eigenfunction. Also, the various $\phi'(x)$ values as x ranges over $[0, 1]$ must have a mean of 1, by the fundamental theorem of calculus. The end result hoped for is that the modified function, while still satisfying the orthogonality requirements of the Rayleigh-Ritz method, have a strictly smaller Rayleigh quotient, where one proves this using a specially chosen $\phi$. Say u~ is the modified function obtained starting with u. Then u~ is in the admissible space, but has strictly lower Rayleigh quotient than u [Contradiction].

So, under the benign assumptions about two nodes for the second non-trivial eigenfunction, that eigenfunction attains its min and max at 0 or 1.

Comment by meditationatae — June 18, 2012 @ 8:21 am |

A couple of thoughts:

-Apriori I would guess that the second eigenfunction for the domain $[0,1]$ with Neumann boundary conditions has *one* zero not two. That is, I would guess that an analogue of the Nodal Line Theorem would hold and as such it would have two nodal domains. Indeed, the function [formula missing] has one zero (at [formula missing]) in the interval $[0,1]$ (Edit: Clearly I am wrong here :-). See my reply below).

-If I understand correctly, your idea is to argue by contradiction: If the second eigenfunction $u$ achieves an extremum in the interior of $[0,1]$, then by considering an appropriately chosen $\phi:[0,1]\to[0,1]$, we can show that $u \circ \phi$ in fact has a smaller Rayleigh quotient than $u$ (while still having mean zero), contradicting the fact that $u$ minimizes this quotient. This is definitely an interesting idea… in principle it could work for a triangle, but we would need to find a function $\phi$ with all these properties.

Comment by Chris Evans — June 18, 2012 @ 9:19 pm |

I’ve been reading the mathematical parts of a technical report by Moo K. Chung and others (on applied mathematics and medical imaging) called: “Hot Spots Conjecture and Its Application to Modeling Tubular Structures”. For eigenfunctions of the Neumann Laplacian, their numbering in English starts with “first eigenfunction”, followed by “second eigenfunction”, where these are denoted respectively by [formula missing] and [formula missing].

It’s clear from the presentation in that report that the first eigenfunction is a non-zero constant function, whatever the domain. They state that the nodal set of the [formula missing] eigenfunction [formula missing] divides the region or domain into at most [formula missing] sign domains.

For the unit interval, I believe that eigenfunction [formula missing] is given by the formula $\cos(2\pi x)$. I find that it has roots at $x = 1/4$ and $x = 3/4$.
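The numbering question can be checked numerically. The sketch below is an illustration with assumed names (`neumann_interval_modes`, `sign_changes`) and a standard cell-centred finite-difference discretisation of the Neumann Laplacian on [0,1]; it prints the three smallest eigenvalues in units of $\pi^2$ together with the number of sign changes of each eigenvector.

```python
import numpy as np

def neumann_interval_modes(n=400):
    """Finite-difference Neumann Laplacian on [0, 1] with n cells."""
    h = 1.0 / n
    x = (np.arange(n) + 0.5) * h          # cell centres
    main = np.full(n, 2.0)
    main[0] = main[-1] = 1.0              # reflecting (Neumann) end cells
    off = np.ones(n - 1)
    L = (np.diag(main) - np.diag(off, 1) - np.diag(off, -1)) / h ** 2
    vals, vecs = np.linalg.eigh(L)        # eigenvalues in ascending order
    return x, vals, vecs

def sign_changes(v):
    """Number of sign changes between consecutive grid values."""
    return int(np.sum(v[:-1] * v[1:] < 0))

x, vals, vecs = neumann_interval_modes()
for j in range(3):
    print(j, round(vals[j] / np.pi ** 2, 4), sign_changes(vecs[:, j]))
```

The mode with one sign change approximates $\cos(\pi x)$ (eigenvalue $\pi^2$) and the mode with two sign changes approximates $\cos(2\pi x)$ (eigenvalue $4\pi^2$), so whether the latter is called the "second" or "third" eigenfunction depends on whether the constant mode is counted.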

With respect to your second thought, a proof by contradiction is indeed what I have in mind. The laplacian of the composition of functions represented by $u \circ \phi$ seems to me to depend not only on $\phi'$, but also on $\phi''$. So an affine transformation map $\phi$, where $\phi'$ is everywhere constant, and allowing mappings from a domain to a possibly different domain for illustration, transforms the laplacian in a simple way (noting that $\phi''$ is then identically zero.) In case $\phi$ is not affine, I think the relation between the laplacian of $u$ composed with $\phi$ and the laplacian of $u$ isn’t easy to understand on an intuitive level …
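In one dimension the dependence described here is just the chain rule. The following is a short worked version, with $u$ and $\phi$ assumed twice differentiable; the symbols $a$, $b$, $\lambda$ are introduced only for this illustration.

```latex
\[
  (u \circ \phi)'(x) = u'(\phi(x))\, \phi'(x), \qquad
  (u \circ \phi)''(x) = u''(\phi(x))\, \phi'(x)^2 + u'(\phi(x))\, \phi''(x).
\]
% Affine case: \phi(x) = a x + b, so \phi' = a and \phi'' = 0. Then
\[
  (u \circ \phi)''(x) = a^2\, u''(\phi(x)),
\]
% and if -u'' = \lambda u, the composite satisfies
\[
  -(u \circ \phi)'' = a^2 \lambda\, (u \circ \phi).
\]
```

For non-affine $\phi$ the extra term $u'(\phi)\,\phi''$ means $u \circ \phi$ is in general no longer an eigenfunction, which is why only its Rayleigh quotient, and not an eigenvalue equation, is available for the comparison argument.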

Comment by meditationatae — June 19, 2012 @ 6:00 am |

Ah, you are correct… $\cos(2\pi x)$ does indeed have two zeros at the places you state (I was thinking of $\cos(\pi x)$ which has its zero at $x = 1/2$ … and not [formula missing] as I wrote). And I see I was also confused about the numbering… sorry about that!

So you are considering the more general hot-spots problem then (showing that all of the eigenfunctions attain their extrema on the boundary)? Also, if you plan to argue by Rayleigh quotients, you should only need to worry about first derivatives, no?

I played around a bit trying to make such an argument (for the first/second eigenfunction… i.e. for the simpler hot spots conjecture) but didn’t have any luck. An issue, as I see it, is that any argument has to account for the fact that there are examples of (non simply connected) domains which have the extrema of the first/second eigenfunction in the interior.

So any construction of […] would have to fail for that counter example domain. Then again, as […] in some sense “moves points around”, it may somehow be tied to the topology of the space… so maybe the construction of […] would depend on the domain being simply connected? But this is just wild conjecture… I have nothing specific in mind.

One other idea/conjecture that came up when I was playing around with this: Perhaps it is possible to show that “there cannot be an interior maximum without an interior minimum”. This is a weaker statement than the full hot-spots conjecture, but if it were true and if we also knew that the nodal line, which straddles a corner, does so in a convex way then we would be done: In the convex sub-domain the extremum would lie at the corner, therefore in the other sub-domain the extremum must also lie on the boundary.

Comment by Chris Evans — June 19, 2012 @ 7:08 am |

When I thought of trying to argue for “hot spots” (for the unit interval) by contradiction, it just so happens that the first test case that came to mind was the eigenfunction for the Neumann laplacian […], which has a minimum of […] at […] and maxima of […] at […] and […]. We would have to speak about “maximum of the absolute value of a non-trivial eigenfunction” rather than “maximum of a non-trivial eigenfunction” and “minimum of a non-trivial eigenfunction”, because for […], the minimum of […] is attained at […] only.

When arguing by Rayleigh quotients, including a domain transformation such as the […] in an earlier comment, if for simplicity’s sake we stick to […] as the name of a hypothetical counter-example of “hot spots” or a variant of “hot spots” which refers to “points which extremize the absolute value of a non-trivial eigenfunction”, the way I was thinking of it, we would need to compute the second derivative (“laplacian”) of […]. From a mental computation using the “chain rule”, the first derivative of the composition of two functions is a product of two terms, one of which in our case would be […]. Then differentiating again, the “product rule” will yield a term where […] appears. If that is the case, does it matter? I think it does, in the sense that it makes “tweaking” the numerator of the Rayleigh quotient harder to do (the numerator involves the laplacian of a test function). I might be overlooking something, so that I might not need to worry about […] … You mention topology of the domain, for instance the property of “simple connectedness” of a domain, as possibly relevant/“decisive” for the “hot spots” conjecture to hold. I think it’s an interesting topic, how topology of the domain affects the truth or falsity of the “basic” conjecture, i.e. the part relating to extrema of […].

Comment by meditationatae — June 19, 2012 @ 4:39 pm |

Yes, the interval shows that the “generalized hot-spots conjecture” is not true for the interval. That is, after multiplying by $-1$ if necessary, the second/third eigenfunction will have its hottest point in the interior. The same is true for a circular region I believe. But, as you say, the conjecture could be modified to consider the absolute value of […].

Honestly, I haven’t much thought about the generalized hot-spots conjecture… the simple one (about the first/second Neumann eigenfunction) is hard enough!

Concerning the Rayleigh quotient, I believe that for the interval it is given by $\mathcal{R}\left[u\right]=\frac{\int_0^1 (u_x)^2\ dx}{\int_0^1 u^2\ dx}$ and so only first derivatives are needed, no?
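
As a quick sanity check of this formula, here is a minimal sketch that evaluates the quotient numerically for the Neumann eigenfunctions $\cos(k\pi x)$ on $[0,1]$. The function name `rayleigh_quotient` and the midpoint-rule discretisation are illustrative choices, not something taken from the thread; the point is only that the values of $u$ and $u_x$ suffice.

```python
import math

def rayleigh_quotient(u, du, n=20000):
    """Midpoint-rule approximation of R[u] = int (u')^2 dx / int u^2 dx on [0, 1]."""
    h = 1.0 / n
    num = 0.0
    den = 0.0
    for i in range(n):
        x = (i + 0.5) * h          # midpoint of the i-th subinterval
        num += du(x) ** 2 * h      # contribution to int (u')^2
        den += u(x) ** 2 * h       # contribution to int u^2
    return num / den

# Neumann eigenfunctions cos(k*pi*x) on [0, 1]; only u and u' are supplied.
r1 = rayleigh_quotient(lambda x: math.cos(math.pi * x),
                       lambda x: -math.pi * math.sin(math.pi * x))
r2 = rayleigh_quotient(lambda x: math.cos(2 * math.pi * x),
                       lambda x: -2 * math.pi * math.sin(2 * math.pi * x))

print(r1, math.pi ** 2)        # quotient vs. eigenvalue pi^2
print(r2, 4 * math.pi ** 2)    # quotient vs. eigenvalue 4*pi^2
```

The two printed pairs should agree closely, since the quotient of an eigenfunction equals its eigenvalue.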

Comment by Chris Evans — June 19, 2012 @ 5:49 pm |

Ah, you’re right. I just read about the energy functional and variational (calculus of variations) characterization of the first/second Neumann eigenfunction, and the expression to be minimized is just what you wrote in the last paragraph. So, under a transformation of domains, it seems like we don’t need to worry about the second derivative of the map from the domain to itself …
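
To spell this out on the interval, here is a short worked computation. The symbols are assumptions made for illustration (the original notation in this thread did not survive): $\varphi$ is a smooth increasing bijection of $[0,1]$ with $\varphi' > 0$, and $v = u \circ \varphi$. The second derivative of $v$ does involve $\varphi''$, but the Rayleigh quotient, after the substitution $x = \varphi(y)$, only involves $\varphi'$.

```latex
v(y) = u(\varphi(y)), \qquad
v_y = u_x(\varphi(y))\,\varphi'(y), \qquad
v_{yy} = u_{xx}(\varphi(y))\,\varphi'(y)^2 + u_x(\varphi(y))\,\varphi''(y),

\mathcal{R}[u]
  = \frac{\int_0^1 (u_x)^2\,dx}{\int_0^1 u^2\,dx}
  = \frac{\int_0^1 (v_y)^2\,\varphi'(y)^{-1}\,dy}{\int_0^1 v^2\,\varphi'(y)\,dy}.
```

For an affine $\varphi$ the weight $\varphi'$ is constant and $\varphi'' = 0$, which recovers the simple scaling behaviour mentioned earlier.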

Comment by meditationatae — June 20, 2012 @ 6:46 am |

Some of the gory details of how to reformulate this problem under change of domain are on the wiki: http://michaelnielsen.org/polymath1/index.php?title=The_hot_spots_conjecture

Comment by Nilima Nigam — June 20, 2012 @ 2:15 pm

Correction to my latest comment: I wrote “second eigenfunction”, and following Terry Tao’s summary, “third eigenfunction” for the eigenfunction linked to […] seems like a better term.

Comment by meditationatae — June 18, 2012 @ 5:32 am |

I am slowly finishing the proof of simplicity. It may require a computer for some of the longest calculations; in particular, certain large polynomial inequalities in 2 variables are not easy to handle. I have implemented an algorithm for proving these, and I am attaching a Mathematica notebook and a pdf version of it. The algorithm can be found in Section 5 of http://www.ams.org/mathscinet-getitem?mr=MR2779073.

http://pages.uoregon.edu/siudeja/simple.nb

Click to access simple.nb.pdf

There are still 2 cases missing, but general idea should be clear. I have included comments in the notebook, so it should be relatively easy to read.

Comment by Bartlomiej Siudeja — June 18, 2012 @ 10:18 pm |

I have updated the files under above links. The proof is now complete. Eigenvalues are simple.

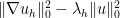

I have started working on the best possible lower bound for spectral gap for a given triangle. For now, I implemented an upper bound based on many eigenfunctions from known cases. There are lists of eigenfunctions of known cases sorted according to eigenvalues. There is also an upper bound based on a chosen number of eigenfunctions for each known case. There is certainly room for improvement, but the upper bound is already very accurate. There is however an issue of optimizing a linear combination of eigenfunctions. If I take 5 or 10 eigenfunctions per known case I get great results. For 15 per case, bound is actually worse, which means there are local minima.
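
To illustrate the general idea of an upper bound from trial functions, here is a toy one-dimensional sketch. It is not the method in the notebook: it uses the unit interval instead of a triangle, and a hypothetical one-parameter family $u(t) = t + a t^3$ with $t = x - 1/2$ (odd about the midpoint, hence mean zero) instead of eigenfunctions of known cases. Any mean-zero trial function gives a Rayleigh quotient at least as large as the second Neumann eigenvalue $\pi^2$, and tuning the parameter tightens the bound.

```python
import math

def rayleigh(a):
    """Exact Rayleigh quotient of u(t) = t + a*t**3, t = x - 1/2, on [0, 1]."""
    num = 1 + a / 2 + 9 * a**2 / 80      # integral of (1 + 3*a*t^2)^2 over [-1/2, 1/2]
    den = 1 / 12 + a / 40 + a**2 / 448   # integral of (t + a*t^3)^2 over [-1/2, 1/2]
    return num / den

# Crude grid search over the single parameter a in [-3, 0].
candidates = [-3 + 0.001 * k for k in range(3001)]
best_a = min(candidates, key=rayleigh)
upper_bound = rayleigh(best_a)
exact = math.pi ** 2                     # second Neumann eigenvalue of [0, 1]

print(rayleigh(0.0), upper_bound, exact)
```

With a single parameter the quotient is easy to minimise; the difficulty described above concerns many parameters at once, which this sketch does not attempt to reproduce.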

Just for comparison. For a triangle with vertices (0,0), (1,0), (0.501,0.85) (slightly off from equilateral) I am getting 17.62110831277495 as the upper bound. Using second order FEM with 262144 triangles I am getting 17.62110794. Nilima, could you compare this with your results?

Comment by Bartlomiej Siudeja — June 19, 2012 @ 6:26 pm |

sure, I can run this case. Do you mean the 2nd computed eigenvalue is 17.62110794? or the spectral gap?

Comment by Nilima Nigam — June 19, 2012 @ 6:39 pm |

The second eigenvalue. The third is 18.1342622359. So the gap is about 0.5 (numerically).
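
For reference, the arithmetic behind these figures can be checked with a few lines. This is only a sketch using the numbers quoted in this exchange; the variable names are illustrative.

```python
upper_bound = 17.62110831277495   # trial-function upper bound quoted above
fem_second = 17.62110794          # second eigenvalue, second-order FEM
fem_third = 18.1342622359         # third eigenvalue, same FEM run

# Numerical spectral gap between the third and second eigenvalues.
gap = fem_third - fem_second

# Relative difference between the upper bound and the FEM value.
rel_diff = (upper_bound - fem_second) / fem_second

print(f"gap = {gap:.6f}")
print(f"relative difference = {rel_diff:.2e}")
```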

Comment by Bartlomiej Siudeja — June 19, 2012 @ 6:41 pm |

I just ran it with P1 elements, 80358 nodes. Ev = 17.6212. If I use 320844 nodes, the ev = 17.62110632.

You’re using a higher-order element, so I think our results are comparable.

Comment by Nilima Nigam — June 19, 2012 @ 7:29 pm |

I have updated the files again. There is a lower bound for the gap at the end of the notebook. For any triangle I tried, it gives about 60% of the numerical gap. The problem is of course with the lower bound for the third eigenvalue. The upper bound for the second eigenvalue is very good, even with just 3 eigenfunctions per known case.

Comment by Bartlomiej Siudeja — June 20, 2012 @ 2:44 am |

60% is the worst case. For known cases it gives exact values.

Comment by Bartlomiej Siudeja — June 20, 2012 @ 3:48 am |

Hi Bartlomiej,

this is good news! I had a question: how much sharper are your bounds on the second eigenvalue, compared with those you’d get from the Poincare inequality here (the functions have mean zero, so one can get a pretty explicit bound). Maybe this is already in your posted notes?

Comment by Nilima Nigam — June 20, 2012 @ 3:45 pm |

Poincare inequality gives lower bound for the second eigenvalue, instead of upper. Optimal Poincare inequality for triangles gives lower bound in terms of just second equilateral eigenvalue. Same techniques as for lower bound in my notes, just less involved, work for Poincare.
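
In formulas, with notation assumed here for illustration ($D$ the triangle, $\mu_2$ its second Neumann eigenvalue, $C_P$ the best Poincare constant), the relation is:

```latex
\int_D u^2 \,dx \;\le\; C_P \int_D |\nabla u|^2 \,dx
\quad \text{for all } u \in H^1(D) \text{ with } \int_D u \,dx = 0,
\qquad C_P = \frac{1}{\mu_2}.
```

So any explicit upper bound on $C_P$ is a lower bound on $\mu_2$, while trial functions in the Rayleigh quotient give upper bounds on $\mu_2$.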

Comment by Bartlomiej Siudeja — June 20, 2012 @ 3:54 pm |

Yes, I was thinking of the lower bound. However, do the techniques in your notes give tighter lower bounds than those given by a Poincare inequality?

Comment by Nilima Nigam — June 20, 2012 @ 6:14 pm |

Together with Laugesen we used the same techniques to prove optimal Poincare inequality. However, here I had to push the method to its limits (more reference triangles). Bounds are also a bit different, since we are dealing with second+third eigenvalue.

Comment by Bartlomiej Siudeja — June 20, 2012 @ 7:23 pm |

I had a thought about a somewhat different tactic, which combines some of the ones discussed earlier. Let us consider the case of triangles with rational angles. These are dense in the set of all triangles, so if we can prove the Hot Spots conjecture for this case, it seems to me that we can easily make continuity arguments to the general case.

Now, consider the universal cover of such a triangle under the reflection group. First off, is it the case that this is an N-sheeted manifold, for some N a factor of the gcd of the denominators?

Second, we can consider the Brownian motion formulation pulled back to this cover, where each preimage of the triangle has a point heat source. Can’t we argue that, since the corners are effectively exposed to “multiple” neighborhoods (N * angle / […]), whereas every other point is (locally) exposed to a single neighborhood, the corners must be hot spots? (E.g. move the source towards a given corner and look at the long-term behavior.)

This argument will fail if N=1. But there are only 3 such cases (the equilateral, isosceles right, and 30-60-90 triangles), and all of them have the Hot Spots conjecture verified.

Comment by Craig H — June 19, 2012 @ 9:07 pm |

I think I may be off on my definition of N and my neighborhood counting — but if N is finite, it shouldn’t matter.

Comment by Craig H — June 19, 2012 @ 9:10 pm |

I don’t quite follow the argument that being exposed to multiple neighborhoods should make the corner points the hottest. For one thing, by continuity, the points slightly in from the corner will also be very hot despite only being locally exposed to their nearest neighbors.

The picture in my mind of this manifold is that it looks something like a spiral staircase near the corner. The center of this staircase might seem the hottest, but then when you “fold up the triangles” to convert the heat flow on the manifold back to the heat flow on the triangle, every point in the triangle ultimately has many points that map to it.