This is a continuation of Research Thread II of the “Finding primes” polymath project, which is now full. It seems that we are facing particular difficulty in breaching the square root barrier; in particular, the following problems remain open:

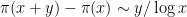

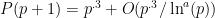

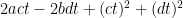

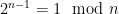

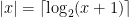

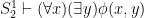

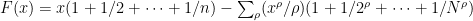

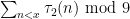

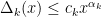

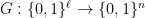

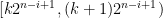

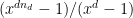

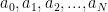

- Can we deterministically find a prime of size at least n in $o(\sqrt{n})$ time (assuming hypotheses such as RH)? Assume one has access to a factoring oracle.

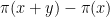

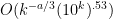

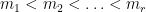

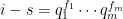

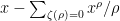

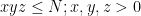

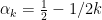

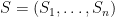

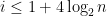

- Can we deterministically find a prime of size at least n in $O(n^{1/2+o(1)})$ time unconditionally (in particular, without RH)? Assume one has access to a factoring oracle.

We are still in the process of weighing several competing strategies to solve these and related problems. Some of these have been effectively eliminated, but a number of strategies are still viable, and I will attempt to list them below. (The list may be incomplete, and of course totally new strategies may also emerge. Please feel free to elaborate on or extend this list in the comments.)

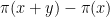

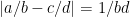

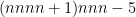

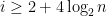

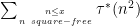

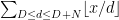

Strategy A: Find a short interval [x,x+y] such that $\pi(x+y) - \pi(x) > 0$, where $\pi(x)$ is the number of primes less than x, by using information about the zeroes $\rho$ of the Riemann zeta function.

Comment: it may help to assume a Siegel zero (or, at the other extreme, to assume RH).

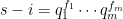

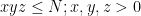

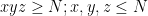

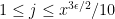

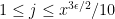

Strategy B: Assume that an interval [n,n+a] consists entirely of u-smooth numbers (i.e. no prime factors greater than u) and somehow arrive at a contradiction. (To break the square root barrier, we need $a = o(\sqrt{n})$, and to stop the factoring oracle from being ridiculously overpowered, n should be of subexponential size in u.)

Comment: in this scenario, we will have n/p close to an integer for many primes between and u, and n/p far from an integer for all primes larger than u.

Strategy C: Solve the following toy problem: given n and u, what is the distance to the closest integer to n which contains a factor comparable to u (e.g. in [u,2u])? [Ideally, we want a prime factor here, but even the problem of getting an integer factor is not fully understood yet.] Beating

here is analogous to breaking the square root barrier in the primes problem.

Comments:

- The trivial bound is u/2 – just move to the nearest multiple of u to n. This bound can be attained for really large n, e.g.

. But it seems we can do better for small n.

- For

, one trivially does not have to move at all.

- For

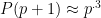

, one has an upper bound of

, by noting that having a factor comparable to u is equivalent to having a factor comparable to n/u.

- For $u \approx \sqrt{n}$, one has an upper bound of $O(n^{1/4})$, by taking $a^2$ to be the first square larger than n, $b^2$ to be the closest square to $a^2 - n$, and noting that $a^2 - b^2 = (a-b)(a+b)$ has a factor comparable to u and is within $O(n^{1/4})$ of n. (This paper improves this bound, conditional on a strong exponential sum estimate.)

- For n=poly(u), it may be possible to take a dynamical systems approach, writing n in base u, incrementing or decrementing u, and hoping for some equidistribution. Some sort of “smart” modification of u may also be effective.

- There is a large paper by Ford devoted to this sort of question.
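The difference-of-squares construction for $u \approx \sqrt{n}$ (take $a^2$ the first square above n, then $b^2$ the square closest to $a^2 - n$), together with a brute-force answer to the toy problem, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not an efficient algorithm: the helper names are hypothetical, "comparable to u" is taken to mean a divisor in [u, 2u] as in the statement of Strategy C, and the brute force is only feasible for small u.

```python
import math


def has_factor_near(m: int, u: int) -> bool:
    """Brute force: does m have a divisor d with u <= d <= 2u?"""
    return any(m % d == 0 for d in range(u, 2 * u + 1))


def toy_distance(n: int, u: int) -> int:
    """Distance from n to the closest positive integer with a divisor in [u, 2u].

    For n >= u the nearest multiple of u is at most u // 2 away (the trivial
    bound), so the loop stops by then.
    """
    j = 0
    while True:
        if has_factor_near(n + j, u) or (n - j > 0 and has_factor_near(n - j, u)):
            return j
        j += 1


def difference_of_squares(n: int) -> tuple[int, int, int]:
    """Return (N, a - b, a + b) with N = a^2 - b^2 close to n.

    a^2 is the first square larger than n and b^2 is the square closest to
    m = a^2 - n, so |N - n| <= isqrt(m) = O(n^{1/4}); both factors are ~ sqrt(n).
    """
    a = math.isqrt(n) + 1
    m = a * a - n                      # 1 <= m <= 2a - 1
    r = math.isqrt(m)
    b = r if m - r * r <= (r + 1) ** 2 - m else r + 1
    N = a * a - b * b
    return N, a - b, a + b


n = 10**6 + 17
N, lo, hi = difference_of_squares(n)
print(N, lo, hi, abs(N - n))          # 999976 956 1046 41
```

Here the factor 956 lies in [950, 1900], so for u = 950 the construction moves only 41 away from n, well inside the trivial bound of 475.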

Strategy D. Find special sequences of integers that are known to have special types of prime factors, or are known to have unusually high densities of primes.

Comment. There are only a handful of explicitly computable sparse sequences that are known unconditionally to capture infinitely many primes.

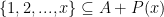

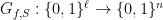

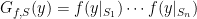

Strategy E. Find efficient deterministic algorithms for finding various types of “pseudoprimes” – numbers which obey some of the properties of being prime, e.g. $2^{n-1} \equiv 1 \pmod n$. (For this discussion, we will consider primes as a special case of pseudoprimes.)

Comment. For the specific problem of solving $2^{n-1} \equiv 1 \pmod n$ there is an elementary observation that if n obeys this property, then $2^n - 1$ does also, which solves this particular problem; but this does not indicate how to, for instance, have $2^{n-1} \equiv 1 \pmod n$ and $3^{n-1} \equiv 1 \pmod n$ obeyed simultaneously.
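The observation that the base-2 Fermat condition passes from n to $2^n - 1$ can be checked numerically. The reason it holds: if $2^{n-1} \equiv 1 \pmod n$ with n odd, then $2n$ divides $2^n - 2$, and since $2^n \equiv 1 \pmod{2^n - 1}$ this gives $2^{(2^n-1)-1} \equiv 1 \pmod{2^n-1}$. A minimal Python sketch (function and variable names are illustrative), using a few small known base-2 pseudoprimes as seeds:

```python
def passes_fermat(n: int, base: int) -> bool:
    """Fermat condition base^(n-1) == 1 (mod n)."""
    return pow(base, n - 1, n) == 1


# Small composite n with 2^(n-1) == 1 (mod n), i.e. base-2 pseudoprimes.
seeds = [341, 561, 645, 1105]

for n in seeds:
    m = 2**n - 1                        # composite whenever n is composite
    assert passes_fermat(n, 2)
    # 2^n == 1 (mod m) and 2n divides m - 1, so 2^(m-1) == 1 (mod m).
    assert passes_fermat(m, 2)

# 341 = 11 * 31, and 2^11 - 1 divides 2^341 - 1, so the new number is composite too.
assert (2**341 - 1) % (2**11 - 1) == 0
# ...but 341 fails the base-3 condition, so one base at a time is not enough.
print(passes_fermat(341, 3))          # False
```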

As always, oversight of this research thread is conducted at the discussion thread, and any references and detailed computations should be placed at the wiki.

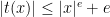

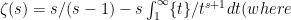

About the zeta-function strategy, one thing I have just remembered (but which may have been in the back of my mind) is that Selberg already proved in 1943 that, on the Riemann Hypothesis, one has $\pi(x+y(x)) - \pi(x) \sim y(x)/\log x$ for almost all $x$, for any function $y(x)$ growing, roughly, faster than $(\log x)^2$.

The paper starts on page 160 of the first volume of Selberg’s collected works; most of it is available on Google books here.

Comment by Emmanuel Kowalski — August 13, 2009 @ 7:35 pm |

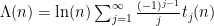

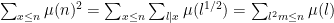

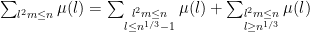

I thought I might mention some heuristics that allow one to guess results such as Selberg’s result without having to do all the formal computations. As mentioned in earlier posts, we have the explicit (though not absolutely convergent) formula

$$\psi(x) = x - \sum_\rho \frac{x^\rho}{\rho} + \ldots$$

where the $\ldots$ are relatively uninteresting terms. If one introduces a spatial uncertainty of A (where $1 \le A \le x$), one can truncate the sum over zeroes to those zeroes with height O(x/A), at the cost of introducing an error of size O(A); this is basically because $x^\rho/\rho$ oscillates more than once over the interval $[x,x+A]$ when $|\mathrm{Im}\,\rho| \gg x/A$, and thus (morally) cancels itself out when averaging over such an interval. Heuristically, this gives us something like

$$\psi(x+A) - \psi(x) \approx A - \sum_{|\mathrm{Im}\,\rho| \ll x/A} \frac{(x+A)^\rho - x^\rho}{\rho}.$$

Assuming RH and writing $\rho = \frac{1}{2} + i\gamma$, one gets heuristically

$$\psi(x+A) - \psi(x) \approx A - A x^{-1/2} \sum_{|\gamma| \ll x/A} x^{i\gamma}.$$

The number of zeroes with imaginary part at most T is about $\frac{T}{2\pi}\log T$, so there are about $\frac{x}{A}\log x$ zeroes in play here. Pretending the exponential sum behaves randomly (which can be justified in an $L^2$ sense by standard almost orthogonality methods), the sum is expected to be about $\sqrt{\frac{x}{A}\log x}$ on the average, and the main term dominates the error term once $A \gg \log x$. This is a logarithm better than Selberg's result; I think this comes from the fact that we are looking at sharply truncated sums $\psi(x+A) - \psi(x)$ rather than smoothed out counterparts, and the above heuristics have to be weakened to reflect this.
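A small numerical illustration of the "pretend the sum is random" step, assuming independent uniform phases (a modelling assumption, not a property of the actual zeros): a sum of K unit vectors with random phases has root-mean-square size about $\sqrt{K}$. Plugging $K \approx \frac{x}{A}\log x$ into $A x^{-1/2}\sqrt{K}$ gives $\sqrt{A\log x}$, which is smaller than the main term A once $A \gg \log x$, while the trivial worst-case bound $A x^{-1/2} K$ is $x^{1/2}\log x$. The function names below are hypothetical.

```python
import cmath
import math
import random


def random_phase_rms(num_terms: int, trials: int, seed: int = 0) -> float:
    """RMS of |sum_{k<num_terms} e^{i theta_k}| with i.i.d. uniform phases theta_k."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        s = sum(cmath.exp(2j * math.pi * rng.random()) for _ in range(num_terms))
        total += abs(s) ** 2
    return math.sqrt(total / trials)


def heuristic_error_sizes(x: float, A: float) -> tuple[float, float, float]:
    """Return (number of zeros in play, random-sum error, worst-case error)."""
    zeros = (x / A) * math.log(x)                  # ~ (x/A) log x zeros with |gamma| << x/A
    random_error = A * x**-0.5 * math.sqrt(zeros)  # = sqrt(A log x)
    worst_error = A * x**-0.5 * zeros              # = sqrt(x) log x
    return zeros, random_error, worst_error


print(random_phase_rms(200, 1000) / math.sqrt(200))   # close to 1
x = 1e12
for A in (10.0, 1e3, 1e5):
    _, err, worst = heuristic_error_sizes(x, A)
    print(A, err, worst)   # err < A once A >> log x (about 27.6 here)
```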

The same type of analysis, by the way, gives agreement with the Poisson statistics for $\pi(x+A) - \pi(x)$ for $A \asymp \log x$ at the second moment level, assuming GUE. It also explains why we start getting good worst-case bounds once $A \gg x^{1/2}\log x$.

Unfortunately, average case results don't seem to be of direct use to our problem here due to the generic prime issue (or Gil's razor). If we had a way of efficiently computing or estimating this random sum $\sum_{|\gamma| \ll x/A} x^{i\gamma}$ for some deterministically obtainable x, we might get somewhere…

Comment by Terence Tao — August 13, 2009 @ 8:07 pm |

I agree that as a straightforward analytic computation, this is unlikely to work. The “long shot” hope is to really have a real algorithmic part of the argument (i.e., some procedure with “if … then … else …” steps) to locate a good place to look for primes between  and  ; such procedures are essentially distinct from purely analytic arguments and could possibly go beyond the average/genericity issue.

Comment by Emmanuel Kowalski — August 13, 2009 @ 11:58 pm |

I submitted a proposal that the prime counting function is given by:

N=sum( arctan(tan(Pi*Bernoulli(2*n)*GAMMA(2*n+1))) *(2*n+1))/Pi, n=3..M), where M=m/2 for m even and M=m/2-1/2 for m odd. This formula yields the exact number of primes less than m. It was derived from the Riemann hypothesis and a solution that I submitted that was never looked at. If this formula is right, then I have solved Riemann’s hypothesis! I invite a review of my paper.

Comment by Michael Anthony — March 14, 2010 @ 12:26 pm |

Note also that the above prime counting formula is also given in log form as:

N= sum(i(n+1/2)*log( (1-i*tan(Pi*bernoulli(2*n)*gamma(2*n))/(1-i*tan(Pi*Bernoulli(2*n)*GAMMA(2*n+1))/Pi , n=3..m); which for large n reduces to the Prime Number theorem.

Comment by Michael Anthony — March 14, 2010 @ 12:32 pm |

Let me stick my neck out and follow up on the objection to my post #45. It’s true that without better control of the heuristic  the argument is in trouble. However, I think that assuming  is a bit beyond worst-case. As long as the quantity  in the Erdős-type theorem is strictly decreasing for  in the range  (which I think follows from the Baker-Harman methods), then we should be able to assume that  . Of course, we’d need something more quantitative than this to be useful.

Let’s be very optimistic for a second. If we could get down to  (that is to say, if we could get the error in the estimate  to be slightly worse than a square root), then I think the procedure works as stated without enlarging any of the search intervals. The reason for this is that the search space resulting from not knowing where in the interval $[x,x+cx^{.53}]$ the large prime ( ) we are looking for is, and the search space resulting from not knowing where in the interval $[p^{.3},p^{.3}+p^{.3\times.53}]$ the small prime ( ) is, would entirely overlap. Of course, as the error estimate worsens from  towards  , the overall performance decreases towards the  .

However, I think it would be possible to get some mileage out of even a very weak error estimate. Say we could get  (which is probably the most likely estimate we would be able to obtain, if we could get anything at all). Then I think this method should get some sort of logarithmic improvement over the prime gap results, such as moving us down to around  . For large enough  this might be able to move us towards the ultra-weak conjecture on RH prime gap result.

Of course, all of this assumes we could get some sort of control on the error in the approximation  , and the pay-off would most likely be very small.

Comment by Mark Lewko — August 13, 2009 @ 8:21 pm |

Admittedly, this is just saying that a favorable estimate in some other problem would translate into a favorable estimate for our problem. Of course, we are not short of “other problems” of this form.

Comment by Mark Lewko — August 13, 2009 @ 9:22 pm |

Here is a suggestion on how to combine strategy A with strategy D, following Harald Helfgott’s suggestion, to attack the  problem (problem 1 above): instead of using the Riemann zeta function, one can use the zeta function associated to the number ring $\mathbb{Z}[i]$, say, to count those primes $p$ that are a sum of two squares. Assuming the RH for this zeta function (and assuming that it doesn’t have appreciably more zeros than the usual zeta function; this would need to be looked up), we should be able to say that every interval $[n, n+\sqrt{n}\log n]$, say, contains the “right number” of primes that are $\equiv 1 \pmod 4$ (and I suppose one can also use the usual Dirichlet L-functions for this task… so we might only need to assume GRH, not ERH).

But now these primes are the sum of two squares, and there are only $O(x/\sqrt{\log x})$ numbers up to $x$ that are a sum of two squares. Assuming that it is easy to “walk through” only those numbers (that are a sum of two squares) in our interval $[n, n+\sqrt{n}\log n]$, it seems to me that this shaves a factor of $\sqrt{\log n}$ off the running time to locate a prime (but maybe this can also be achieved by using a sieve on small primes for $[n, n+\sqrt{n}\log n]$ to begin with, i.e. maybe an easier method will do).

But that was just what we got with the form $a^2+b^2$. The same trick should work with any norm form, though I doubt one could get any better than a  factor improvement in the running time this way. Anyways, it was just a thought…

Comment by Ernie Croot — August 13, 2009 @ 8:35 pm |

The question of quickly walking through (enumerating) the numbers represented by $x^2+y^2$, without repetition, seems quite difficult to me. The characterizations of these numbers which I know are all based on properties of the factorization of the integer (all odd prime divisors which are congruent to -1 modulo 4 must appear with even multiplicity). As a toy problem, is there a quick way to check that a number congruent to 1 modulo 4 is not divisible by two distinct primes congruent to -1 modulo 4?
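To make the factorization-based characterization concrete, the sketch below decides whether n is a sum of two squares from its factorization, with naive trial division standing in for the factoring oracle (an assumption; any factoring routine would do), and cross-checks it against a direct search over a. It also includes a naive upward scan for the next sum of two squares above a given integer, which is only a slow baseline. All names are hypothetical.

```python
import math


def factorize(n: int) -> dict[int, int]:
    """Trial-division factorization (a slow stand-in for a factoring oracle)."""
    factors: dict[int, int] = {}
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors[d] = factors.get(d, 0) + 1
            n //= d
        d += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


def is_sum_of_two_squares(n: int) -> bool:
    """n >= 0 is a^2 + b^2 iff every prime p = 3 (mod 4) divides n to an even power."""
    if n == 0:
        return True
    return all(e % 2 == 0 for p, e in factorize(n).items() if p % 4 == 3)


def is_sum_of_two_squares_direct(n: int) -> bool:
    """Direct O(sqrt n) search over a, checking whether n - a^2 is a square."""
    return any(math.isqrt(n - a * a) ** 2 == n - a * a for a in range(math.isqrt(n) + 1))


def next_sum_of_two_squares(x: int) -> int:
    """Smallest integer > x of the form a^2 + b^2, by a naive upward scan."""
    m = x + 1
    while not is_sum_of_two_squares(m):
        m += 1
    return m


print(next_sum_of_two_squares(126))   # 128 = 8^2 + 8^2 (127 is prime and 3 mod 4)
```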

Comment by Emmanuel Kowalski — August 13, 2009 @ 11:55 pm |

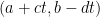

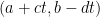

Yes, I guess you are right. There might still be something that can be salvaged, though. For example, there are formulas for the smaller of $a$ or $b$ such that $a^2+b^2 = p$, for a prime $p \equiv 1 \pmod 4$ (though the formulas are too unwieldy for an algorithm), and there is a dynamical systems approach due to Roger Heath-Brown that identifies the fixed point of the system with  that might be useful (I doubt it). I seem to recall also that there is a standard way to find $a$ and $b$ using continued fractions, though I can’t remember just now how that algorithm works. In any case, none of these ideas seem to work (if one did, it would probably just be a reworking of the Euclidean algorithm, which is all continued fractions really are).
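One standard version of the continued-fraction method is the Hermite–Serret procedure, a special case of Cornacchia's algorithm (the comment itself does not name a specific algorithm): run the Euclidean algorithm on p and a square root x of -1 mod p, and stop at the first remainder below $\sqrt{p}$. A minimal sketch, assuming p is a prime congruent to 1 mod 4. Note that the search for a quadratic non-residue is a plain linear scan, which is exactly the kind of step that is only known to be fast deterministically under GRH.

```python
import math


def two_squares(p: int) -> tuple[int, int]:
    """Return (a, b) with a <= b and a^2 + b^2 = p, for a prime p = 1 (mod 4)."""
    if p % 4 != 1:
        raise ValueError("p must be 1 mod 4")
    # Find a quadratic non-residue c by linear search (Euler's criterion).
    c = 2
    while pow(c, (p - 1) // 2, p) != p - 1:
        c += 1
        if c >= p:
            raise ValueError("no quadratic non-residue found; p is not prime")
    x = pow(c, (p - 1) // 4, p)        # x^2 = -1 (mod p)
    # Euclidean algorithm on (p, x); stop at the first remainder below sqrt(p).
    r0, r1 = p, x
    limit = math.isqrt(p)
    while r1 > limit:
        r0, r1 = r1, r0 % r1
    a = r1
    b = math.isqrt(p - a * a)
    if a * a + b * b != p:
        raise ValueError("p is not a prime congruent to 1 mod 4")
    return min(a, b), max(a, b)


print(two_squares(13), two_squares(29))   # (2, 3) (2, 5)
```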

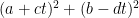

Maybe one can look at sequences of Farey fractions somehow. For example, say we want a sum of squares that comes close to  ; in fact, let’s say we want a whole lot of them that we can find quickly. Maybe what would be good is to have them take the form  for some integer  (this, of course, won’t cover all close sums of squares, but it is a start). Now, the difference between this and  is something like  . And, of course, if we choose  to be a close rational approximation to  , but where, say,  are much smaller than  , then this difference will be small. In fact, say  , with  . Then, the difference between the two sums-of-squares will be  , which is quite small for  small. And then maybe one can glue together a bunch of these arithmetic progressions  , so as to cover the whole interval $[x, x + \sqrt{x}\log x]$. Hmmm… I guess these APs could still overlap a lot; it would be good if we could somehow pick the APs to be as disjoint as possible (I have a paper on this sort of thing, picking lots of disjoint APs, but it seems completely irrelevant to this problem).

Ok, it's starting to look like this approach (combining A and D) won't work in the way I laid out. I think it would be interesting if there were a way to do it, though.

Comment by Ernie Croot — August 14, 2009 @ 12:42 am |

Looking again at what I wrote here, I see that I didn’t express myself clearly. Let me try again: in the first paragraph, all I am saying is that if we knew what the  ’s are such that  sums to a prime in the interval under consideration, we could search only along these and avoid the problem of repeat representations.

And in the second paragraph all I am trying to say is that maybe what we can do is partition the set of numbers in our interval that are a sum of two squares into families, where the numbers within each family are automatically distinct (the numbers of the form  are all distinct for fixed a,b,c,d where t varies). Of course, we need that the families themselves don't overlap, and that the arithmetic progressions  used to define the families also don't overlap; this is obviously the hard part.

Comment by Ernie Croot — August 14, 2009 @ 9:51 pm |

I don’t get this. Surely running through the numbers of the form x^2 + y^2 in an interval roughly of the form [n,n+sqrt(n)] is an easy task, and surely the time taken should be roughly O(sqrt(n))? This is all that is being required in this argument, it seems to me. What would be hard would be to pick a number of the form x^2+y^2 uniformly and at random (i.e., without multiplicities).

Comment by H — August 14, 2009 @ 2:34 am |

Actually, you do seem to lose the factor of O(sqrt(log N)) in running time that you intended to win by proceeding in this way. The problem is that you’ll end up producing most of the non-primes of the form x^2+y^2 many times over – once for each time the form represents the non-prime. I don’t see a way to avoid this (though obviously one needs to check for primality only once).
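The multiplicity issue is easy to see experimentally. The sketch below (Python; names are hypothetical) walks through all pairs $0 \le b \le a$ with $a^2+b^2$ in a given interval, row by row in b, and counts how often each value is produced. Values with more than one representation are exactly the repeats that an enumeration over pairs cannot avoid.

```python
import math
from collections import Counter


def ceil_isqrt(m: int) -> int:
    """Smallest integer t >= 0 with t * t >= m."""
    return 0 if m <= 0 else math.isqrt(m - 1) + 1


def pairs_in_interval(lo: int, hi: int) -> Counter:
    """Count representations n = a^2 + b^2 with 0 <= b <= a, for lo <= n <= hi."""
    counts: Counter = Counter()
    for b in range(math.isqrt(hi // 2) + 1):      # b <= a forces 2 b^2 <= hi
        a_min = max(b, ceil_isqrt(lo - b * b))
        a_max = math.isqrt(hi - b * b)
        for a in range(a_min, a_max + 1):
            counts[a * a + b * b] += 1
    return counts


counts = pairs_in_interval(100, 130)
print(sorted(n for n, k in counts.items() if k > 1))   # [100, 125, 130]
```

For example 125 is produced twice, as $11^2+2^2$ and $10^2+5^2$, while the primes 101, 109 and 113 in this window are produced once each.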

Comment by HH — August 14, 2009 @ 3:34 am |

I guess what I had in mind really was the enumeration problem as follows: given $x$, find the smallest integer larger than $x$ which is of the form $a^2+b^2$. How fast can this be done deterministically?

In some ways, the numbers $a^2+b^2$, when multiplicity of representation is forbidden, are worse than the prime numbers. For instance, their associated zeta function is roughly controlled by the square root of the zeta function (of $\mathbb{Q}(i)$), so it has singularities even at the zeros of $\zeta_{\mathbb{Q}(i)}$.

(In the other direction, sometimes those numbers are better; e.g., it is possible to get the asymptotic formula for their counting function using pure sieve, as done first by Iwaniec, I think — the asymptotic itself was first proved by Landau using analytic methods).

Comment by Emmanuel Kowalski — August 14, 2009 @ 3:12 pm |

This looks like a very nice problem. Can it be done fast with a randomized algorithm (without a factoring oracle)? Also, why do things become easier when multiplicity of representation is not forbidden?

Comment by Gil Kalai — August 14, 2009 @ 3:22 pm |

I don’t know any randomized algorithm here (but maybe randomized algorithms are not very good for this type of problem where one asks for a very specific answer? for finding a number of this type in a longish interval, on the other hand, one could simply look randomly for a prime congruent to 1 modulo 4).

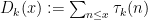

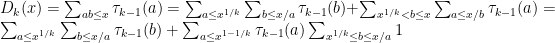

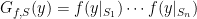

Analytically, summing any smooth enough function $f$ over integers $a^2+b^2$, if multiplicity is allowed, can be written as

$$\sum_{a,b \in \mathbb{Z}} f(a^2+b^2),$$

and because this is a double sum with two free variables of a smooth function one can apply, for instance, Poisson summation, or many other tools.

Getting rid of the multiplicity means, in this language, inserting a term $1/r(a^2+b^2)$, where $r(n)$ is the multiplicity:

$$\sum_{a,b \in \mathbb{Z}} \frac{f(a^2+b^2)}{r(a^2+b^2)}.$$

Because the function $r$ is complicated arithmetically (it depends on the prime factorization of the argument), this is not a nice smooth summation anymore.

For instance, I don’t think there exists a proof of the counting of those numbers (the case where $f$ is the characteristic function of an interval $[1,N]$) which doesn’t use multiplicative methods (zeta functions or sieve).

Comment by Emmanuel Kowalski — August 14, 2009 @ 6:06 pm |

I wonder what is known about the complexity of deciding if a number is the sum of two squares. Is it as hard as factoring? Also, what is known about the distribution of gaps between numbers which are sums of two squares?

Comment by Gil Kalai — August 15, 2009 @ 7:55 pm |

I’m not sure if this exactly answers your question, but there is guaranteed to be a number representable as the sum of two squares in the interval $[x, x+5x^{1/4}]$. I don’t have a reference for a proof of this, but it is stated (and attributed to Littlewood) in connection with open problem 64 in Montgomery’s Ten Lectures on the Interface Between Analytic Number Theory and Harmonic Analysis.

Comment by Mark Lewko — August 15, 2009 @ 8:44 pm |

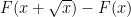

I think this fact can be established by a simple greedy algorithm argument: if one lets $a^2$ be the largest square less than x, then $a^2$ is within $2\sqrt{x}$ or so of x; if one then lets $b^2$ be the first square larger than $x - a^2$, then $b^2$ should be within about $2\sqrt{2}x^{1/4}$ or so of $x - a^2$, so $a^2+b^2$ is a sum of two squares in $[x,x+5x^{1/4}]$. (A very similar argument, regarding differences of two squares rather than sums, is sketched in Comment 4 after Strategy C in the post.)

It might be of interest to see if there is some way to narrow this interval; the techniques for doing so might be helpful for us.
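The greedy argument translates directly into a few lines of Python. This is a sketch (the function name is hypothetical); "first square larger than" is read here as "first square at least", so that the output lands in $[x, x+5x^{1/4}]$ and the case where $x - a^2$ is already a square is covered. Since $x - a^2 \le 2a + 1$ and $b^2$ overshoots $x - a^2$ by less than $2b$, the excess is at most about $2\sqrt{2}x^{1/4}$.

```python
import math


def greedy_sum_of_two_squares(x: int) -> tuple[int, int]:
    """Greedy choice of (a, b) with x <= a^2 + b^2 <= x + 5 x^(1/4), for x >= 1."""
    a = math.isqrt(x - 1)          # a^2 is the largest square less than x
    m = x - a * a                  # 1 <= m <= 2a + 1, i.e. O(sqrt(x))
    b = math.isqrt(m - 1) + 1      # b^2 is the first square >= m
    return a, b


for x in (10**6 + 3, 10**12 + 7):
    a, b = greedy_sum_of_two_squares(x)
    print(x, a * a + b * b - x, 5 * x**0.25)
```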

Comment by Terence Tao — August 15, 2009 @ 8:52 pm

That is, in fact, open problem 64 (to establish this with $[x,x+o(x^{1/4})]$).

Comment by Mark Lewko — August 15, 2009 @ 9:03 pm

but regardless of what can be proved, what is the “truth” regarding the density and the distribution of gaps between integers which are the sum of two squares?

Comment by Gil Kalai — August 15, 2009 @ 9:29 pm

Halberstam, in a paper in Math. Mag. 56 (1983), gives an elementary argument of Bambah and Chowla that replaces $5x^{1/4}$ with $2\sqrt{2}x^{1/4}$. I think this is also discussed in one of the early chapters of the book of Montgomery and Vaughan, but I don’t have it available to check. My memory is that they say that nothing better is known. (Halberstam says so too, but that was 20 years earlier.)

In the opposite direction, Richards (Adv. in Math. 46, 1982) proves that the gaps $s_{n+1} - s_n$ between consecutive sums of two squares $s_n$ are infinitely often at least $(\frac{1}{4} - \varepsilon)\log s_n$, where $\varepsilon > 0$ is arbitrarily small.

The average gap is of size $\asymp \sqrt{\log x}$. I don’t know if there are analogues of the conjectured Poisson distributions of the numbers of primes in intervals of logarithmic length, though that might be something fairly reasonable to expect.

Comment by Emmanuel Kowalski — August 15, 2009 @ 9:54 pm

The greedy strategy argument of Bambah and Chowla shows that every interval $(x-2\sqrt{2}x^{1/4},x]$ contains a sum of two squares, for  . Nothing better is known even today (the paper of Bambah and Chowla is from around 1945). Not only Littlewood, but also Chowla, stated the improvement to $o(x^{1/4})$ as a research problem.

Comment by Anon — August 16, 2009 @ 4:59 am

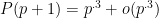

There is another old construction of pseudoprimes due to Cipolla (1904). Let $F_m = 2^{2^m}+1$ be the m-th Fermat number. Note that $F_m$ satisfies $2^{2^{m+1}} \equiv 1 \pmod{F_m}$. Now take any sequence $m_1 < m_2 < \cdots < m_k$ with $k \ge 2$. Then $F_{m_1} F_{m_2} \cdots F_{m_k}$ is a pseudoprime if and only if $2^{m_1} > m_k$.

Unfortunately, it seems that it doesn't give a denser set of pseudoprimes. One can see this by approximating $F_m$ by $2^{2^m}$ and by taking logs. And of course, we would like to construct pseudoprimes with as few prime factors as possible.
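Cipolla's criterion can be checked by brute force for small indices. The reason it holds: $2$ has order $2^{m+1}$ modulo $F_m$, and $N - 1$ is divisible by exactly $2^{2^{m_1}}$ when $N = F_{m_1}\cdots F_{m_k}$, so $2^{N-1} \equiv 1 \pmod N$ exactly when $m_k + 1 \le 2^{m_1}$. The sketch below (Python; names hypothetical) forms every product with $0 \le m_1 < \dots < m_k \le 5$ and $k \ge 2$, and compares the base-2 Fermat condition with $2^{m_1} > m_k$.

```python
from itertools import combinations
from math import prod


def fermat_number(m: int) -> int:
    return 2 ** (2**m) + 1


def is_base2_fermat(n: int) -> bool:
    return pow(2, n - 1, n) == 1


def check_cipolla(max_index: int = 5) -> list[tuple[int, ...]]:
    """Return the index tuples whose product passes the base-2 Fermat test,
    asserting along the way that this happens exactly when 2^(m_1) > m_k."""
    passing = []
    for k in range(2, max_index + 2):
        for ms in combinations(range(max_index + 1), k):
            n = prod(fermat_number(m) for m in ms)
            ok = is_base2_fermat(n)
            assert ok == (2 ** ms[0] > ms[-1]), ms
            if ok:
                passing.append(ms)
    return passing


print(check_cipolla()[:3])   # [(2, 3), (3, 4), (3, 5)]
```

The smallest example is $F_2 F_3 = 17 \cdot 257 = 4369$.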

Comment by François Brunault — August 13, 2009 @ 11:27 pm |

If a composite number passes the Fermat primality test for every base coprime to it, it is called a Carmichael number. The number 1729, which is also the smallest number that is the sum of two positive cubes in two different ways, is a Carmichael number. There are infinitely many of them, and for sufficiently large n there are at least n^(2/7) of them between 1 and n.

if each factor of (6k+1)(12k+1)(18k+1) is prime then their product is a Carmichael number. More on these numbers are at

http://en.wikipedia.org/wiki/Carmichael_number

One way to find a composite $x$ simultaneously satisfying $2^{x-1} \equiv 1 \pmod x$ and $3^{x-1} \equiv 1 \pmod x$ would be to try to find a triple of primes $6k+1$, $12k+1$, $18k+1$ as above. If the primes were randomly distributed with density $1/\log x$, then about $(\log x)^3$ trials ought to do it, so possibly this would give an algorithm that finds one such $k$-digit number in time polynomial in $k$, if the AKS test were used to test primality.
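A minimal sketch of this search, assuming trial division is an acceptable stand-in for AKS at these tiny sizes. It scans $k$, keeps the values where $6k+1$, $12k+1$, $18k+1$ are all prime, and confirms the product with Korselt’s criterion ($n$ squarefree and $p-1 \mid n-1$ for every prime $p \mid n$). All names are hypothetical.

```python
def is_prime(n: int) -> bool:
    """Trial division; stands in for AKS at these small sizes."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def chernick_numbers(k_max: int):
    """Yield (k, n) with n = (6k+1)(12k+1)(18k+1) and all three factors prime."""
    for k in range(1, k_max + 1):
        p, q, r = 6 * k + 1, 12 * k + 1, 18 * k + 1
        if is_prime(p) and is_prime(q) and is_prime(r):
            yield k, p * q * r


def korselt(n: int, factors) -> bool:
    """Korselt's criterion for squarefree n with the given distinct prime factors."""
    return all((n - 1) % (p - 1) == 0 for p in factors)


for k, n in chernick_numbers(100):
    assert korselt(n, (6 * k + 1, 12 * k + 1, 18 * k + 1))
    # Composite, yet passes the Fermat test in bases 2 and 3
    # (3 is coprime to n because every factor is 1 mod 6).
    assert pow(2, n - 1, n) == 1 and pow(3, n - 1, n) == 1
```

The first two hits are $k = 1$ (giving 1729) and $k = 6$ (giving $37 \cdot 73 \cdot 109 = 294409$).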

Comment by Kristal Cantwell — August 13, 2009 @ 11:39 pm |

Of course, the smallest Carmichael number is 561, so the search for $k$-digit numbers would have to start with $k = 3$.

Comment by Kristal Cantwell — August 14, 2009 @ 4:40 pm |

This and related ideas are also discussed in the following paper.

A. Granville and C. Pomerance, Two contradictory conjectures concerning Carmichael numbers, Math. Comp., 71 (2001), 883-908.

Granville and Pomerance list essentially all known and conjectured density estimates related to this, as well as some experimental evidence.

Comment by François Dorais — August 14, 2009 @ 6:08 pm |

I have a url for this:

paper125.pdf

Comment by Kristal Cantwell — August 14, 2009 @ 6:19 pm |

[…] https://polymathprojects.org/2009/08/13/research-thread-iii-determinstic-way-to-find-primes/ […]

Pingback by Polymath4 « Euclidean Ramsey Theory — August 13, 2009 @ 11:56 pm |

I started thinking about whether proof-theoretic methods could shed some new light on this problem. I was originally aiming for negative results, which is where proof theory usually excels, but while doing some preliminary investigations, I found the following wonderful paper:

J. B. Paris, A. J. Wilkie and A. R. Woods, Provability of the pigeonhole principle and the existence of infinitely many primes, J. Symbolic Logic 53 (1988), 1235-1244.

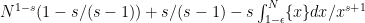

In this paper, the authors show that the infinitude of primes is provable in $I\Delta_0 + \Omega_1$. A standard formulation of this system consists of the usual defining equations for the basic arithmetic operations, as well as the functions $|x|$ and $x \# y$ (this is the $\Omega_1$ part), together with induction for bounded formulas (this is the $I\Delta_0$ part).

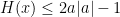

Their proof is rather clever. The authors show that if there is no prime in $[a,a^{11}]$, then there is a bounded formula that describes the graph of an injection from $[0,9a|a|]$ to $[0,8a|a|]$, thereby violating a very weak form of the Pigeonhole Principle (PHP). Before that, they show that for every standard positive integer $n$ and every bounded formula, it is provable in this system that, for every $z$, the formula does not describe the graph of an injection from $[0,(n+1)z]$ to $[0,nz]$.

.

By standard witnessing theorems of bounded arithmetic, this means that there is a function $f$ such that $f(x)$ is always a prime larger than $x$ and $f$ is polynomial time computable using an oracle somewhere in the polynomial hierarchy. In fact, I gather that the following paper proves a suitable form of PHP in the weaker system  .

A. Maciel, T. Pitassi and A. R. Woods, A new proof of the weak pigeonhole principle, J. Comput. System Sci. 64 (2002), 843-872.

Improving this to  [or  , or  ] would give a polytime algorithm for producing large primes [modulo polynomial local search, modulo an NP oracle]. There is a lot of follow-up literature relating weak PHP and the infinitude of primes to other common problems in complexity theory and proof theory, but I haven’t had a chance to survey much of it.

On the other hand, a proof that  does not prove the infinitude of primes would also be interesting. This would not imply that there is no polynomial time algorithm for producing large primes, but it would show that the proof of correctness of such an algorithm would require stronger axioms. Possibly Peano arithmetic would suffice to prove correctness, but the proof may require second-order axioms (e.g. deep facts about zeros of $\zeta$) or, albeit unlikely, much worse (e.g. ZFC + large cardinals)!

Comment by François Dorais — August 14, 2009 @ 5:11 am |

Well, the NP-oracle algorithm is obvious: once we know that $[x,t(x)]$ contains a prime for some term $t$ of the language, we can repeatedly bisect this interval and query the oracle to see which side contains a prime. Since for every term $t$ there is a standard $k$ such that $|t(x)| \le |x|^k$ for all large $x$, this homes in on a prime in polynomial time. So I would conjecture that some weak Bertrand’s Postulate is provable in  , namely that there is a term $t$ such that this system proves that there is always a prime in the interval $[x,t(x)]$. This term could be much larger than $2x$, leaving room for weaker forms of PHP or some completely different idea…
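The bisection can be sketched as follows. The NP oracle is replaced by a brute-force stand-in, `contains_prime` (a hypothetical name), so the running time here is meaningless; what the sketch illustrates is the number of oracle calls, about $\log_2(t(x) - x)$, which is polynomial in $|x|$ whenever $|t(x)|$ is. Taking $t(x) = 2x$ (Bertrand’s postulate) in the usage line is an assumption for the example.

```python
def is_prime(n: int) -> bool:
    """Trial division; stands in for any correct primality test."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def contains_prime(lo: int, hi: int) -> bool:
    """Hypothetical stand-in for the NP oracle 'is there a prime in [lo, hi]?'.
    Brute force here; only the number of calls is of interest."""
    return any(is_prime(m) for m in range(lo, hi + 1))


def find_prime_by_bisection(lo: int, hi: int) -> tuple[int, int]:
    """Assuming [lo, hi] contains a prime, return (prime, oracle_calls).
    Invariant: the smallest prime of the original interval stays in [lo, hi]."""
    calls = 0
    while lo < hi:
        mid = (lo + hi) // 2
        calls += 1
        if contains_prime(lo, mid):
            hi = mid
        else:
            lo = mid + 1
    return lo, calls


x = 1000
p, calls = find_prime_by_bisection(x, 2 * x)  # [x, 2x] contains a prime
```

Each call at least halves the interval, so `calls` never exceeds the bit length of the interval’s size.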

The PLS possibility is intriguing. The dynamical system attack suggested by Tao may be on the right track (though we may want to increase the number of neighbors a bit). Note that the PLS algorithm doesn’t need to produce the prime itself, only some datum that can be decoded into a suitable prime in polytime, so that gives a bit more room to set up good cost functions. I don’t have definite thoughts, but this looks plausible.

I’m afraid I still have no intuition for the polytime possibility…

Comment by François Dorais — August 14, 2009 @ 6:51 am |

To bring this down to Earth a little, the weak pigeonhole principles mentioned above are related to the existence of collision-free hash functions (in the weakest of the two senses). Suppose a simple enough bounded formula (which is allowed to depend on $z$ too) always defines the graph of a function from $[0,t(z)]$ to $[0,z]$, for some term $t$ such that $t(z) > z$ for all $z$. Then, finding a  proof that, for all $z$, this formula does not represent the graph of an injection from $[0,t(z)]$ to $[0,z]$ is essentially equivalent to finding a polynomial time algorithm that always produces a collision for it, i.e., two numbers in $[0,t(z)]$ that map to the same value, at least for all standard numbers $z$.
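The naive way to find such a collision is exhaustive search, which needs up to $z + 2$ evaluations, i.e. time exponential in $|z|$. The sketch below shows only this trivial pigeonhole search, to contrast it with the polynomial time algorithm a bounded-arithmetic proof would provide. The function and the example map are hypothetical.

```python
def find_collision(h, t_z: int, z: int) -> tuple[int, int]:
    """Return u < v in [0, t_z] with h(u) == h(v), for h mapping [0, t_z] into [0, z].
    A collision exists when t_z > z (pigeonhole); this search evaluates h
    at most z + 2 times, which is exponential in the bit length of z."""
    if t_z <= z:
        raise ValueError("pigeonhole needs t_z > z")
    first_preimage = {}
    for u in range(t_z + 1):
        y = h(u)
        if not 0 <= y <= z:
            raise ValueError("h must map into [0, z]")
        if y in first_preimage:
            return first_preimage[y], u
        first_preimage[y] = u
    raise AssertionError("unreachable: pigeonhole guarantees a collision")


def example_map(u: int, z: int = 1000) -> int:
    """Hypothetical map from [0, 2z] into [0, z]."""
    return (u * u + 7) % (z + 1)


z = 1000
u, v = find_collision(example_map, 2 * z, z)
```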

A common bottleneck in the work relating PHP and primes is the difficulty of factoring, so I will provisionally assume that I have a factoring oracle. In this case, there is a simple bounded formula as above such that either there are polynomial time constructible primes in all sufficiently long intervals, or the formula describes the graph of a collision-free hash function. (Warning: I’ve only superficially checked the details of this argument, so I may have missed a sneaky inadmissible use of induction somewhere.) Because of the nature of this result, I suspect that similar (possibly better) results already exist in descriptive complexity. Perhaps this rings a bell for some readers?

A little side remark about factoring: it is probably not a mere coincidence that the main part of the most efficient factoring algorithms we know consists of finding a collision for some function…

Comment by François Dorais — August 14, 2009 @ 7:56 pm |

Below is the key result of Woods in its general form, which goes through in  + Factoring. The most subtle point is to show in this system that every prime that divides a number must occur in the prime factorization of that number.

The description of the function in the proof is  modulo the factoring oracle, so the witnessing theorem for  applies, and this can be used to relate collision-free hash functions with long intervals without polynomial time constructible primes (modulo factoring).

As usual  , and when  is a factorization it is always assumed that

 , and that the factorization is coded in a manner which is easily decodable in  .

Theorem (Woods). If every element of the interval $[b+1,b+a]$ is $a$-smooth, then there is an injection from ${[0,a|b|-1]}$ into ${[0,(1+\lfloor a/2 \rfloor)|b|+2a|a|-1]}.$

I give the construction in some detail since this is slightly more general than the proof in PWW and I wanted to convince myself that this does indeed work in  + Factoring. However, the tedious verifications are left to the reader.

Sketch of proof. For every $x \in [0,a|b|-1]$ there is a unique triple  such that

and, factoring  , we have  ,  , and

where

So  and  .

The function  will be linear on each interval described by a triple  as above. There are two main cases depending on whether (*) there is a  such that there are no multiples of  in $[b+1,b+a]$ and there are no multiples of  in $[b+1,b+i-1]$.

Case (*) holds. Let  be the first element of $[1,n]$ that satisfies (*). Then define

In other words,  maps the interval $[(i-1)|b|,i|b|-1]$ onto the interval of length  starting with

Since  , we have

Case (*) fails. Then either (1)  is not the first multiple of  in $[b+1,b+a]$, or (2)  is the first multiple of  in $[b+1,b+a]$ but there is a multiple of  in $[b+1,b+a]$.

Ad (1). Let  be the first multiple of  in $[b+1,b+a]$ (so  ). Note that  divides  . Factor  and say  . Then set

where  is as above and similarly

In other words,  maps the interval

onto the interval

which both have length

Ad (2). Let  be the first multiple of  in $[b+1,b+a]$ (so  ). Note that  divides  . Factor  and say  . Then set

where  and  are as above and

In other words,  maps the interval

onto the interval

which both have length

Note that in both subcases (1) and (2), we have  .
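Read contrapositively, Woods’s theorem says that whenever the target interval $[0,(1+\lfloor a/2\rfloor)|b|+2a|a|-1]$ is strictly shorter than $[0,a|b|-1]$, the pigeonhole principle forces some element of $[b+1,b+a]$ to have a prime factor larger than $a$. The Python sketch below checks this numerically for a few hypothetical parameter choices. It assumes $|x|$ is the binary length of $x$ (Python’s `int.bit_length`), the usual convention in bounded arithmetic; the helper names are hypothetical.

```python
def length(x: int) -> int:
    """|x| taken as the binary length of x (assumed convention)."""
    return x.bit_length()


def is_smooth(n: int, a: int) -> bool:
    """True iff every prime factor of n (n >= 1) is at most a."""
    for p in range(2, a + 1):
        while n % p == 0:
            n //= p
    return n == 1


def interval_is_smooth(a: int, b: int) -> bool:
    """Is every element of [b+1, b+a] a-smooth?"""
    return all(is_smooth(n, a) for n in range(b + 1, b + a + 1))


def codomain_smaller(a: int, b: int) -> bool:
    """Is (1 + floor(a/2))|b| + 2a|a| strictly less than a|b|?"""
    return (1 + a // 2) * length(b) + 2 * a * length(a) < a * length(b)


# Whenever the codomain is smaller, the interval must fail to be a-smooth.
for a in (6, 10, 20):
    b = 2 ** (8 * length(a))  # hypothetical choice of a large b
    assert codomain_smaller(a, b)
    assert not interval_is_smooth(a, b)
```

For such large $b$ the conclusion is also a consequence of Sylvester’s theorem on products of consecutive integers, so this is a consistency check, not new evidence.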

Comment by François Dorais — August 15, 2009 @ 2:15 am |

I am trying to understand this construction. What happens if two different triples  and  with  satisfy condition (*)? Is there an argument that guarantees that the two corresponding  are different? Otherwise I do not see why the mapping is injective. I’m sorry if it’s a dumb question.

Comment by Anonymous — August 15, 2009 @ 4:15 pm |

The verifications are indeed tedious! There are two cases. If the exponent of  is the same (say  ) in  and  , then they cannot both be the first multiple of  in $[b+1,b+a]$, as required by the second half of (*). If the exponents are different, then whichever has the smaller exponent fails the first half of (*).

Comment by François Dorais — August 15, 2009 @ 6:47 pm |

The main reason for analyzing the question in $S^1_2$ is the following result of Sam Buss.

Witnessing Theorem. Let $\varphi(x,y)$ be a $\Sigma^b_1$ formula. If $S^1_2 \vdash \forall x\,\exists y\,\varphi(x,y)$, then there is a polynomial time computable function $f$ such that $\forall x\,\varphi(x,f(x))$ holds.

If $\varphi(x,y)$ is equivalent to ‘$y > x$ and $y$ is prime’, then from a proof of the infinitude of primes in $S^1_2$ we could automatically extract a polytime algorithm for producing large primes. On the other hand, if the infinitude of primes is not provable in $S^1_2$, then we would not necessarily conclude that there is no polytime algorithm for producing large primes, but the correctness of such an algorithm would not be formalizable in $S^1_2$. (Basically, the proof would require much more powerful tools than elementary arithmetic.)

There is a little snag here: the usual formula for ‘$y$ is prime’, namely

$y > 1 \land \forall u \le y\,\forall v \le y\,(uv = y \rightarrow u = 1 \lor v = 1)$,

is $\Pi^b_1$ instead of $\Sigma^b_1$. Of course, this is not a problem with a factoring oracle, but this is a nagging assumption. The AKS algorithm does provide a $\Sigma^b_1$ formula to express ‘$y$ is prime’. The snag is that the correctness of the AKS algorithm may not be provable in $S^1_2$, i.e., it is conceivable that a model of $S^1_2$ may contain an infinitude of “AKS pseudoprimes” but only a bounded number of actual primes. While the extracted algorithm for producing “AKS pseudoprimes” would still produce actual primes in the real world, this situation would be rather awkward.

The problem with the correctness of AKS in $S^1_2$ is proving the existence of a suitable parameter $r$ (as in Lemma 3 of Terry Tao’s post on AKS). The simple proof requires finding a small prime $r$ that does not divide $n^i - 1$ for $1 \le i \le \log^2 n$. So this seems to require the infinitude of prime lengths, i.e., a proof of

(Note that the quantifiers in  are sharply bounded.) This looks a lot easier to tackle than the infinitude of primes, but it is still not that easy. Indeed, this is a lot like trying to prove the infinitude of primes in $I\Delta_0$, which is an open problem. I don’t know how to do it, so let me pose it as a problem.
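For readers less familiar with AKS, the parameter search itself is elementary. The Python sketch below follows the description above: it returns the smallest prime $r$ that does not divide $n$ and does not divide $n^i - 1$ for $1 \le i \le \lfloor \log_2^2 n \rfloor$, which is the same as saying that the multiplicative order of $n$ modulo $r$ exceeds $\log_2^2 n$. Using base-2 logarithms and excluding divisors of $n$ are assumptions borrowed from the AKS paper, and `aks_parameter` is a hypothetical name.

```python
import math


def is_prime(n: int) -> bool:
    """Trial division; r stays small, so this is cheap."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def order_exceeds(n: int, r: int, bound: int) -> bool:
    """True iff n^i != 1 (mod r) for every 1 <= i <= bound."""
    x = 1
    for _ in range(bound):
        x = x * n % r
        if x == 1:
            return False
    return True


def aks_parameter(n: int) -> int:
    """Smallest prime r with r not dividing n and r not dividing n^i - 1
    for 1 <= i <= floor(log2(n)^2), assuming n >= 2."""
    bound = math.floor(math.log2(n) ** 2)
    r = 2
    while not (is_prime(r) and n % r != 0 and order_exceeds(n, r, bound)):
        r += 1
    return r


r = aks_parameter(1009)
```

The loop terminates by the AKS lemma, which bounds the answer by $\max(3, \lceil \log_2^5 n \rceil)$. Inside $S^1_2$, that termination argument is exactly the part that needs the infinitude of prime lengths.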

Problem: Prove the infinitude of prime lengths in $S^1_2$.

A negative answer would be fine, but be aware that a negative answer would also solve negatively the question of the infinitude of primes in $I\Delta_0$.

This difficulty suggests the possibility of working in  instead. This system is just like  except that it has a new basic function symbol  . (Yes, there is a whole hierarchy of sharp functions, each of which has subexponential growth.) In  the lengths are closed under the sharp operation and the PWW proof in  can be reproduced for lengths. I’ve heard it claimed that the Witnessing Theorem above generalizes to  with “polynomial” replaced by “ever-so-slightly-superpolynomial” (i.e., bounded by  where  is a term of  ), which is likely true, but I never saw a proof…

Comment by François Dorais — August 15, 2009 @ 10:40 pm |

Here’s an idea for the problem. Given a length  , use a number  of length  to do a sieve of Eratosthenes. Place a 1 bit in the  -th digit of  iff  is a multiple of some  . Show by induction on  (or PIND on  ) that  has at least one 0 bit beyond the  -th. Anyway, this doesn’t immediately give the right parameter  , but a similar trick might work…
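To make the “sieve inside a single number” idea concrete, here is a Python sketch. The exact marking condition and the length of the sieving number are lost above, so both are assumptions: bit $i$ is set iff $i < 2$ or $i$ is a proper multiple of some $j \ge 2$, and the number has $2\ell + 1$ bits, so that Bertrand’s postulate guarantees a 0 bit beyond position $\ell$. The comment’s point is to prove that last fact by induction inside the theory, which the code of course does not do. Function names are hypothetical.

```python
def sieve_word(n_bits: int) -> int:
    """Return s whose bit i (0 <= i < n_bits) is 1 iff i is not prime:
    bits 0 and 1 are set, as is every proper multiple of each j >= 2."""
    if n_bits < 2:
        raise ValueError("need at least two bits")
    s = 0b11
    for j in range(2, n_bits):
        for m in range(2 * j, n_bits, j):
            s |= 1 << m
    return s


def zero_bits_after(s: int, ell: int, n_bits: int) -> list[int]:
    """Positions i with ell < i < n_bits whose bit in s is 0 (these are the primes)."""
    return [i for i in range(ell + 1, n_bits) if not (s >> i) & 1]


ell = 10
s = sieve_word(2 * ell + 1)  # hypothetical choice: 2*ell + 1 bits
primes_beyond = zero_bits_after(s, ell, 2 * ell + 1)
```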

Comment by François Dorais — August 16, 2009 @ 12:17 am |

The trick above allows us to count just about anything that involves only length-sized numbers. So basic sieve methods should go through and give lower bounds on the density of length primes. This is enough to pick a suitable parameter  (which doesn’t have to be optimal since any  would be just fine for our purposes).

Propositions 4 and 5 (again referring to Terry Tao’s presentation of AKS) are more problematic since  is too big to count. Proving that the various products in the proof of Proposition 4 are distinct is fine since the polynomials all have length-size degree. The little pigeonhole trick at the start of the proof of Proposition 5 is fine since the exponents  are all length-size. The final part of the proof of Proposition 5 is problematic.

It seems that this boils down to another pigeonhole problem. This one is a little different since formulating it as an injection from $[0,2^t]$ to $[0,n^{\sqrt{t}}]$ seems to require solving the discrete logarithm problem! Maybe there’s a better way to think about this?

Comment by François Dorais — August 16, 2009 @ 3:35 am |

I think it’s time to recap some and draw some preliminary conclusions.

Here is a quick guide for those who are unfamiliar with bounded arithmetic: the system $S^1_2$ is essentially the minimal system of arithmetic that can formalize all polynomial time algorithms. The system $T^1_2$ is marginally stronger and allows some simple search algorithms (usually optimization algorithms) to be formalized too. So “provable in $S^1_2$” can be read as “provable using exclusively polynomial-time methods”. Similarly, “provable in $T^1_2$” can be read as “provable using polynomial-time methods and some simple optimization methods, but nothing else”.

More precisely, here are the two basic facts which stem from the witnessing theorems of bounded arithmetic:

(1) From a proof of the infinitude of AKS-primes in $S^1_2$ one can automatically extract a polynomial time algorithm for producing primes (in the real world).

(2) From a proof of the infinitude of AKS-primes in $T^1_2$ one can automatically extract a polynomial local search (PLS) algorithm for producing primes (in the real world).

Since the AKS theorem does not appear to be formalizable in  , I use “AKS-prime” for numbers that pass the AKS test and “prime” for numbers without proper divisors. (Of course, there is no such distinction in the real world.) A PLS algorithm wouldn’t be as good as a polytime algorithm, but such an algorithm would show that finding primes is either not NP-hard or NP = co-NP. See also the recent work of Beckmann and Buss [http://www.math.ucsd.edu/~sbuss/ResearchWeb/piPLS/], which allows one to draw similar conclusions from proofs in  for  .

The line of thought using a factoring oracle and relating finding primes with some pigeonhole principles has some sketchy aspects, and I can’t draw anything very useful from it at this time. Basically, while the factoring oracle may be inoffensive, I have a hard time convincing myself of that. So I can only draw conclusions from a  proof of polytime factorization, which is even more unlikely than polytime factorization.

Now for some preliminary conclusions, which are only heuristic but are worth keeping in mind.

While I initially thought that the non-provability of the AKS theorem in  would be a negative, I now realize that it could be a blessing. Indeed, it suggests that AKS-like congruences may be more amenable to polynomial time attacks than pure divisibility (e.g. attacks using sieves and prime density). So Avi Wigderson’s proposal (Research Thread II, comment #18) may deserve more attention than it has been getting thus far. Moreover, result (2) suggests first trying something with an optimization flavor to it.

While density methods and variations based on divisibility may be somewhat limited for polynomial time attacks, this does not mean that they should be neglected. Indeed, analytic number theory provides a wealth of second-order information about this which may help evade these limitations. Perhaps a hybrid solution of the following type: try solving the AKS congruences using this method; if that fails, then there must be an unusually large number of primes in this small set.

Comment by François Dorais — August 16, 2009 @ 6:21 pm |

Here is the simple formula you are looking for:

arctan(tan(Pi*bernoulli(2*n)*GAMMA(2*n+1)))*(2*n+1)/Pi= 1 for Prime or zero for non-prime!

In log form:

i*(n+1/2)*log((1-tan(Pi*bernoulli(2*n)*GAMMA(2*n+1)))*(2*n+1)/Pi)/(1+tan(Pi*bernoulli(2*n)*GAMMA(2*n+1)))*(2*n+1)/Pi) )= 1 for Prime or zero for non-prime!

Comment by Michael Anthony — March 14, 2010 @ 1:10 pm |

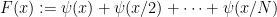

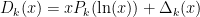

Here’s another suggestion, just using the usual Riemann zeta function: our explicit formula for  is  . Now maybe we can work with a small set of values  , specifically chosen to nullify the effect of a large proportion of the zeros up to height, say,  or so. How? Well, here is a first attempt: consider  (and of course we will want to show, say, that  is “large”, so that one of the intervals $[x/n, (x+\sqrt{x})/n]$ has loads of primes). Applying the explicit formula, we find that  . Notice here that  is a fragment of the Riemann zeta function, and its closeness to $\zeta(s)$ can be determined through the usual integration-by-parts analytic continuation  (where $\{t\}$ is the fractional part of $t$) for  . But we know that  , so I think this says that our Dirichlet polynomials should all be close to  (I would need to check my calculations) when evaluated at these roots  . If this is correct, then we get a formula something like  , for some error  . The fact that we get essentially a  in the denominator is good news, assuming I haven’t made an error.
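For reference, the standard integration-by-parts continuation can be written out as follows; the letter $N$ for the length of the Dirichlet polynomial is our notation, and we cannot confirm that this matches the exact calculation intended above. For $\operatorname{Re} s > 1$ and an integer $N \ge 1$, partial summation against $\lfloor t \rfloor = t - \{t\}$ gives an identity whose right-hand side is analytic for $\operatorname{Re} s > 0$, $s \ne 1$:

$$\zeta(s) = \sum_{n \le N} n^{-s} + \frac{N^{1-s}}{s-1} - s \int_N^\infty \{t\}\, t^{-s-1}\, dt .$$

At a nontrivial zero $\rho = \beta + i\gamma$ the left side vanishes, so

$$\sum_{n \le N} n^{-\rho} = \frac{N^{1-\rho}}{1-\rho} + \rho \int_N^\infty \{t\}\, t^{-\rho-1}\, dt, \qquad \Bigl|\rho \int_N^\infty \{t\}\, t^{-\rho-1}\, dt\Bigr| \le \frac{|\rho|}{\beta}\, N^{-\beta}.$$

In words: evaluated at a zero, the partial sum is close to $N^{1-\rho}/(1-\rho)$ as long as $|\rho|$ is small compared with $N^{\beta}$.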

Assuming this is all correct, the skeptic in me says that when the dust has settled we get no gain whatsoever.

Comment by Ernie Croot — August 14, 2009 @ 4:11 pm |

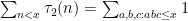

Cramér’s conjecture would permit one to find a prime by testing all the integers in a single very short interval. Does the problem get easier if one is allowed to test the integers in a collection of widely scattered very short intervals? The question is inspired by the observation that Chebyshev’s method allows us to count weighted prime powers in the set

with extreme precision, in fact

What I am suggesting is that maybe the explicit formula approach can be made more powerful with the extra flexibility of searching in several very short intervals. If there are not too many of them and they are very short, the additional work will not be significant.
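The comment does not spell out the identity behind Chebyshev’s method. One standard form, assumed here, is $\log \lfloor x\rfloor! = \sum_{m \le x} \psi(x/m)$, which follows from $\log n = \sum_{d \mid n} \Lambda(d)$. Exponentiating, and using $e^{\psi(y)} = \operatorname{lcm}(1,\dots,\lfloor y\rfloor)$, it becomes an exact integer identity, which the sketch below checks (helper names are ours; `math.lcm` needs Python 3.9+).

```python
import math

def lcm_up_to(y):
    """exp(psi(y)) = lcm(1, 2, ..., y) for an integer y >= 1."""
    return math.lcm(*range(1, y + 1))

def chebyshev_product(x):
    """prod_{m <= x} exp(psi(x/m)), computed exactly with integers."""
    result = 1
    for m in range(1, x + 1):
        result *= lcm_up_to(x // m)  # x // m == floor(x/m)
    return result

if __name__ == "__main__":
    for x in (10, 50, 200):
        assert chebyshev_product(x) == math.factorial(x)
    print("log(x!) == sum_{m<=x} psi(x/m) verified for x = 10, 50, 200")
```

For $x = 10$ the factors are $2520 \cdot 60 \cdot 6 \cdot 2 \cdot 2 = 3628800 = 10!$.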

Comment by Anon — August 14, 2009 @ 5:21 pm |

I forgot to say that what I am suggesting is a slight elaboration on Ernie’s strategy, to consider something like

$\psi(x + h(x)) - \psi(x) + \psi(c_2x + h_2(x)) - \psi(c_2x) + \cdots + \psi(c_mx + h_m(x)) - \psi(c_mx)$

with suitable $c_2,\ldots,c_m$ and $h(x),h_2(x),\ldots,h_m(x)$ to knock out terms in the explicit formula. Here $m$ could be as large as a power of $\log(x)$.
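For experimenting with this combination, here is a minimal sketch that evaluates it exactly for numeric inputs. It writes the first term with $c_1 = 1$, treats each $h_j(x)$ as a number already evaluated at the chosen $x$, and uses a sieve for the von Mangoldt function; the function names and any particular choice of $c_j$, $h_j$ are illustrative, not part of the proposal.

```python
import math

def von_mangoldt_table(limit):
    """Return Lambda(n) for 0 <= n <= limit (log p if n = p^k, else 0)."""
    lam = [0.0] * (limit + 1)
    is_prime = [True] * (limit + 1)
    for p in range(2, limit + 1):
        if not is_prime[p]:
            continue
        for multiple in range(p * p, limit + 1, p):
            is_prime[multiple] = False
        power = p
        while power <= limit:  # every prime power p^k gets weight log p
            lam[power] = math.log(p)
            power *= p
    return lam

def multi_interval_psi(x, cs, hs):
    """sum_j [psi(c_j x + h_j) - psi(c_j x)]: log-weighted count of prime
    powers in the intervals (c_j x, c_j x + h_j], summed over j."""
    endpoints = [(math.floor(c * x), math.floor(c * x + h)) for c, h in zip(cs, hs)]
    limit = max(right for _, right in endpoints)
    lam = von_mangoldt_table(limit)
    psi = [0.0] * (limit + 1)  # psi[n] = sum_{k <= n} Lambda(k)
    for n in range(1, limit + 1):
        psi[n] = psi[n - 1] + lam[n]
    return sum(psi[right] - psi[left] for left, right in endpoints)
```

For example, `multi_interval_psi(10, [1], [10])` equals $\log(11 \cdot 13 \cdot 2 \cdot 17 \cdot 19)$, coming from the prime powers 11, 13, 16, 17, 19 in $(10, 20]$.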

Comment by Anon — August 14, 2009 @ 5:40 pm |

That *may* work, but I would think you will need the  to be quite large (  or so, which is what we are aiming to slightly improve after all), but I doubt you could knock out lots of terms with $m$ only of size a power of log: with only a power of log number of terms I would think you would start losing control over the corresponding terms in the explicit formula for zeros a little bigger than $m$ itself (which is a power of log).

A serious problem with this sort of approach is that you don’t know ahead of time where the zeros of zeta are located on the critical line (assuming RH), and so you are sort of forced to choose the $c_j$’s and the $h_j$’s to conform to some short piece of the zeta function. Well, there may be other nice choices out there (like terms of zeta smoothed in some way, or maybe terms coming from a short Euler product, or maybe a short segment of a completely different zeta function with which you play the usual zero-repulsion games), but if so then I would think you don’t get anything much better.

It just occurred to me that maybe one can modify an idea of Jean-Louis Nicolas (on a completely unrelated sort of problem). I’ll think about it…

Comment by Ernie Croot — August 14, 2009 @ 7:21 pm |

I just realized I think there is a factor  missing in my calculations. Probably this means that one would need to do some smoothing (of the Dirichlet polynomial) for there to be any chance of finding primes more quickly by this method. At any rate, I don’t think these methods have a chance of breaking the square-root barrier.

Here is the calculation in detail: for arbitrarily small $\epsilon > 0$ we have

$$\sum_{n=1}^N \frac{1}{n^s} = \int_{1-\epsilon}^N \frac{d[x]}{x^s} = \left.\frac{[x]}{x^s}\right|_{1-\epsilon}^N + s\int_{1-\epsilon}^N \frac{[x]\,dx}{x^{s+1}}.$$

Here, $[x] = x - \{x\}$, where $\{x\}$ denotes the fractional part of $x$. Rewriting the $[x]$ in the integral as $x - \{x\}$, we get that the Dirichlet polynomial is  . Now, these last two terms here are approximately  for  and $N$ large, because  , making the integral absolutely convergent; of course we are using here the formula  . If we have  , then we get that our Dirichlet polynomial is approximately  .
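For reference, here is one way the omitted steps can be completed. This is our own working under the stated assumptions ($N$ a positive integer, $s = \sigma + it$ with $\sigma > 0$ and $s \ne 1$), not a reconstruction of Croot’s exact expressions: the boundary term equals $N^{1-s}$ because $N$ is an integer and $[1-\epsilon] = 0$, and the integrand vanishes on $[1-\epsilon, 1)$.

```latex
% Assumptions: N is a positive integer; s = \sigma + it with \sigma > 0, s \neq 1.
\begin{aligned}
\sum_{n=1}^{N} n^{-s}
  &= N^{1-s} + s\int_{1}^{N} x^{-s}\,dx - s\int_{1}^{N} \{x\}\,x^{-s-1}\,dx \\
  &= \frac{N^{1-s}}{1-s} + \frac{s}{s-1} - s\int_{1}^{N} \{x\}\,x^{-s-1}\,dx \\
  &= \zeta(s) + \frac{N^{1-s}}{1-s} + s\int_{N}^{\infty} \{x\}\,x^{-s-1}\,dx ,
\end{aligned}
% using \zeta(s) = s/(s-1) - s \int_1^\infty \{x\} x^{-s-1} dx  (valid for \sigma > 0).
% Since |\{x\} x^{-s-1}| \le x^{-\sigma-1}, the tail obeys
\Bigl|\, s\int_{N}^{\infty} \{x\}\,x^{-s-1}\,dx \Bigr| \le \frac{|s|\,N^{-\sigma}}{\sigma},
% so at a zero \rho = \sigma + it of zeta:
\sum_{n=1}^{N} n^{-\rho} = \frac{N^{1-\rho}}{1-\rho} + O\!\left(\frac{|\rho|\,N^{-\sigma}}{\sigma}\right).
```

Under RH ($\sigma = 1/2$) the error is $O(|\rho| N^{-1/2})$ against a main term of size $N^{1/2}/|1-\rho|$, so the approximation only becomes useful once $N$ is large compared with $|\rho|^2$.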

Comment by Ernie Croot — August 15, 2009 @ 6:05 pm |

Please correct a typo in Gamma: Gamma(2*n) should be Gamma(2*n+1). In my paper “Primes and the Riemann Hypothesis”, I show that the primes obey the relation frac(theta)=arctan(tan(theta)). You see, the primes obey this rule for theta = Pi*bernoulli(2*n)*Gamma(2*n+1) only if 2*n+1 is a prime. The function theta is a consequence of the Riemann Hypothesis: s=1/2+i*tan(theta)/2.

In fact, I show that:

Zeta(s) = sum(-2^nu*bernoulli(nu)*GAMMA(1-s)/(factorial(nu)*GAMMA(-s-nu+2)), nu = 0 .. infinity); and that when the function vanishes, s/(1-s) = (1/2+(1/2*I)*tan(theta))/(1/2-(1/2*I)*tan(theta))= exp(theta) iff RH is true. The connection with primes is obvious, since when s=-2*n and 2*n+1 is a prime, the function takes an exponential form, and the von Staudt–Clausen theorem makes it clear that the primes can be counted.

Comment by Michael Anthony — March 14, 2010 @ 12:50 pm |

Here is an elementary observation, which replaces the problem of finding an integer with a factor in a range with that of finding an integer without a factor in a range.

Suppose one is looking for a prime larger than u. Observe that if a number $n$ does not have any prime factor larger than u, then it must have a factor in the interval $[u,u^2]$, by multiplying the prime factors of n (which are all at most u) one at a time until one falls into this interval. In fact, given any number  , one must have a factor in the interval $[m,mu]$ by the same reasoning.
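The greedy argument can be made concrete. The sketch below returns a divisor of a u-smooth $n$ lying in $[m, mu]$, assuming $n \ge m \ge 1$ (the argument needs $n \ge m$; taking $m = u$ gives the $[u, u^2]$ case). Trial division stands in for the factoring oracle, and the helper names are illustrative.

```python
def prime_factors(n):
    """Prime factors of n with multiplicity, in ascending order (trial
    division, standing in for the factoring oracle)."""
    factors, d = [], 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors

def divisor_in_window(n, m, u):
    """For a u-smooth n >= m >= 1, return a divisor of n in [m, m*u].

    Multiply the prime factors in one at a time. The partial product before
    the last step is below m and each factor is at most u, so the first
    partial product reaching m is below m*u."""
    factors = prime_factors(n)
    if any(p > u for p in factors):
        raise ValueError("n has a prime factor larger than u")
    if n < m:
        raise ValueError("need n >= m")
    product = 1
    for p in factors:
        if product >= m:
            break
        product *= p
    return product
```

For instance, with $n = 432 = 2^4 \cdot 3^3$, $m = 10$, $u = 3$ the partial products are 2, 4, 8, 16, so `divisor_in_window(432, 10, 3)` returns 16, which lies in $[10, 30]$.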

So, to find a prime larger than u in at most a steps, one just needs to find an interval $[n,n+a]$ and an interval $[m,mu]$ with  such that the multiples of all the integers in $[m,mu]$ do not fully cover $[n,n+a]$.
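Read in reverse, this gives a search procedure: scan $[n, n+a]$, and any integer in it with no divisor in $[m, mu]$ must, by the observation above, have a prime factor larger than u. The sketch assumes $m \le n$ (our guess at the missing condition, so that every scanned integer is at least $m$) and uses trial division in place of the oracle. It checks divisors naively, so it illustrates the logic rather than an efficient algorithm; the function names are hypothetical.

```python
def is_covered(k, m, u):
    """True if k is a multiple of some integer in [m, m*u]."""
    return any(k % d == 0 for d in range(m, m * u + 1))

def largest_prime_factor(n):
    """Largest prime factor of n >= 2 by trial division (oracle stand-in)."""
    largest, d = 1, 2
    while d * d <= n:
        while n % d == 0:
            largest, n = d, n // d
        d += 1
    return n if n > 1 else largest  # a leftover n > 1 is the largest prime

def prime_from_gap(n, a, m, u):
    """Scan [n, n+a]; an uncovered k >= m is not u-smooth, so its largest
    prime factor exceeds u. Return None if [m, m*u] covers the window."""
    assert m <= n, "the argument needs every k in the window to be >= m"
    for k in range(n, n + a + 1):
        if not is_covered(k, m, u):
            return largest_prime_factor(k)
    return None
```

With $[n, n+a] = [100, 105]$ and $[m, mu] = [3, 6]$, the integer 100 is covered (by 4) but 101 is not, so `prime_from_gap(100, 5, 3, 2)` returns 101, a prime larger than $u = 2$.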

This seems beyond the reach of sieve theory, but at least we no longer have the word “prime” in the problem…

Comment by Terence Tao — August 15, 2009 @ 3:39 am |

Here is a variant of the above idea.

Suppose we are given a factoring oracle that factors k-digit numbers in O(1) time. What’s the largest prime we can currently generate in O(k) time? Trivially we can get a prime of size k or so (up to logs) by scanning [k+1,2k] for primes. If one assumes ABC and factors all the numbers in, say, $[2^k+1, 2^k+k]$, one can get a prime of size  at least (see my comment in II.10). But this is so far the best known.
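A sketch of the ABC-based procedure (factor every integer in $[2^k+1, 2^k+k]$ and keep the largest prime factor seen) follows. Trial division stands in for the O(1) factoring oracle, so it is only practical for small k, and it does not check the conditional lower bound on the size of the prime it finds; the function names are illustrative.

```python
def largest_prime_factor(n):
    """Largest prime factor of n >= 2 by trial division (oracle stand-in)."""
    largest, d = 1, 2
    while d * d <= n:
        while n % d == 0:
            largest, n = d, n // d
        d += 1
    return n if n > 1 else largest

def best_prime_from_interval(k):
    """Factor each integer in [2^k + 1, 2^k + k]; return the largest prime factor."""
    start = 2 ** k
    return max(largest_prime_factor(n) for n in range(start + 1, start + k + 1))
```

For $k = 10$ the interval is $[1025, 1034]$, which happens to contain the prime 1033, so `best_prime_from_interval(10)` returns 1033.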

Suppose we wanted to show that $[2^k+1,2^k+k]$ had an integer with a prime factor of at least  , say. If not, then all numbers here are  -smooth. I think this (perhaps with a dash of ABC) shows that all numbers here have a huge number of divisors,  or so. So perhaps we can show that the divisor function  cannot be so huge on this interval?
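To get a feel for the quantities in this heuristic, one might tabulate, for small k, the divisor count $\tau(n)$ and the largest prime factor of each $n$ in $[2^k+1, 2^k+k]$. Here $\tau$ is our notation for the divisor function (the comment’s symbol is missing), trial division again stands in for the oracle, and the function names are hypothetical.

```python
from collections import Counter

def factorization(n):
    """Prime factorization of n >= 2 as a Counter {p: exponent} (trial division)."""
    counts, d = Counter(), 2
    while d * d <= n:
        while n % d == 0:
            counts[d] += 1
            n //= d
        d += 1
    if n > 1:
        counts[n] += 1
    return counts

def divisor_count(counts):
    """tau(n) = product of (e_p + 1) over the prime factorization."""
    result = 1
    for e in counts.values():
        result *= e + 1
    return result

def interval_profile(k):
    """Map each n in [2^k + 1, 2^k + k] to (tau(n), largest prime factor of n)."""
    start = 2 ** k
    profile = {}
    for n in range(start + 1, start + k + 1):
        counts = factorization(n)
        profile[n] = (divisor_count(counts), max(counts))
    return profile
```

For $k = 10$ the largest divisor count in $[1025, 1034]$ is 16 (attained at $1026 = 2 \cdot 3^3 \cdot 19$ and $1032 = 2^3 \cdot 3 \cdot 43$), far from the huge values that full smoothness would force.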

Comment by Terence Tao — August 15, 2009 @ 3:10 pm |

This might already have been discussed… Let  , and  be the largest prime factor of  . Since we have a factoring oracle, this is a deterministic way of generating primes; however, what is the best known lower bound on $a_j$? It increases quite rapidly, but it is not guaranteed that  . For example, the first elements of this sequence are  .
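The formulas defining the sequence are missing above. Assuming it is the Euclid–Mullin-type recursion $a_1 = 2$, $a_{j+1}$ = largest prime factor of $a_1 a_2 \cdots a_j + 1$ (our reading), a sketch with trial division in place of the factoring oracle is below. The product grows very quickly, so trial division only gets through the first half-dozen or so terms; the function names are illustrative.

```python
def largest_prime_factor(n):
    """Largest prime factor of n >= 2 by trial division (oracle stand-in)."""
    largest, d = 1, 2
    while d * d <= n:
        while n % d == 0:
            largest, n = d, n // d
        d += 1
    return n if n > 1 else largest

def euclid_mullin_largest(terms):
    """First `terms` values of a_1 = 2, a_{j+1} = P(a_1 * ... * a_j + 1),
    where P(n) denotes the largest prime factor of n (assumed recursion)."""
    seq, product = [2], 2
    while len(seq) < terms:
        p = largest_prime_factor(product + 1)
        seq.append(p)
        product *= p
    return seq
```

Under this reading the first terms are 2, 3, 7, 43, 139, 50207; for instance $2 \cdot 3 \cdot 7 \cdot 43 + 1 = 1807 = 13 \cdot 139$.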

Comment by Anonymous — August 16, 2009 @ 1:41 am |

I think the lower bound is something like 53. It will not go through all values: there is a proof that some lower values are forbidden. It is also not monotonic, but I don’t think there are any nice lower bounds.

Comment by Kristal Cantwell — August 16, 2009 @ 3:25 am |


I think that one can compute the parity of  using only  or so bit operations, but maybe I am mistaken. If I am right, then if one knows that, say, the interval $[x,2x]$ contains an odd number of primes (as given to one by an oracle, perhaps), then one can use a binary search to locate one using only about  (times some logs) or so bit operations.
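The binary-search step can be sketched as follows. The parity oracle here is a hypothetical stand-in (a sieve with prefix counts), not the fast parity computation the comment proposes; the point is only that if an interval contains an odd number of primes, exactly one of its two halves does too, so about $\log_2$ of its length parity queries pin down a single prime.

```python
def primes_up_to(limit):
    """Sieve of Eratosthenes: is_prime[n] for 0 <= n <= limit (limit >= 1)."""
    is_prime = [False, False] + [True] * (limit - 1)
    for p in range(2, int(limit ** 0.5) + 1):
        if is_prime[p]:
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
    return is_prime

def make_parity_oracle(limit):
    """Stand-in oracle: parity(a, b) = (number of primes in [a, b]) mod 2."""
    is_prime = primes_up_to(limit)
    prefix = [0] * (limit + 1)  # prefix[n] = pi(n)
    for n in range(1, limit + 1):
        prefix[n] = prefix[n - 1] + is_prime[n]
    return lambda a, b: (prefix[b] - prefix[a - 1]) % 2

def find_prime_by_parity(lo, hi, parity):
    """Given an odd number of primes in [lo, hi], keep halving and retain a
    half with an odd count; the final single point must be prime."""
    assert parity(lo, hi) == 1
    while lo < hi:
        mid = (lo + hi) // 2
        if parity(lo, mid) == 1:
            hi = mid
        else:
            lo = mid + 1
    return lo
```

For example, $[100, 200]$ contains 21 primes, so `find_prime_by_parity(100, 200, make_parity_oracle(200))` succeeds after about seven parity queries.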

Here is the idea: it is fairly easy to compute

in time  or so, using the fact that for  not a square,  is twice the number of divisors of  that are less than  ; so, roughly, forgetting the contribution of the squares, the sum is

Consider S modulo 4. An individual  will be divisible by 4 if there are at least two primes that each divide  to an odd power, or a single prime that divides  to a power that is 3 mod 4. So, the only  ’s for which  is not divisible by 4 are the numbers of the form  ,  is prime not dividing  , along with the squares; and we have that  . This means that

Note that this last sum can be computed using only about  or so bit operations; and so, using only this many bit operations we can easily compute the parity of

Taking into account the fact that there are at most  numbers of the form  ,  , it is easy to see that we can just as easily compute the parity of

using only  or so bit operations. But in fact we can just as quickly evaluate the parity of the sum

where  will be chosen to depend on a parameter  below. The reason we can as quickly evaluate this sum (well, up to a factor  or so) is that we just need to restrict our initial sum over  to those  that are coprime to  .
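Two ingredients of this argument can be checked by brute force. The sketch below assumes (since the defining display is missing) that S is the divisor sum $S(x) = \sum_{n \le x} \tau(n)$. It computes S in about $\sqrt{x}$ steps with the Dirichlet hyperbola method, the exact form of the divisor-pairing fact used above, and it verifies our reading of the mod 4 classification: $\tau(n) \not\equiv 0 \pmod 4$ exactly when $n$ is a square, or exactly one prime divides $n$ to an odd power and that power is $1 \bmod 4$. Function names are illustrative, and the later steps (restricting to a coprimality condition, the parity of the prime count) are not implemented.

```python
import math

def divisor_sum_hyperbola(x):
    """S(x) = sum_{n <= x} tau(n) in O(sqrt(x)) steps (hyperbola method):
    S(x) = 2 * sum_{d <= sqrt(x)} floor(x / d) - floor(sqrt(x))^2."""
    r = math.isqrt(x)
    return 2 * sum(x // d for d in range(1, r + 1)) - r * r

def prime_exponents(n):
    """Exponents in the prime factorization of n, by trial division."""
    exps, d = [], 2
    while d * d <= n:
        if n % d == 0:
            e = 0
            while n % d == 0:
                n //= d
                e += 1
            exps.append(e)
        d += 1
    if n > 1:
        exps.append(1)
    return exps

def tau(n):
    """Number of divisors: product of (e + 1) over the prime exponents."""
    return math.prod(e + 1 for e in prime_exponents(n))

def tau_not_divisible_by_4(n):
    """Predicted by the mod 4 argument: n is a square, or exactly one prime
    divides n to an odd power and that power is 1 mod 4."""
    odd = [e for e in prime_exponents(n) if e % 2 == 1]
    return not odd or (len(odd) == 1 and odd[0] % 4 == 1)

if __name__ == "__main__":
    x = 10_000
    assert divisor_sum_hyperbola(x) == sum(tau(n) for n in range(1, x + 1))
    assert all((tau(n) % 4 != 0) == tau_not_divisible_by_4(n) for n in range(1, x + 1))
    print("S(x) mod 4 =", divisor_sum_hyperbola(x) % 4)
```

For $x = 10$ the hyperbola formula gives $2(10 + 5 + 3) - 9 = 27$, matching $\tau(1) + \dots + \tau(10)$.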

Now let  denote the time required to compute  modulo 2. Then clearly we have that

since to compute the parity of  we just need to compute